Configuration to allow an Integration Flow developed with ACE Toolkit to connect to Event Streams

- Lab Overview

- Prerequisites

- Lab Environment

- 0 - Preparation

- 1 - Create a Topic in Event Streams

- 2 - Create SCRAM credentials to connect to Event Streams

- 3 - Create Resources in the ACE Toolkit

- 4 - Create Configurations in ACE Dashboard

- 5 - Deploy BAR file using ACE Dashboard

- Summary

Lab Overview

The most interesting and impactful new applications in an enterprise are those that provide interactive experiences by reacting to existing systems carrying out a business function. In this tutorial, we take a look at an example.

This session explains the configuration needed to deploy an Integration Flow, developed with the ACE Toolkit, that uses the Kafka nodes to connect to an Event Streams cluster. The flow runs in the ACE Integration Server Certified Container (ACEcc) as part of IBM Cloud Pak for Integration.

Lab takeaways:

- Creating and configuring an Event Streams topic

- Configuring an App Connect Enterprise message flow using the App Connect Enterprise Toolkit

- Configuring the App Connect Enterprise service

- Deploying an App Connect BAR file to an App Connect Enterprise server

- Testing the App Connect Enterprise API by sending a message to Event Streams

Prerequisites

- You need an OpenShift environment with CP4I. For this session, your proctor will provide a pre-installed environment with admin access (more details below). If you want to create your own environment, you can request one on IBM TechZone.

- You should have an Event Streams instance pre-created in your CP4I on ROKS environment.

- You need the IBM App Connect Enterprise Toolkit installed on your desktop. To install it, follow the sections Download IBM App Connect Enterprise for Developers and Install IBM App Connect Enterprise from this tutorial.

Lab Environment

For this session you will use a ROKS 4.10 environment with Cloud Pak for Integration 2022.2. For this section, your lab proctors pre-installed this cluster for you with a pre-configured IBM Event Streams instance.

0 - Preparation

In this section you will do some initial steps to prepare your environment for the lab.

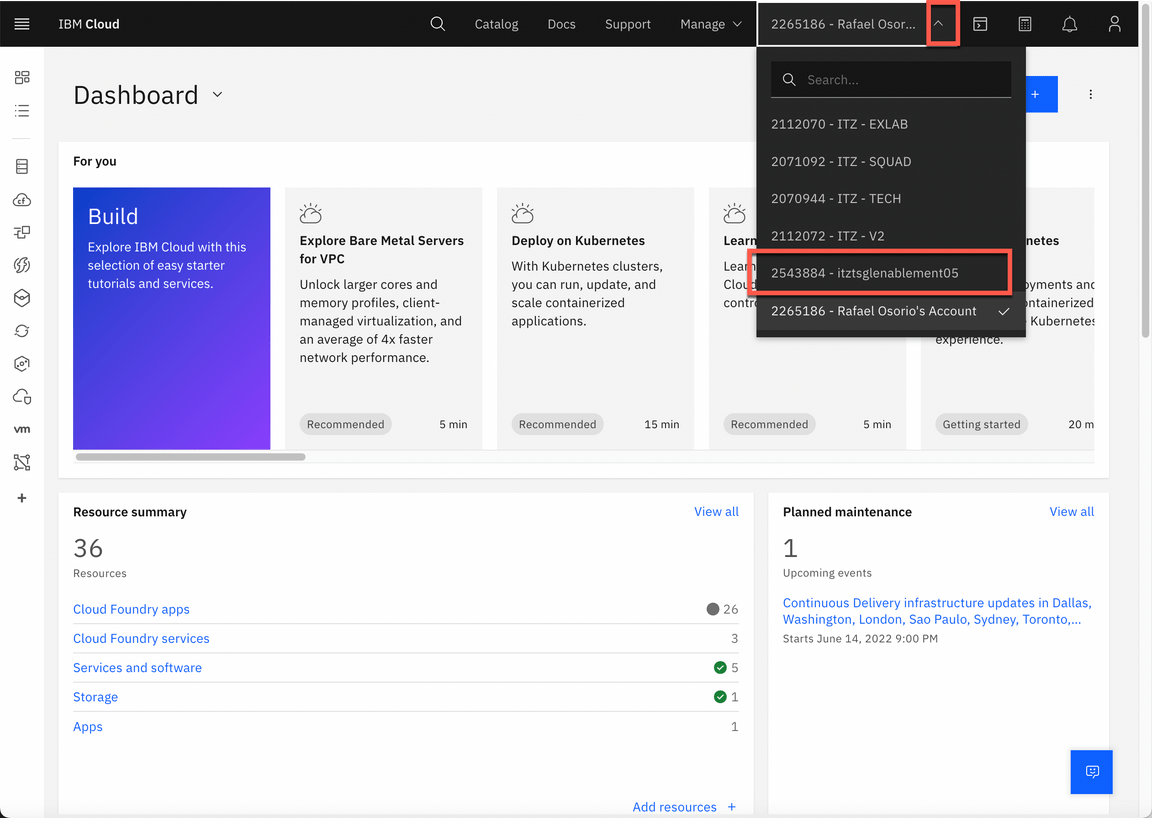

The next steps assume that you have already accepted the account invitation in IBM Cloud (account: 2543884 - itztsglenablement05). This is the same account used in the GitOps lab. If you haven't accepted it yet, complete the GitOps lab preparation section first.

Log in to IBM Cloud.

In IBM Cloud dashboard, change your IBM Cloud account to 2543884 - itztsglenablement05.

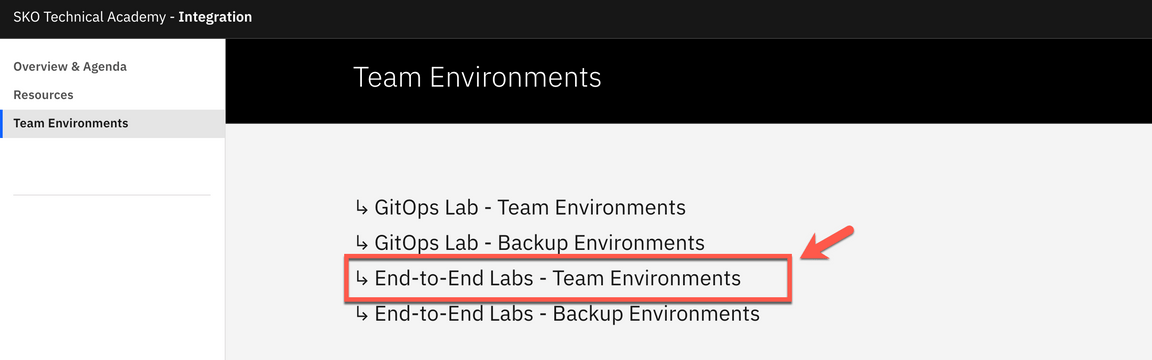

Now, click here to open the Team Environments page.

Click to go to the End-to-End Labs - Team Environments.

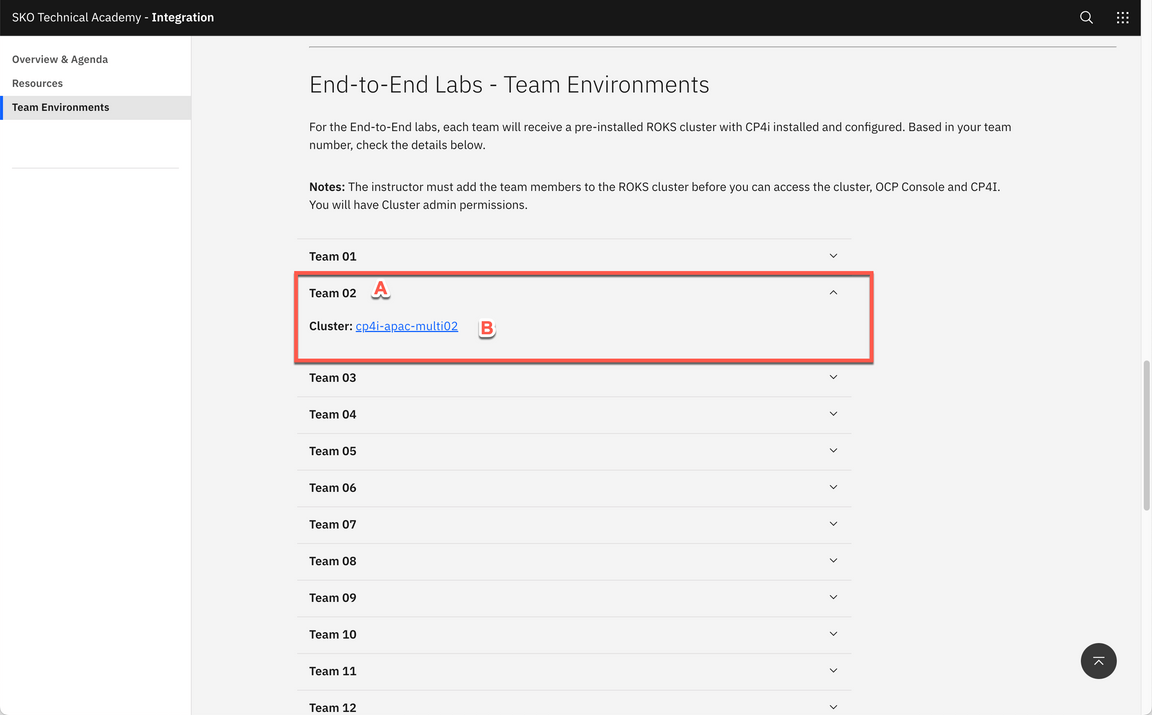

- Open your team’s section (A); check your team number with the lab proctors. Then open your team’s cluster page (B).

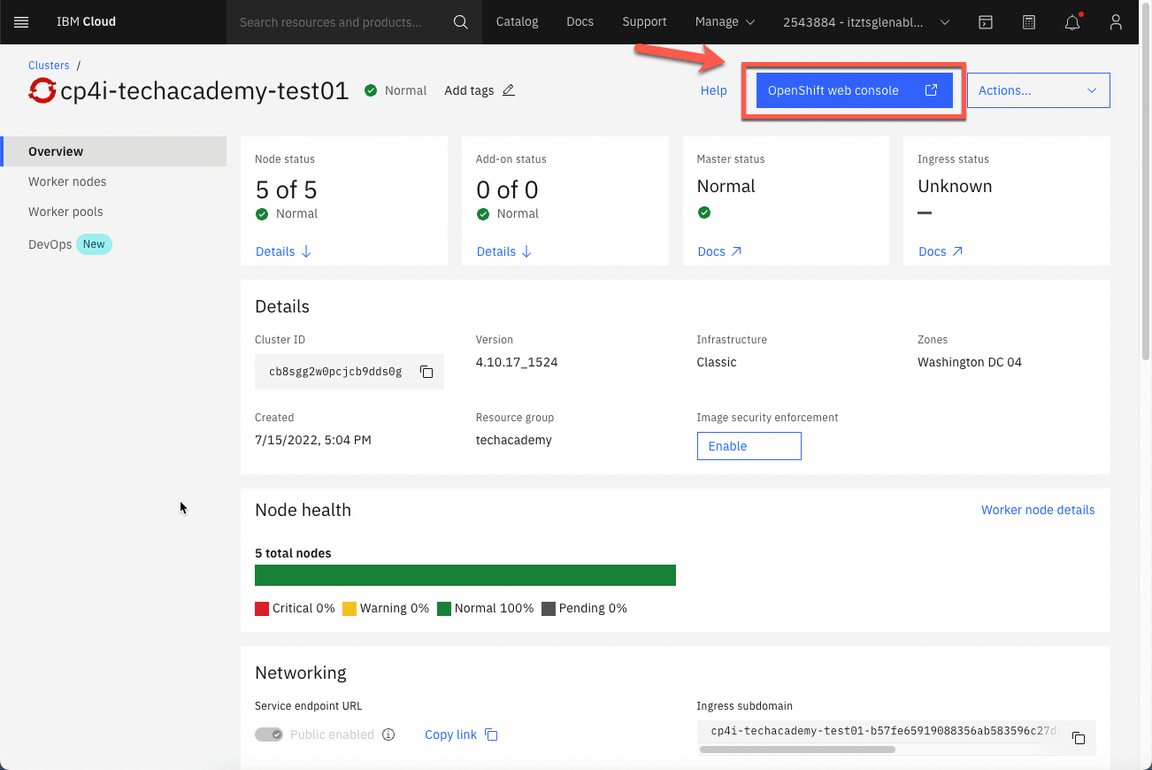

- On your cluster’s page, click OpenShift web console.

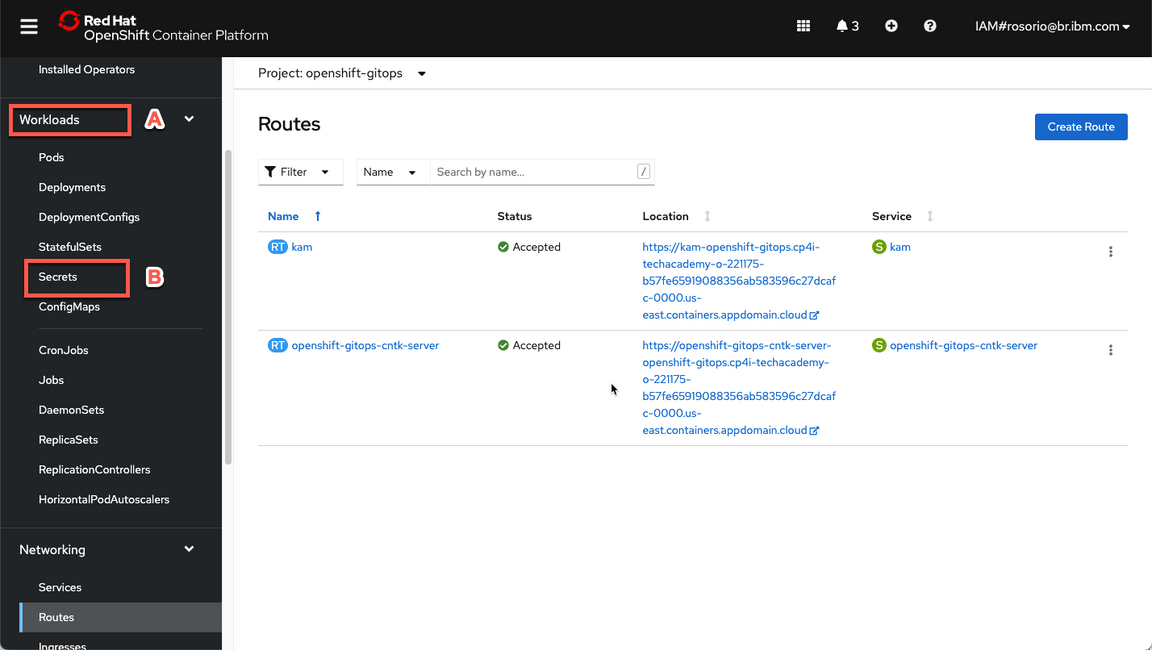

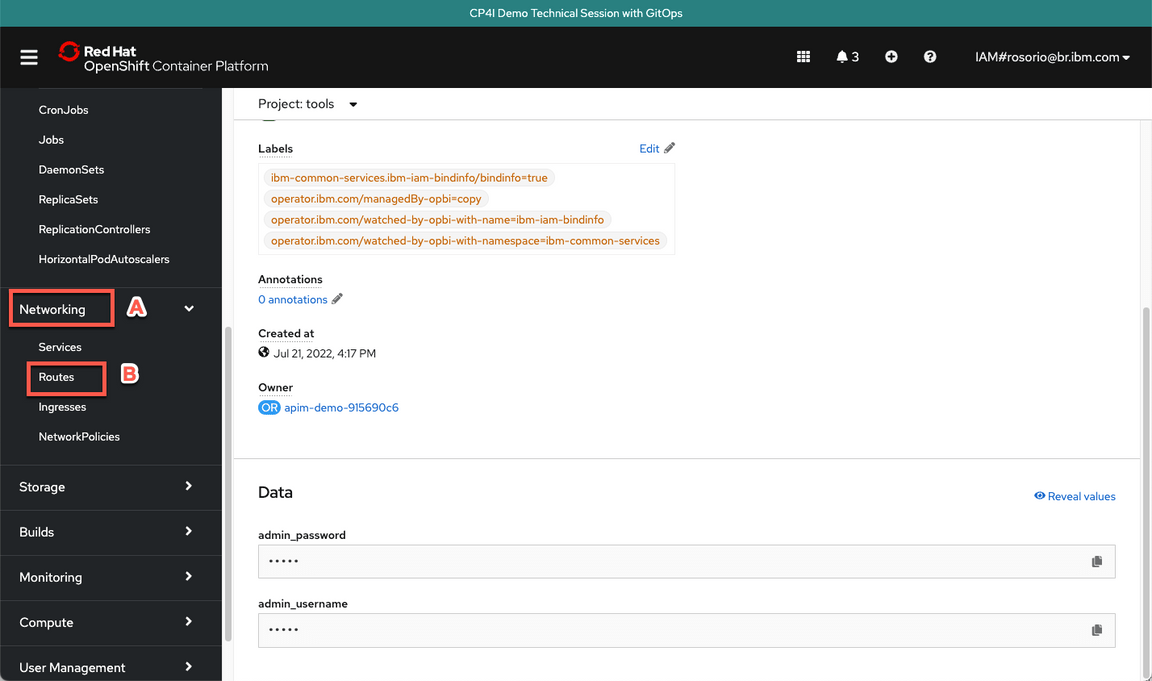

- Great, now let’s check your Cloud Pak for Integration environment. First, get the Platform Navigator URL and password. Back in the OpenShift web console, open Workloads (A) and Secrets (B).

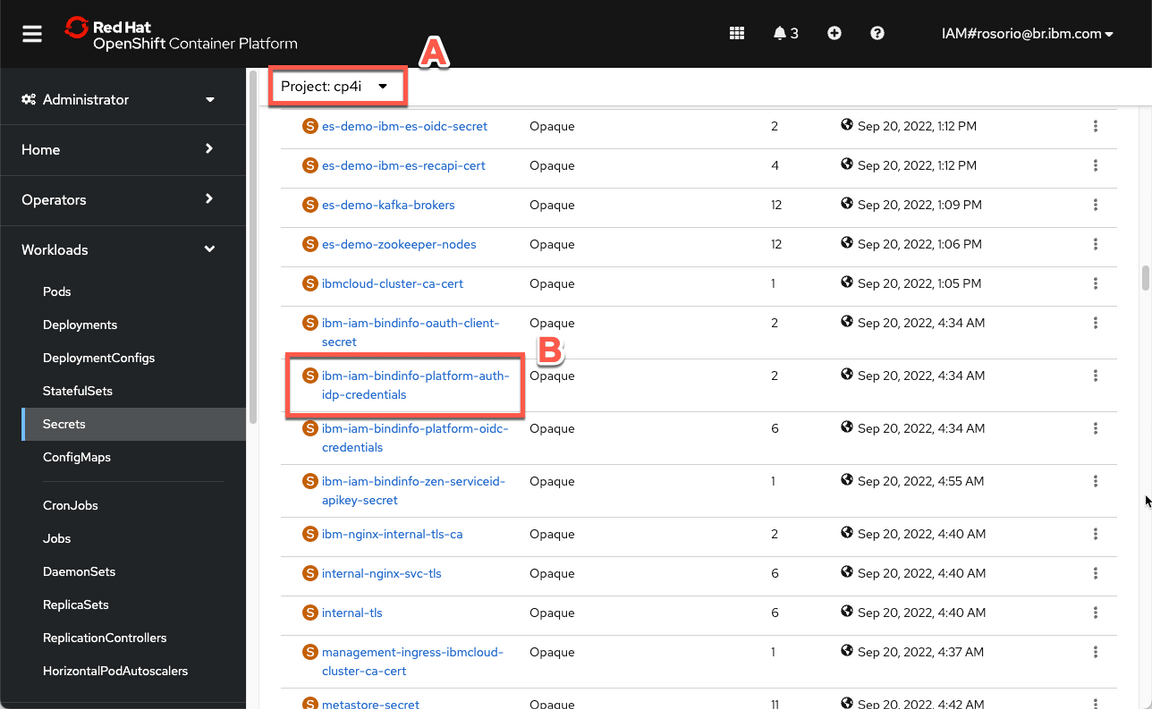

- Now filter by cp4i project (A) and open the ibm-iam-bindinfo-platform-auth-idp-credentials secret (B).

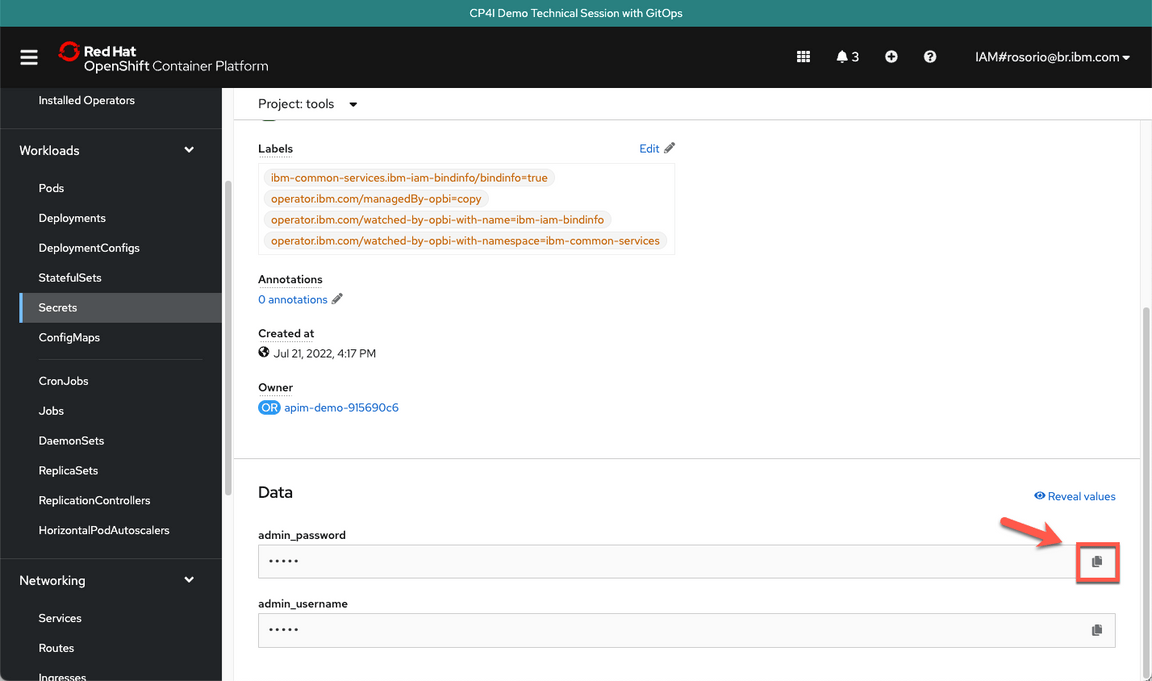

- Scroll down and click the copy icon next to admin_password. Note this password down; you will use it to log in to the Platform Navigator.

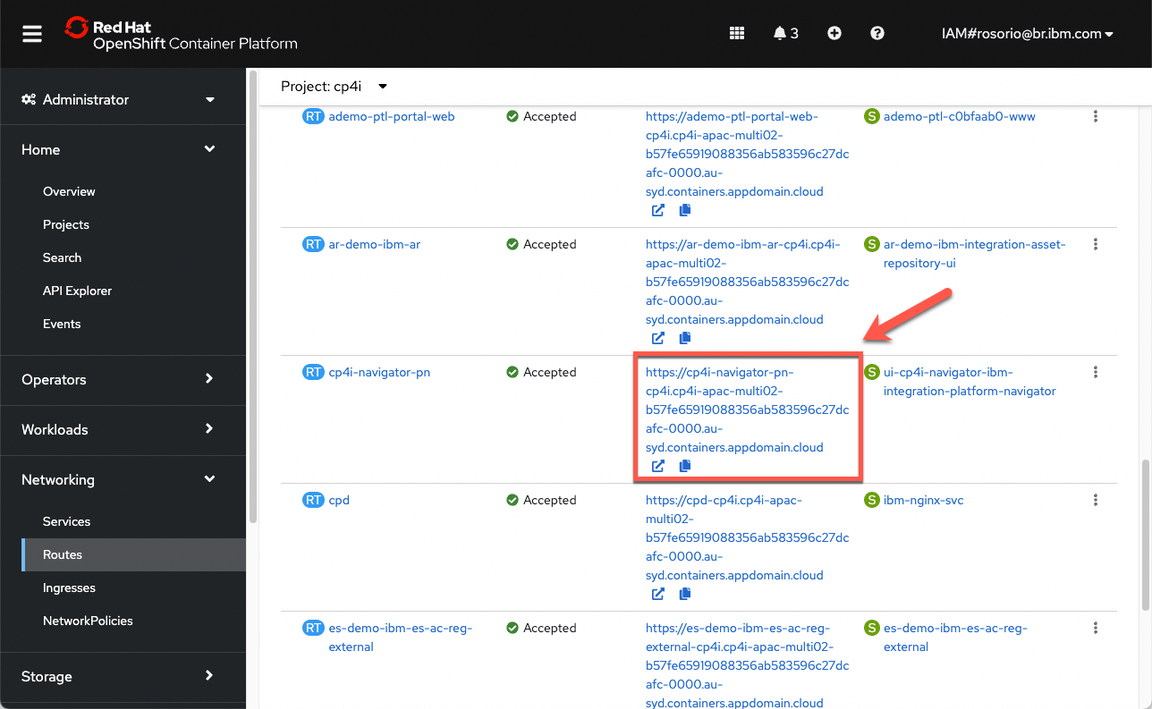

- Now, let’s get the Platform Navigator URL. Open Networking (A) and Routes (B).

- Scroll down and click on the cp4i-navigator-pn location to open the Platform Navigator.

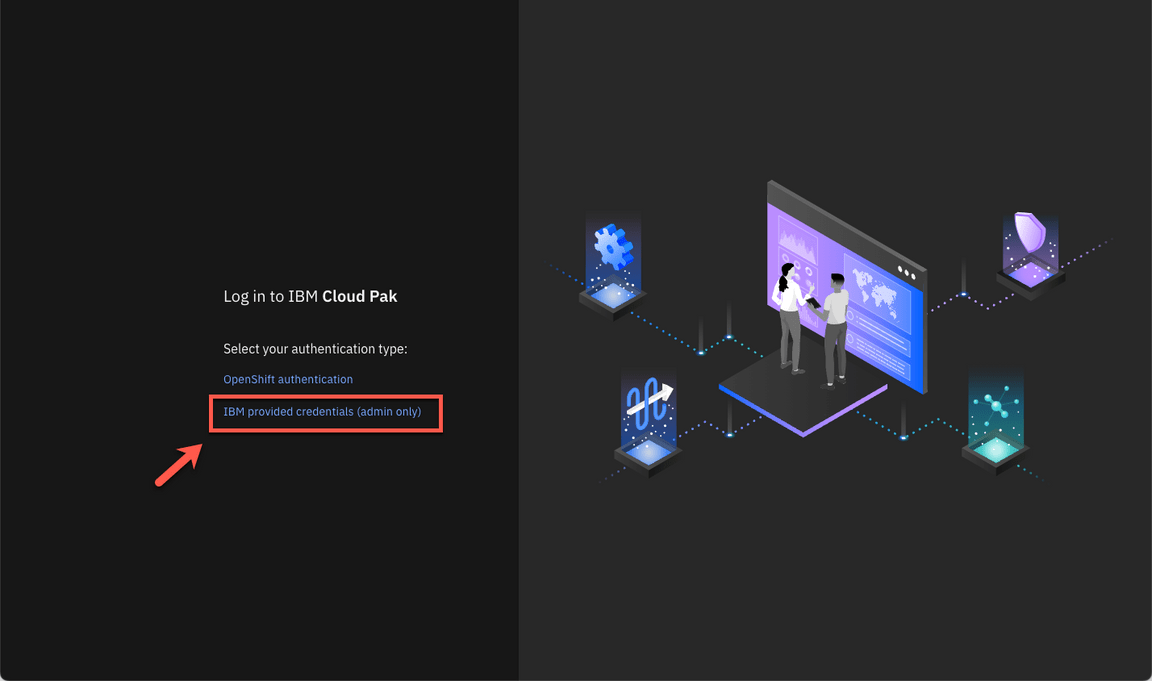

- If your browser warns about the certificate, accept the risk and continue. Then click the IBM provided credentials (admin only) link.

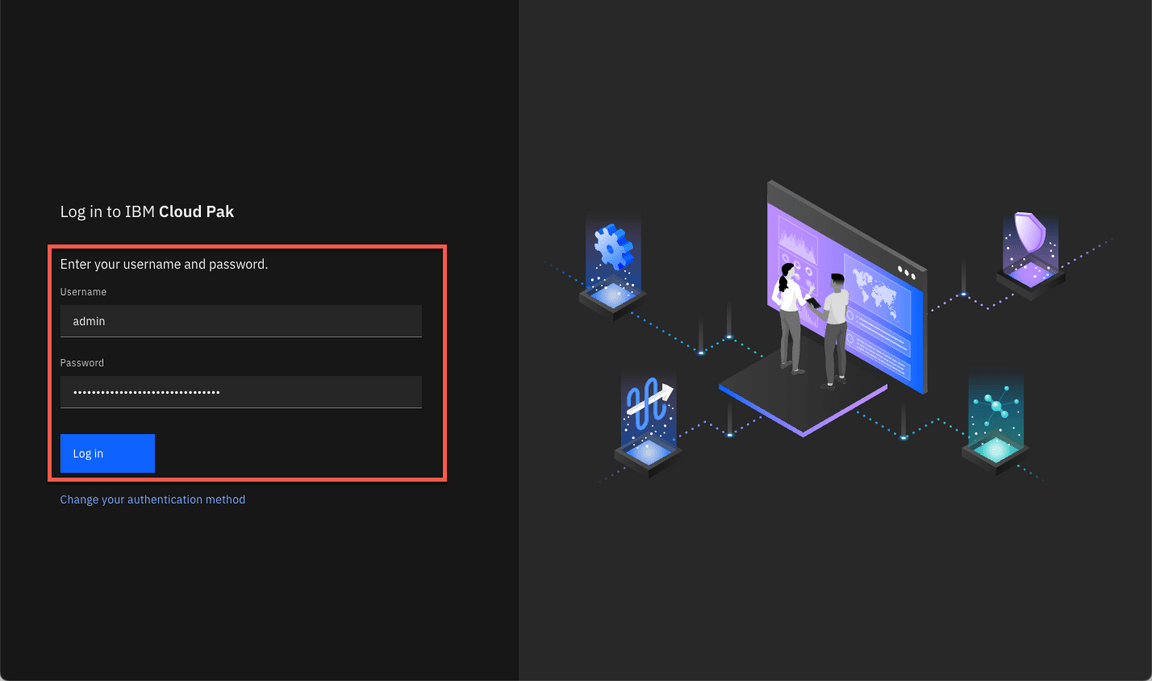

- Log in with the admin user and the password that you copied in the previous step.

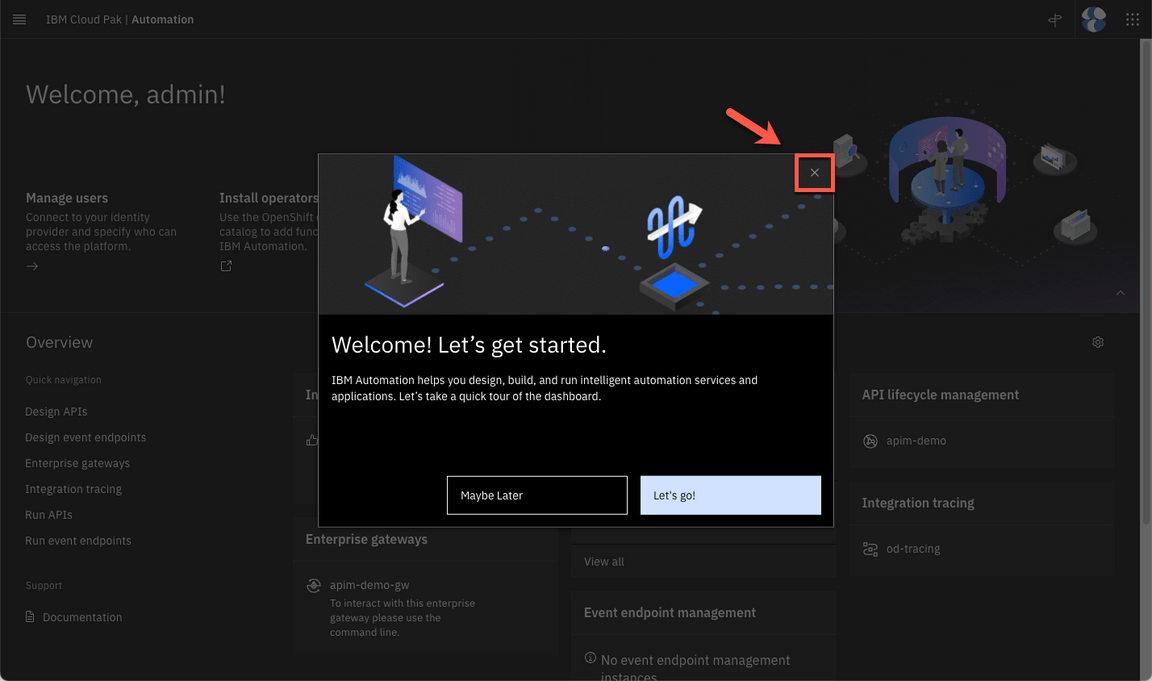

- Close the Welcome dialog. Great, now you are ready for the lab. Enjoy it!

1 - Create a Topic in Event Streams

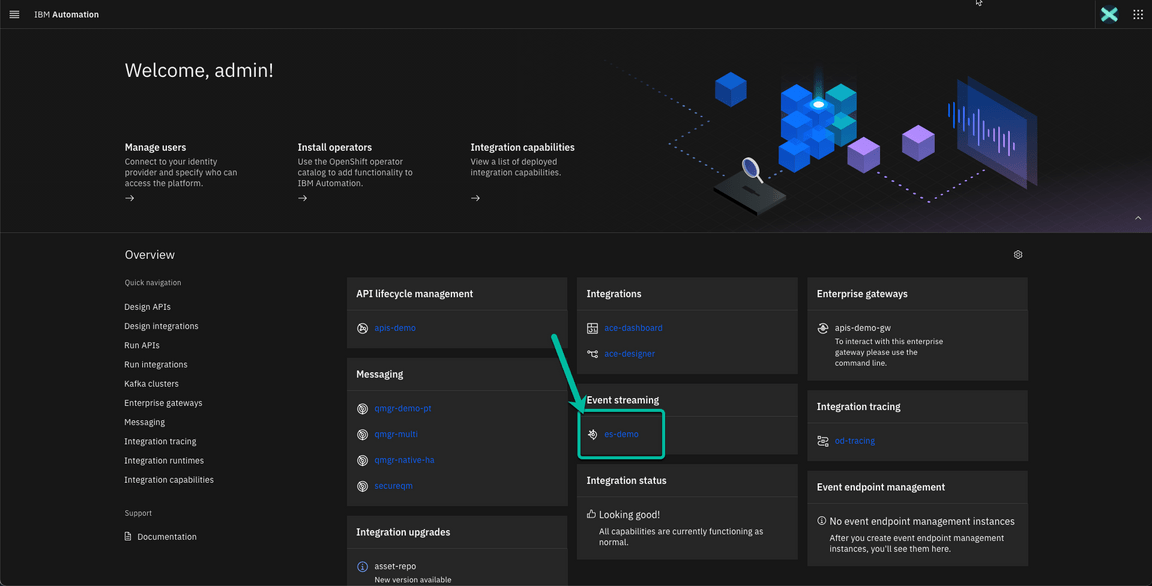

- Navigate to the Event Streams instance from the CP4I Platform Navigator. Click on es-demo.

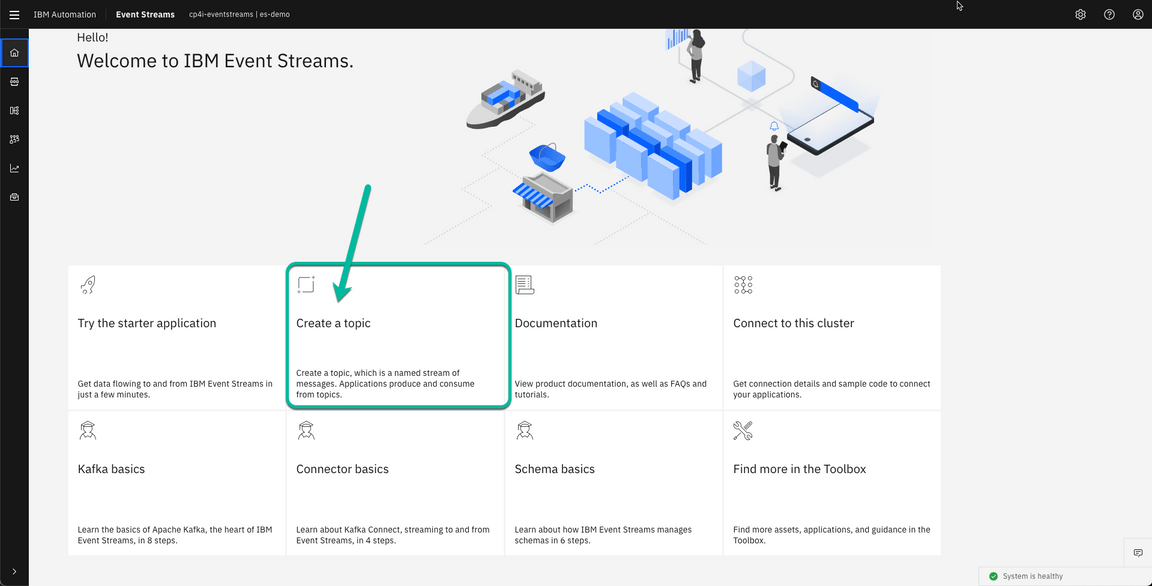

- From the Event Streams Home page click on the Create a topic tile.

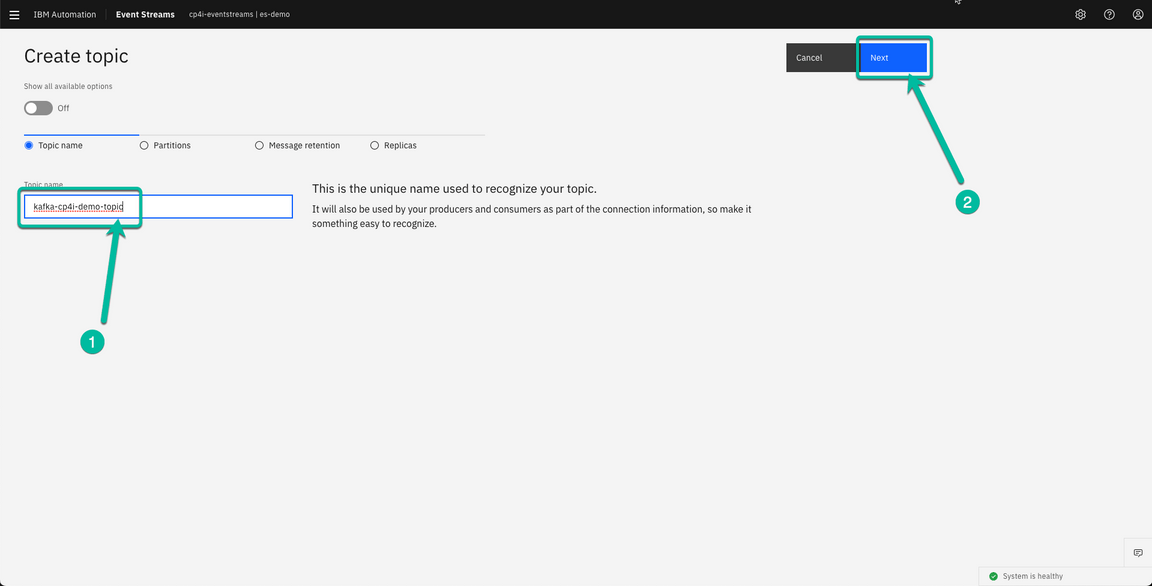

- The wizard guides you through the process. On the first screen, type the topic name. In my case I used kafka-cp4i-demo-topic. You can use any name; you will need it later when configuring your flow in the Toolkit.

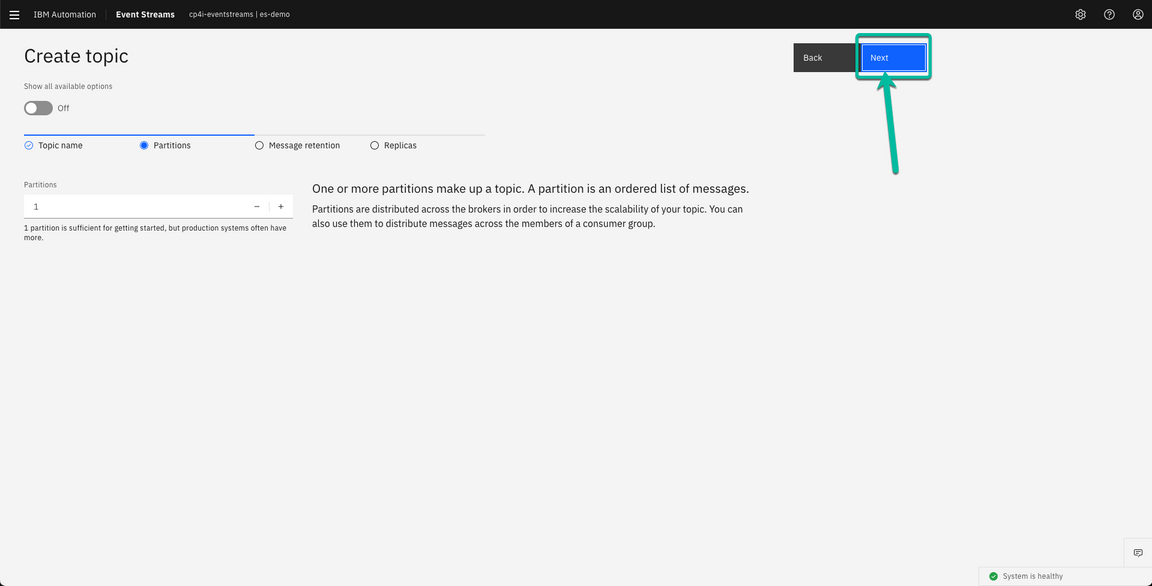

- You can review the different options, but for simplicity I’m accepting the defaults, so you can simply click Next.

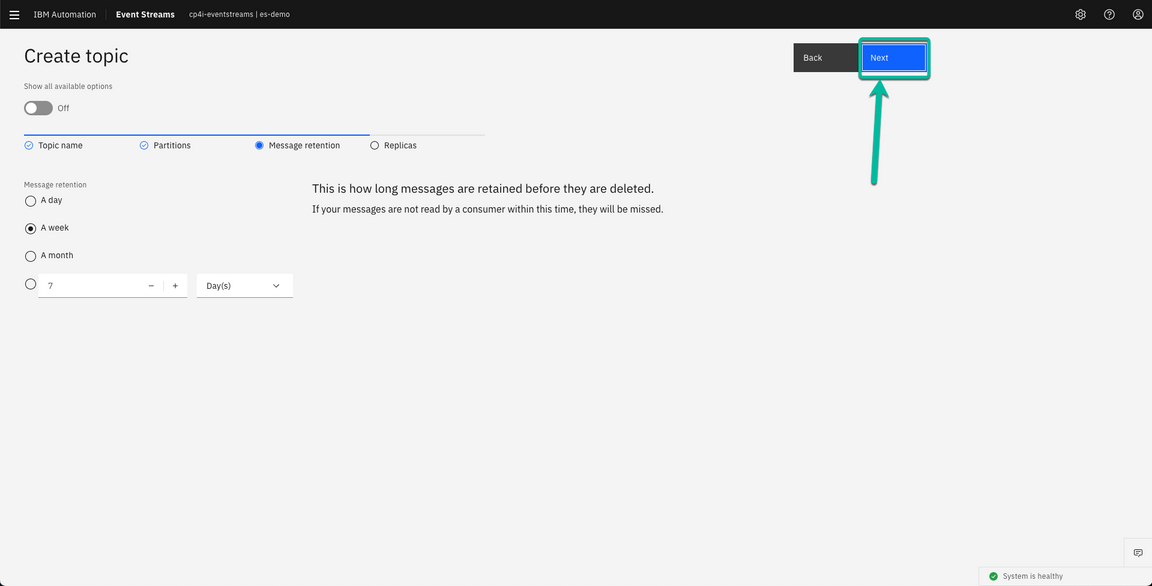

- You can change the retention period, but I’m accepting the default by clicking Next without making any change.

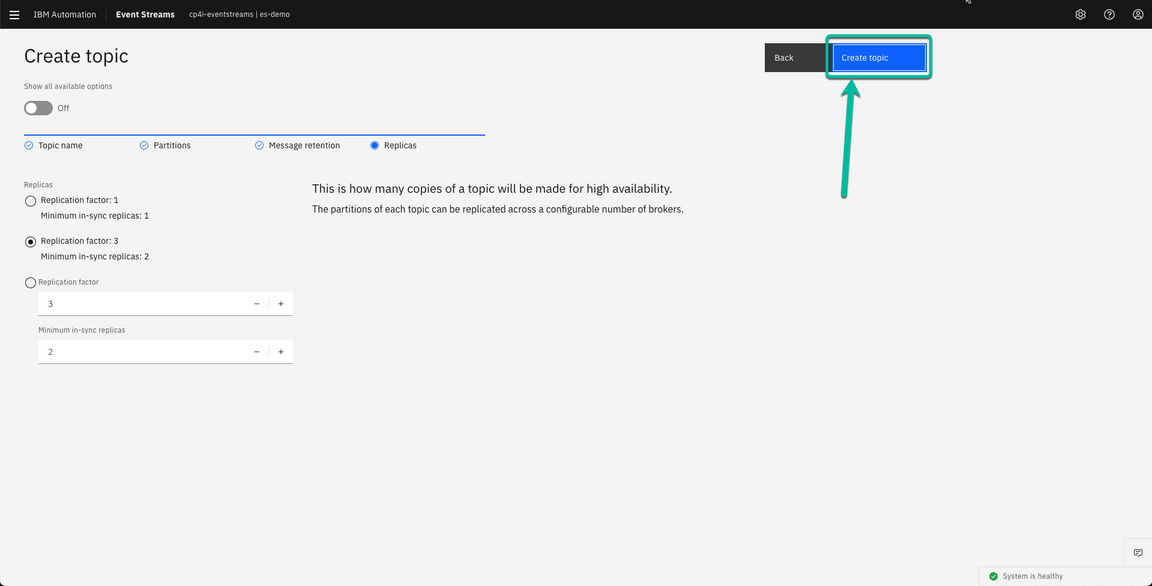

- Click Create Topic to complete the wizard.

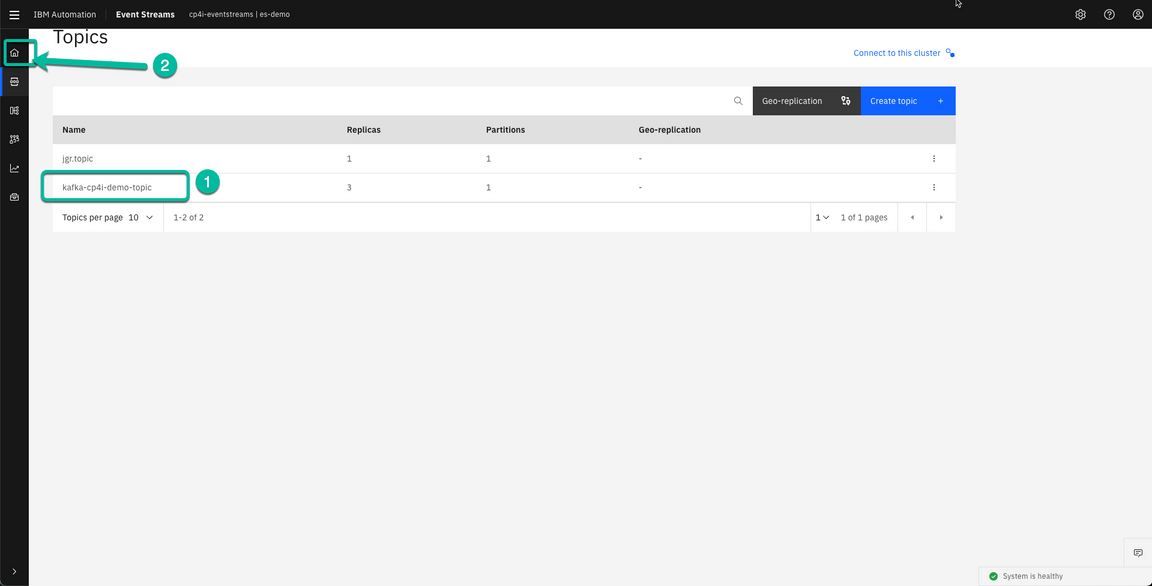

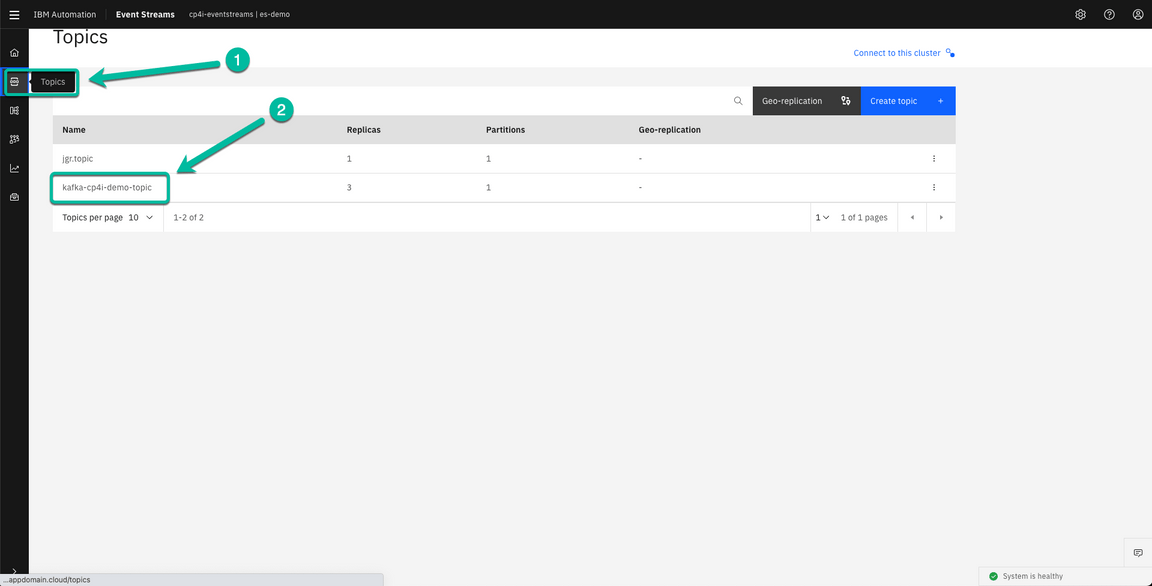

- The new topic is displayed on the Topics page. Confirm that the topic was created, then return to the home page by clicking the Home icon.

2 - Create SCRAM credentials to connect to Event Streams

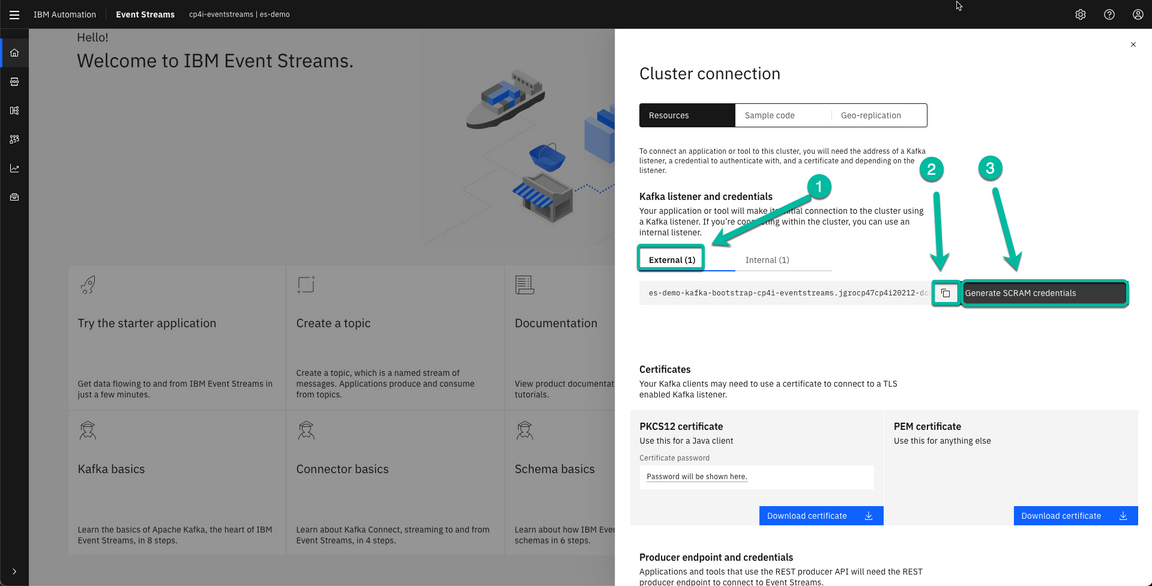

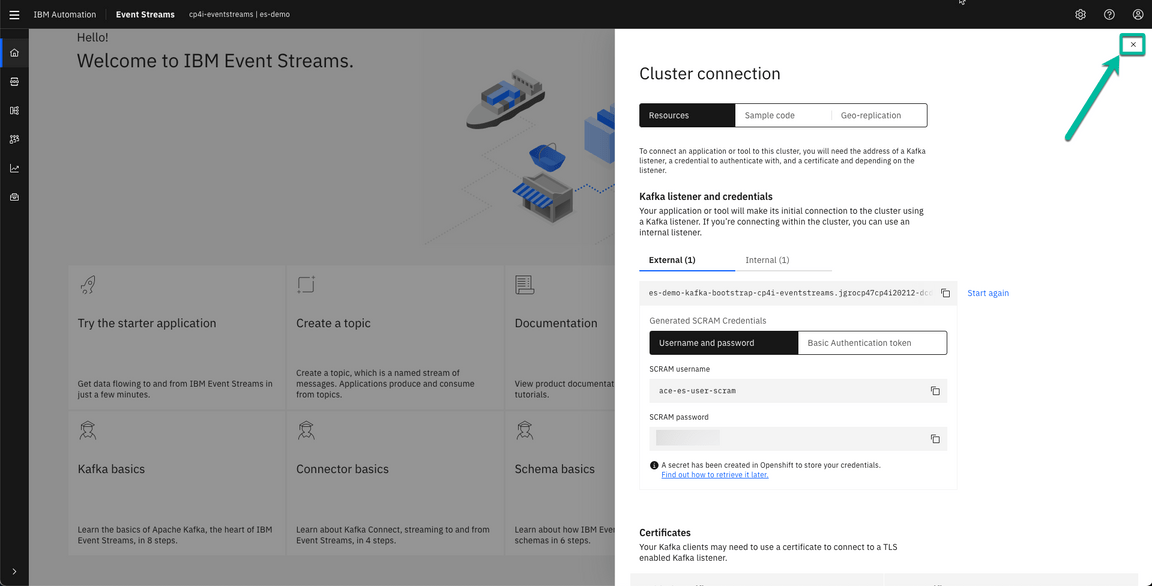

- From the Event Streams Home page click on the Connect to the cluster tile.

- The wizard guides you through the process. First, make sure External is selected. Then copy the bootstrap address and paste it somewhere safe, since you will need it later. Finally, click Generate SCRAM credentials.

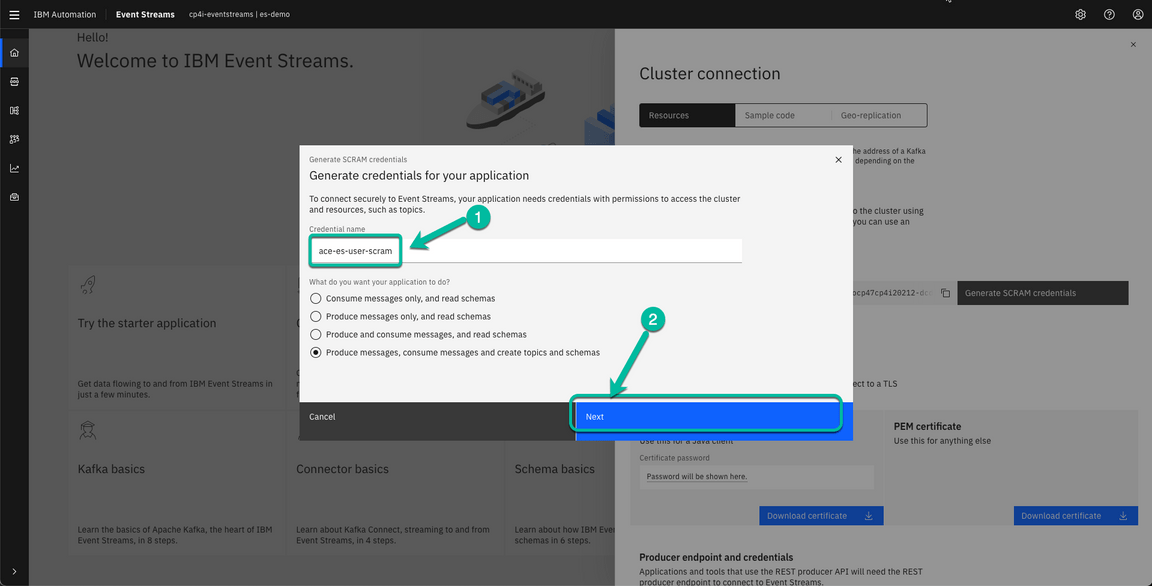

- In the pop up window type the name of the user you want to create. In my case I have used ace-es-user-scram. Then click Next.

- I will grant full access to the new user, but you can control the level of access as needed. Once you have adjusted the values, click Next.

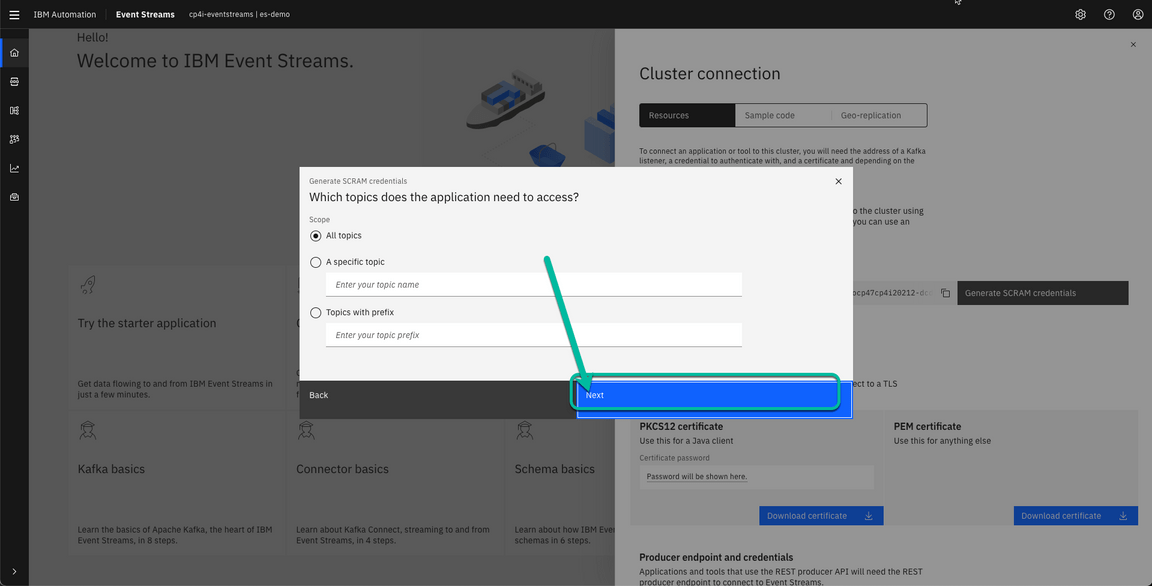

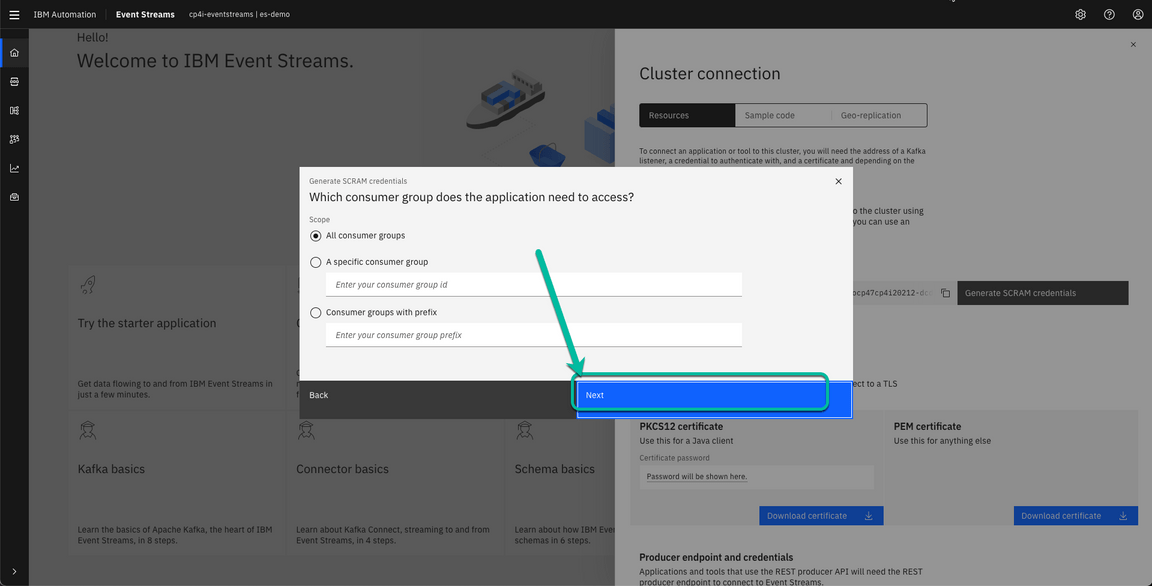

- Adjust the values as needed and click Next.

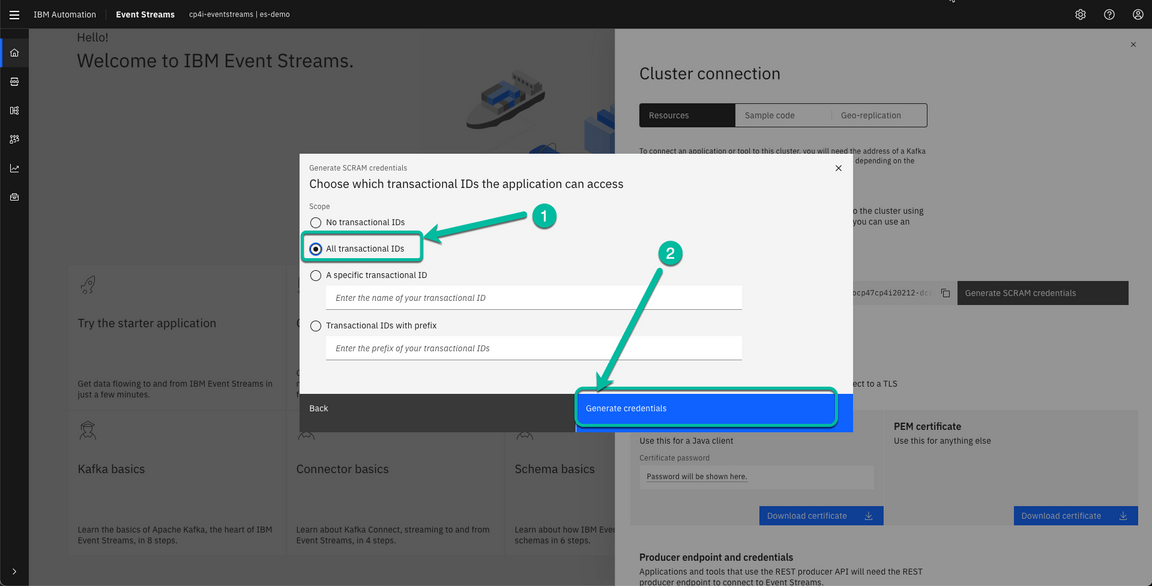

- Select All transactional IDs or the value you prefer, and then click Generate credentials.

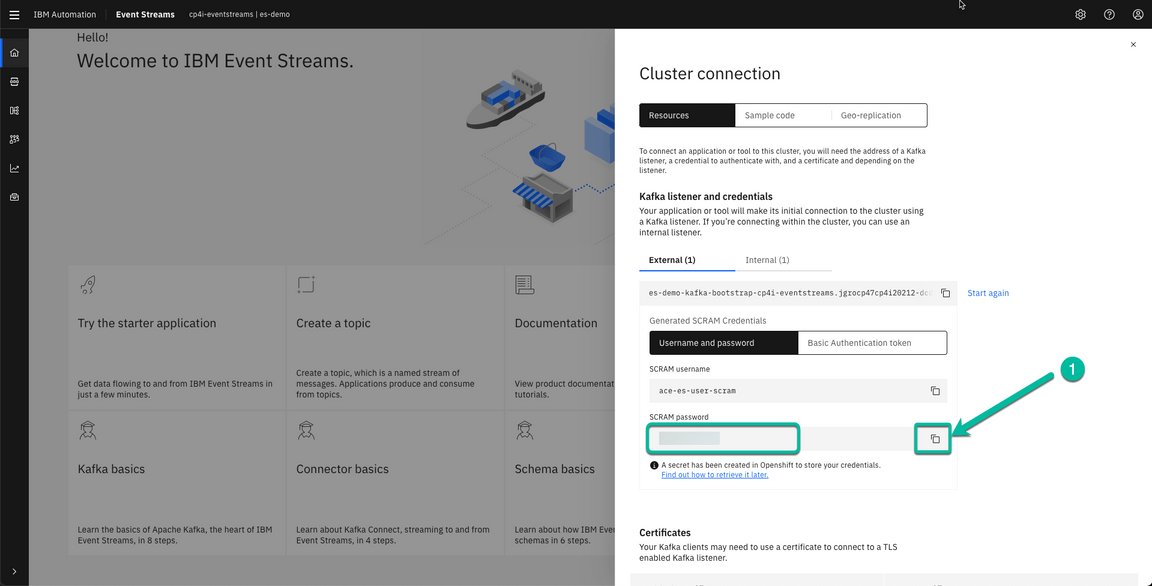

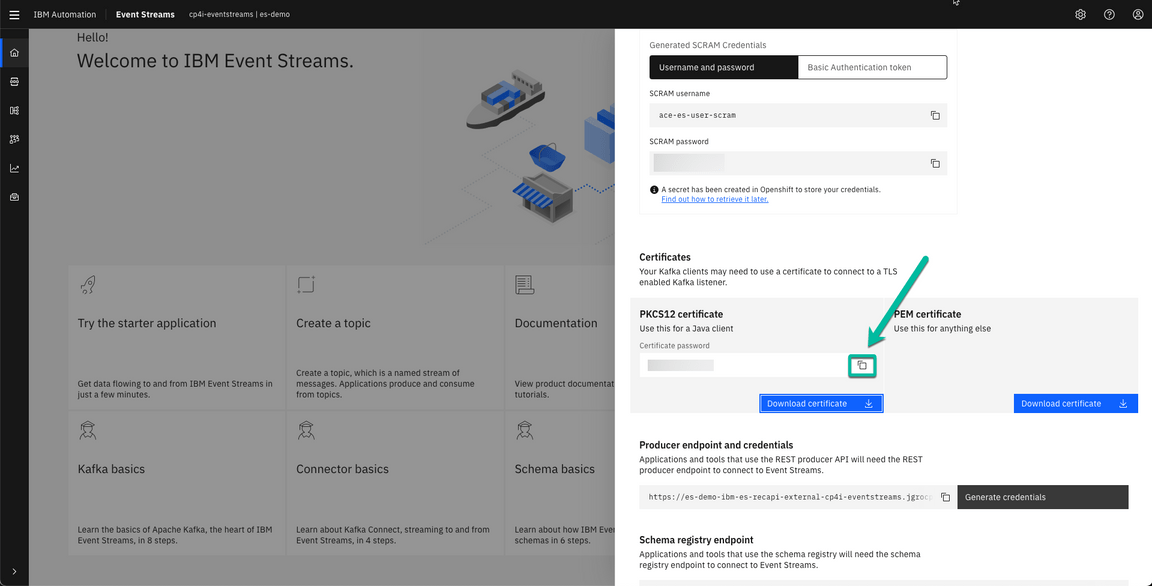

- After a moment the credentials are generated and presented to you. Make sure to copy the password and paste it into a notepad since we will use it later.

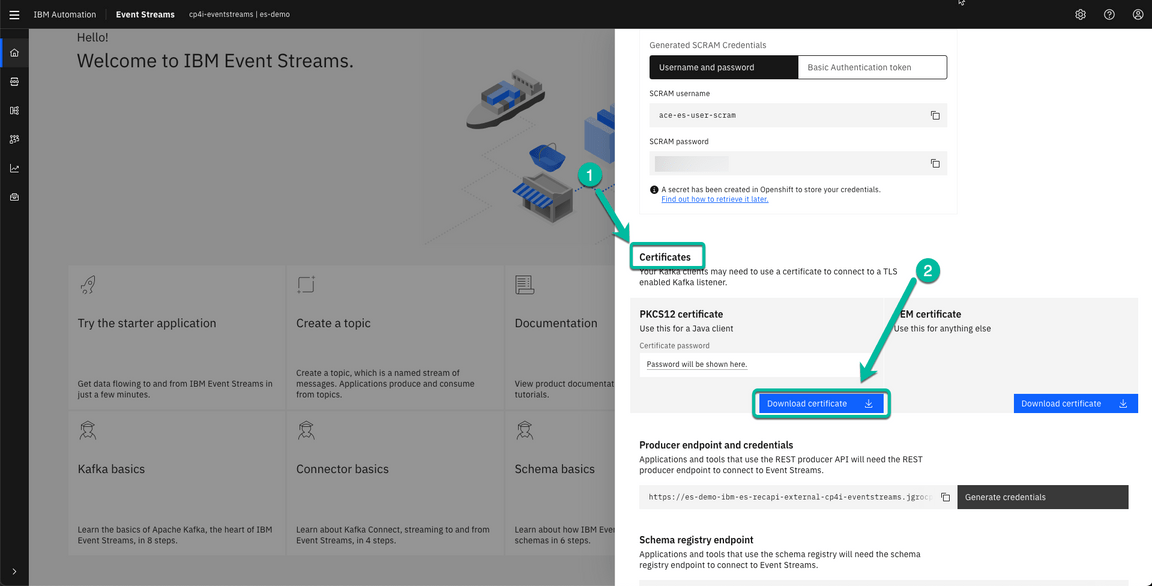

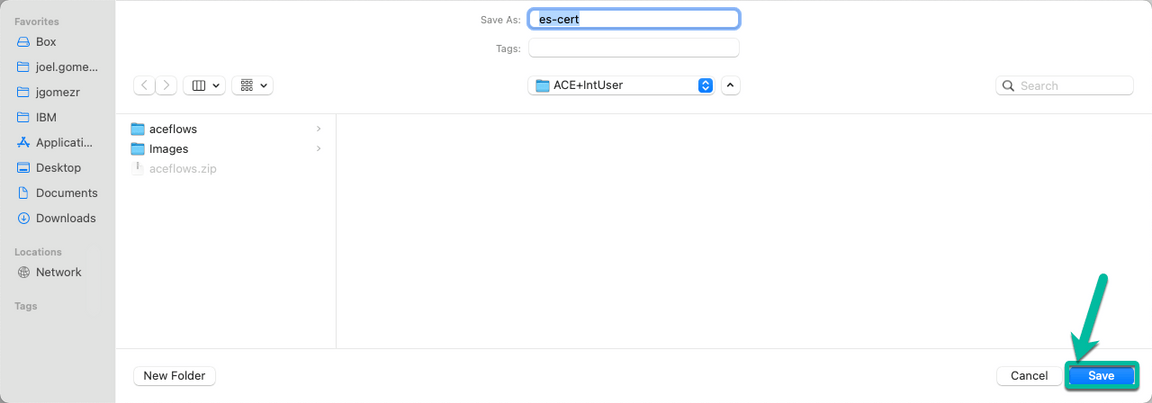

- Scroll down to the Certificates section and click Download certificate.

- Navigate to the folder where you want to save the certificate and click Save.

- Once the certificate is saved, the corresponding password is displayed. Click the Copy icon and paste the password into a notepad for later use.

- Scroll up and close the wizard.

- Before moving to ACE, convert the certificate from PKCS12 to JKS format. Open a terminal, navigate to the folder where you saved the certificate, and run the following command. Replace cert-password with the certificate password you just copied.

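```shell
# Replace both <cert-password> placeholders with the certificate password; the quotes protect special characters.
keytool -importkeystore -srckeystore es-cert.p12 -srcstoretype PKCS12 -destkeystore es-cert.jks -deststoretype JKS -srcstorepass '<cert-password>' -deststorepass '<cert-password>' -srcalias ca.crt -destalias ca.crt -noprompt
```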
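You may prefer to script this conversion. The following Python sketch builds the same keytool arguments. It then lists the new truststore so you can check that the ca.crt entry was copied. It assumes keytool (shipped with a Java runtime) is on your PATH. The function names are hypothetical helpers, not part of any IBM tooling. Passing the arguments as a list instead of a shell string keeps a shell from interpreting special characters in the password.

```python
import subprocess
from pathlib import Path


def keytool_convert_args(p12: Path, jks: Path, password: str) -> list[str]:
    """Arguments that copy the ca.crt entry from the PKCS12 file into a JKS truststore."""
    return [
        "keytool", "-importkeystore",
        "-srckeystore", str(p12), "-srcstoretype", "PKCS12",
        "-destkeystore", str(jks), "-deststoretype", "JKS",
        # Same password for source and destination, as in the lab's command.
        "-srcstorepass", password, "-deststorepass", password,
        "-srcalias", "ca.crt", "-destalias", "ca.crt",
        "-noprompt",
    ]


def keytool_list_args(jks: Path, password: str) -> list[str]:
    """Arguments that list the entries of the new JKS truststore."""
    return ["keytool", "-list", "-keystore", str(jks), "-storetype", "JKS", "-storepass", password]


def convert_and_verify(p12: Path, jks: Path, password: str) -> str:
    """Run the conversion, then return keytool's listing of the new truststore."""
    # No shell is involved, so the password is passed to keytool verbatim.
    subprocess.run(keytool_convert_args(p12, jks, password), check=True)
    listing = subprocess.run(
        keytool_list_args(jks, password), check=True, capture_output=True, text=True
    )
    return listing.stdout
```

For example, `convert_and_verify(Path("es-cert.p12"), Path("es-cert.jks"), "your-cert-password")` should return a listing that includes the ca.crt alias.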

Now we are ready to move to ACE and create the required configuration using the information we have collected.

3 - Create Resources in the ACE Toolkit

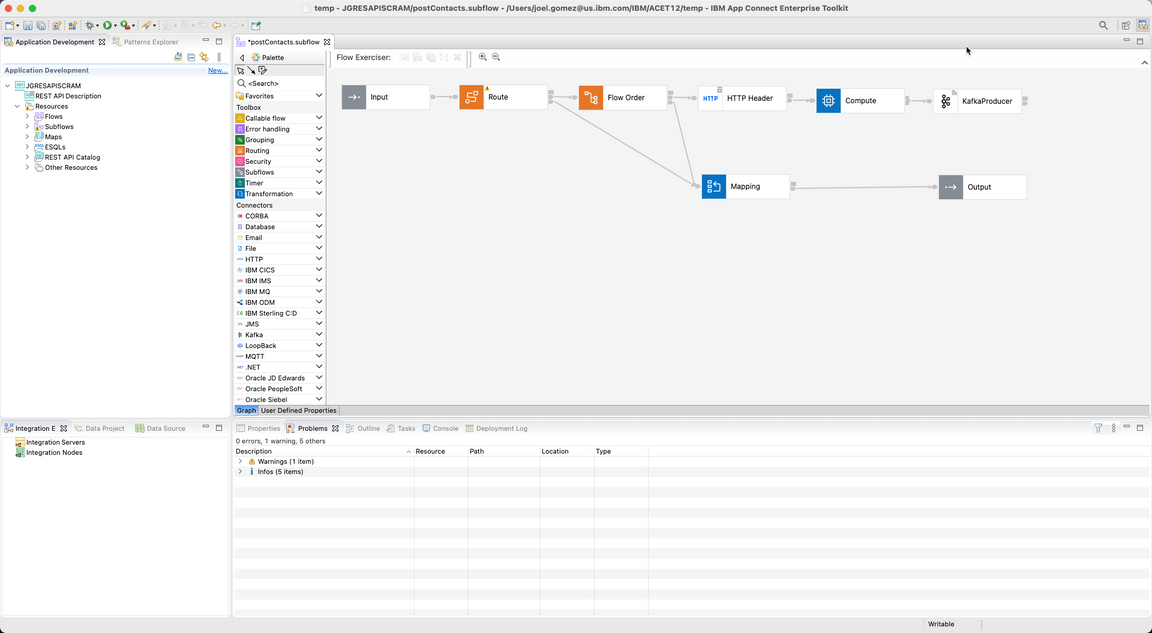

For this lab I have created a simple project that exposes an API to POST information to a Kafka topic. The following picture shows the corresponding flow. Download the Project Interchange here, then import it using the File > Import > Project Interchange option.

First we will create a Policy Project to store the Event Streams configuration.

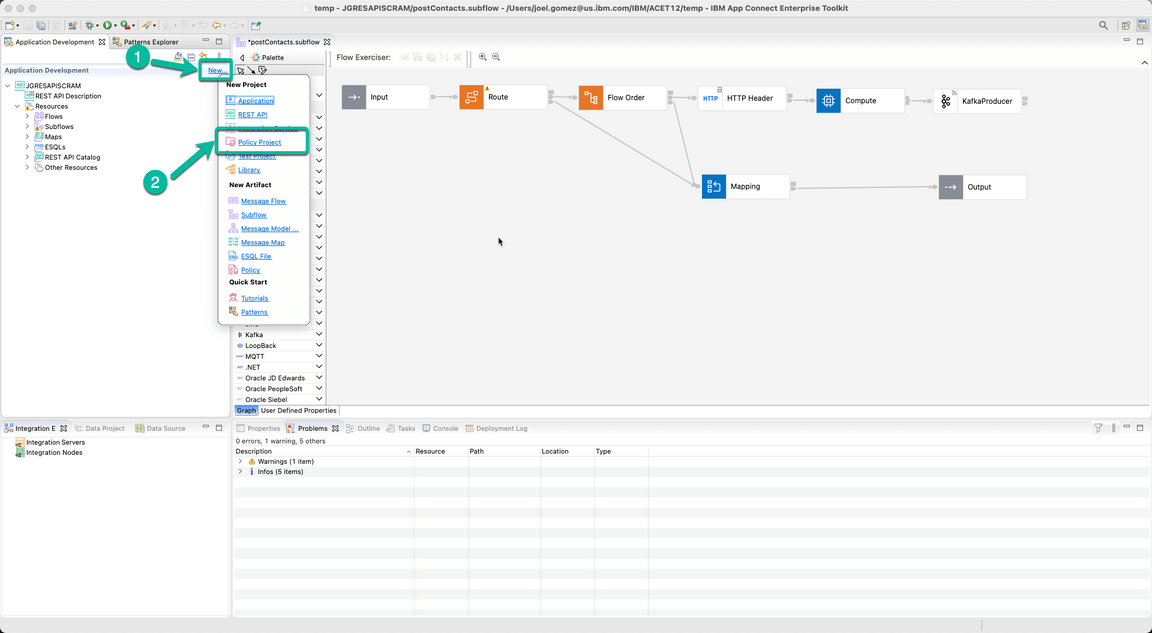

- From the Application Development pane, click New and select Policy Project.

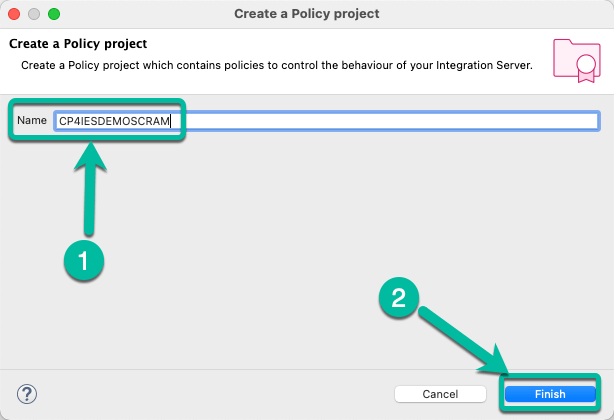

- In the pop-up window, enter the project name, e.g. CP4IESDEMOSCRAM, and click Finish.

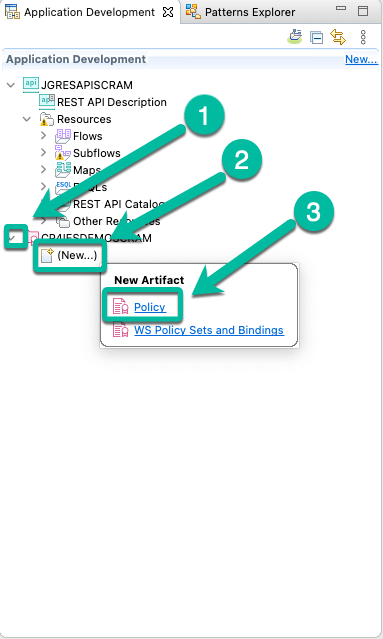

- The project appears in the Application Development pane. Expand the project and click (New…). Then select Policy from the New Artifact menu.

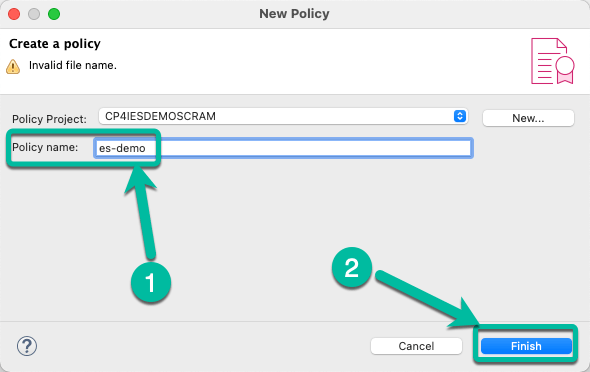

- In the pop-up window, enter the policy name, e.g. es-demo, and click Finish.

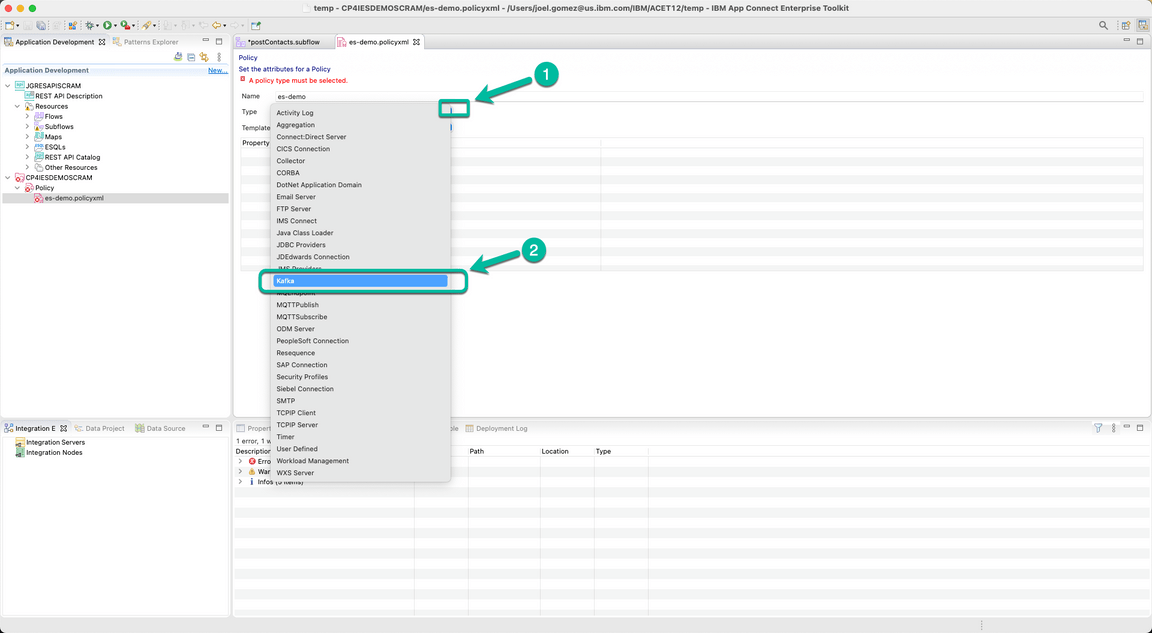

- The Policy Editor opens so you can enter the data. Click the Type drop-down box and select Kafka.

The default values from the Kafka Template are used to pre-populate the policy.

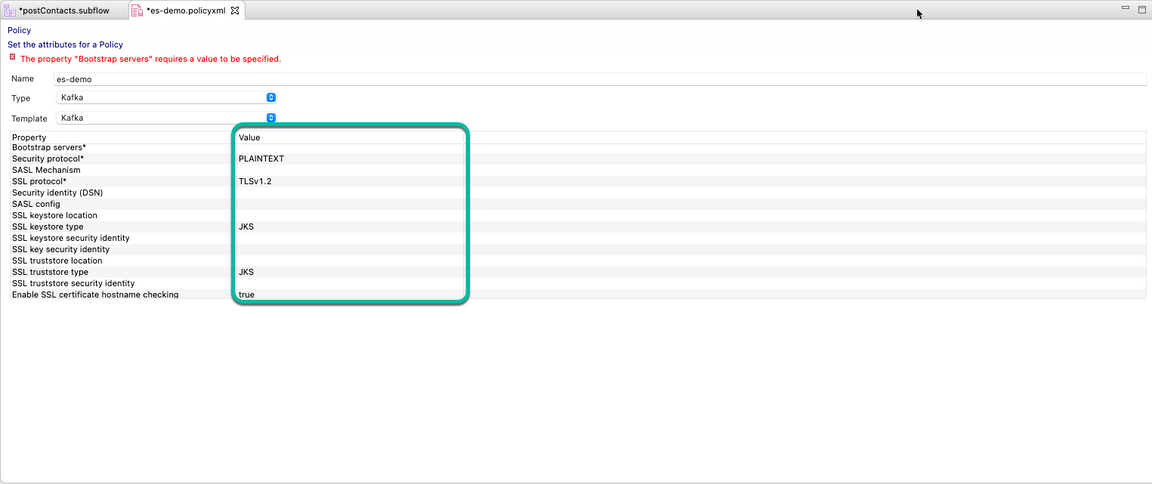

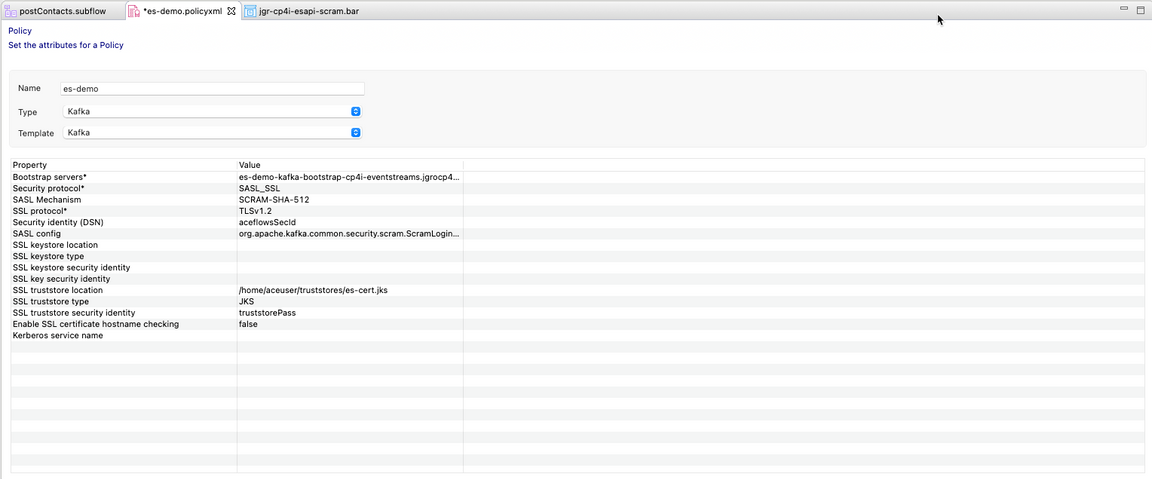

- Update the policy using the following values:

| Property | Value |
|---|---|
| Bootstrap servers | <Bootstrap address copied in the previous section> |
| Security protocol | SASL_SSL |
| SASL Mechanism | SCRAM-SHA-512 |
| SSL protocol | TLSv1.2 |
| Security identity (DSN) | Any value, e.g. aceflowsSecId |
| SASL config | org.apache.kafka.common.security.scram.ScramLoginModule required; |
| SSL truststore location | /home/aceuser/truststores/es-cert.jks |
| SSL truststore type | JKS |
| SSL truststore security identity | truststorePass |
| Enable SSL certificate hostname checking | false |
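When a connection fails, it can help to try the same settings outside ACE. The following Python sketch renders the policy values, plus the SCRAM and truststore passwords, as a client.properties file. It assumes the policy fields correspond to the standard Apache Kafka client properties named in the code. The property names are Apache Kafka's. The mapping, the KafkaPolicy class, and the helper functions are illustrative assumptions, not ACE internals.

```python
from dataclasses import dataclass


@dataclass
class KafkaPolicy:
    """Values from the ACE Kafka policy table (field names are illustrative)."""
    bootstrap_servers: str
    security_protocol: str = "SASL_SSL"
    sasl_mechanism: str = "SCRAM-SHA-512"
    ssl_protocol: str = "TLSv1.2"
    sasl_config: str = "org.apache.kafka.common.security.scram.ScramLoginModule required;"
    truststore_location: str = "/home/aceuser/truststores/es-cert.jks"
    truststore_type: str = "JKS"
    hostname_checking: bool = False


def to_client_properties(policy: KafkaPolicy, user: str, password: str,
                         truststore_password: str) -> dict[str, str]:
    """Map the policy plus credentials onto Apache Kafka client property names (assumed mapping)."""
    # The policy's SASL config carries no credentials; insert them before the closing semicolon.
    # Assumes the user name and password contain no double quotes.
    jaas = policy.sasl_config.strip().rstrip(";")
    jaas += f' username="{user}" password="{password}";'
    return {
        "bootstrap.servers": policy.bootstrap_servers,
        "security.protocol": policy.security_protocol,
        "sasl.mechanism": policy.sasl_mechanism,
        "sasl.jaas.config": jaas,
        "ssl.protocol": policy.ssl_protocol,
        "ssl.truststore.location": policy.truststore_location,
        "ssl.truststore.type": policy.truststore_type,
        "ssl.truststore.password": truststore_password,
        # An empty value disables hostname verification in the Kafka client.
        "ssl.endpoint.identification.algorithm": "https" if policy.hostname_checking else "",
    }


def render_properties(props: dict[str, str]) -> str:
    """Render key=value lines (assumes no backslashes, which .properties files treat as escapes)."""
    return "".join(f"{key}={value}\n" for key, value in props.items())
```

You could then pass the generated file to Kafka's console producer through its --producer.config option. When running on your workstation, set truststore_location to the local path of es-cert.jks rather than the container path.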

The policy should look similar to this:

Don’t forget to Save your changes.

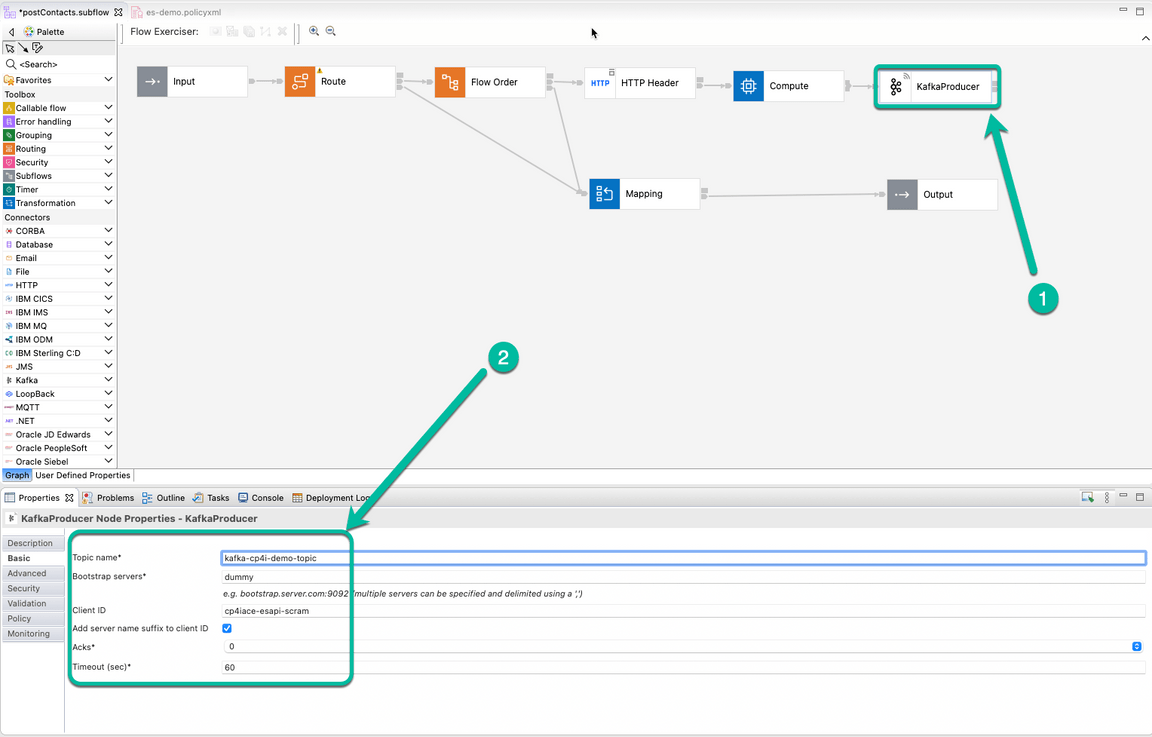

Go back to the Flow Editor to update the flow. Select the KafkaProducer node and update the properties on the Basic tab, using the following information as a reference:

| Property | Value |
|---|---|
| Topic name | <Value used in the first section>, e.g. kafka-cp4i-demo-topic |
| Bootstrap servers | dummy (the real value is set in the policy, but this field is mandatory) |
| Client ID | Any value, e.g. cp4iace-esapi-scram |

The tab should look similar to this:

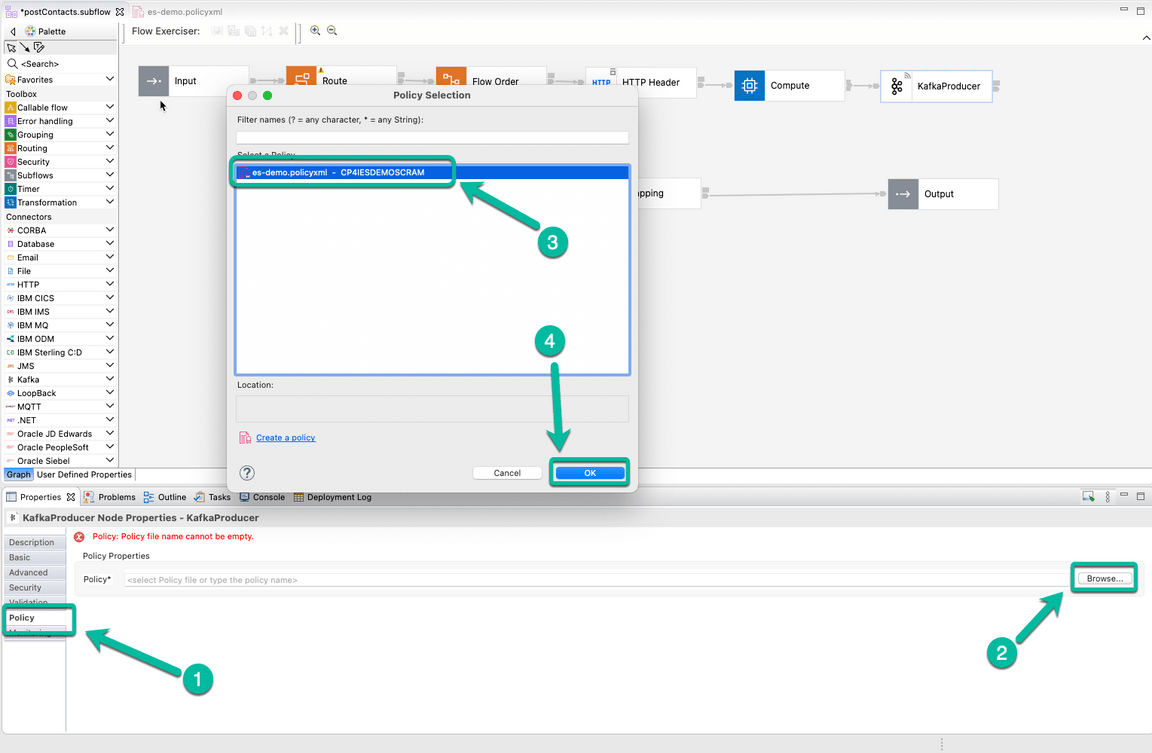

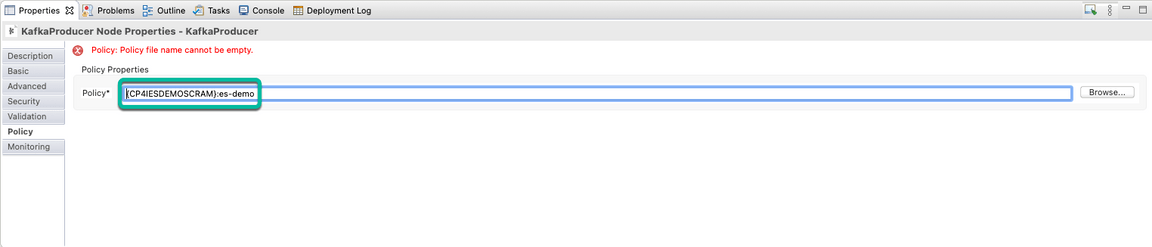

- Select the Policy tab and click Browse. In the pop-up window, select the policy we just created and click OK.

- The policy will be displayed in the Policy field. Save your project to clear the error message.

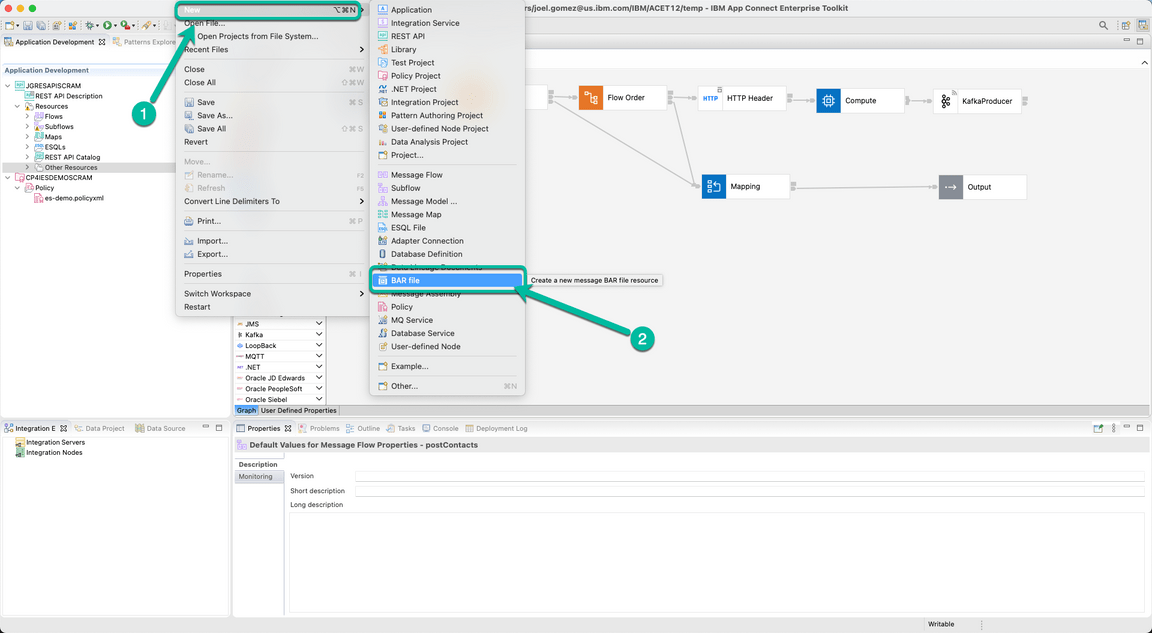

- Now we will create the BAR file to deploy our flow. Open the File menu, select New, and then select BAR file.

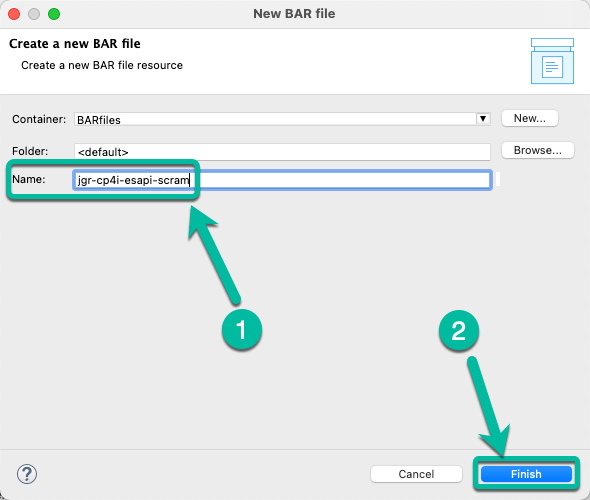

- In the pop-up window, enter the name of the BAR file, e.g. jgr-cp4i-esapi-scram, and click Finish.

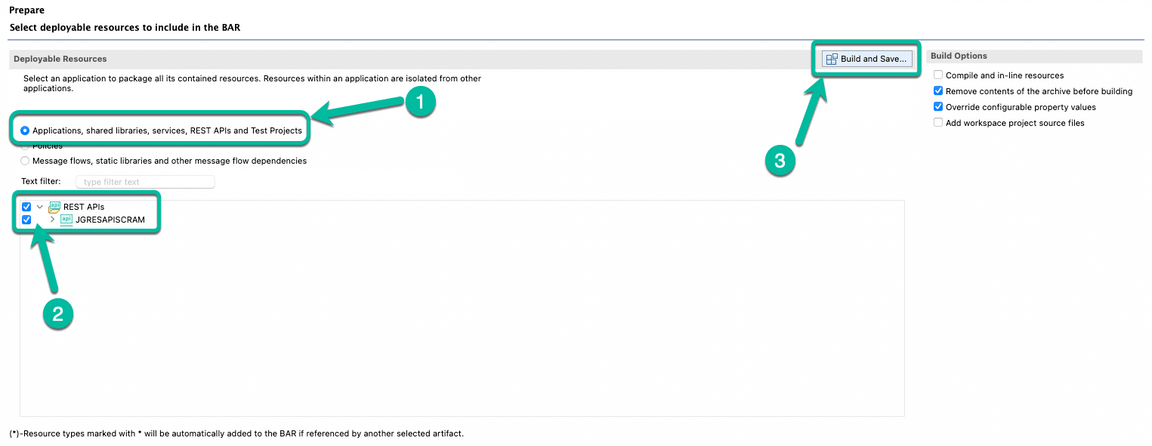

- The BAR Editor opens. Select Applications, shared libraries, services, REST APIs, and Test Projects to display the right type of resources, and then select your application, in this case JGRESAPISCRAM. Finally, click Build and Save… to create the BAR file.

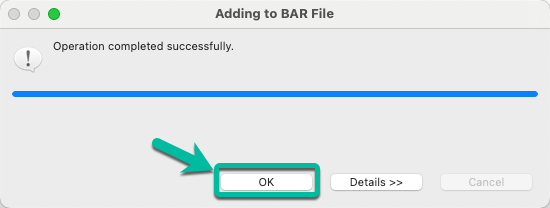

- Dismiss the notification window that reports the result by clicking OK.

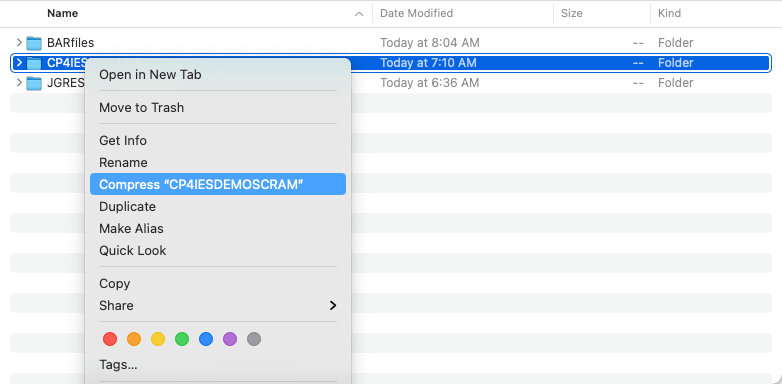

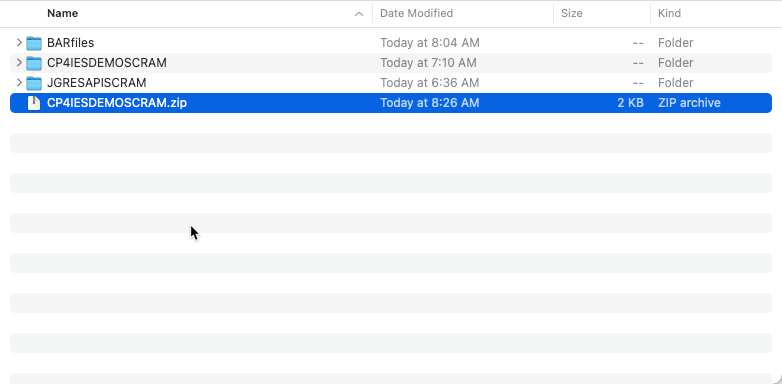

- Before we head to the ACE Dashboard, there is one final step: create a zip file from the Policy Project we created earlier in this section. Navigate to the folder where your ACE Toolkit workspace is located (in my case, on a Mac, it is located at /Users/<your user>/IBM/ACET12/<your workspace>) and use your tool of choice to compress the policy project folder. The following picture shows one possible option.

- Take note of where the zip file is located, because we will use it in the next section.
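You can also create the zip from a script. This sketch uses Python's zipfile module to compress the policy project folder with the folder itself as the top-level entry in the archive. This is similar to compressing the folder in Finder or Explorer. The function name is hypothetical, and the paths you pass are placeholders for your own workspace. Keep the zip outside the project folder.

```python
import zipfile
from pathlib import Path


def zip_policy_project(project_dir: Path, zip_path: Path) -> list[str]:
    """Zip a policy project folder with the folder itself as the top-level entry.

    Returns the archive names that were written (empty directories are not stored).
    """
    project_dir = project_dir.resolve()
    if not project_dir.is_dir():
        raise NotADirectoryError(project_dir)
    # Writing the zip inside the folder being zipped would make it archive itself.
    if zip_path.resolve().is_relative_to(project_dir):
        raise ValueError("create the zip file outside the policy project folder")
    written = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(project_dir.rglob("*")):
            if path.is_file():
                # Keep the project folder name as a prefix, e.g. CP4IESDEMOSCRAM/<file>.
                name = path.relative_to(project_dir.parent).as_posix()
                archive.write(path, arcname=name)
                written.append(name)
    return written
```

For example, pass the CP4IESDEMOSCRAM folder from your workspace as project_dir and a path such as CP4IESDEMOSCRAM.zip in your home folder as zip_path.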

Now we can move to the next phase.

4 - Create Configurations in ACE Dashboard

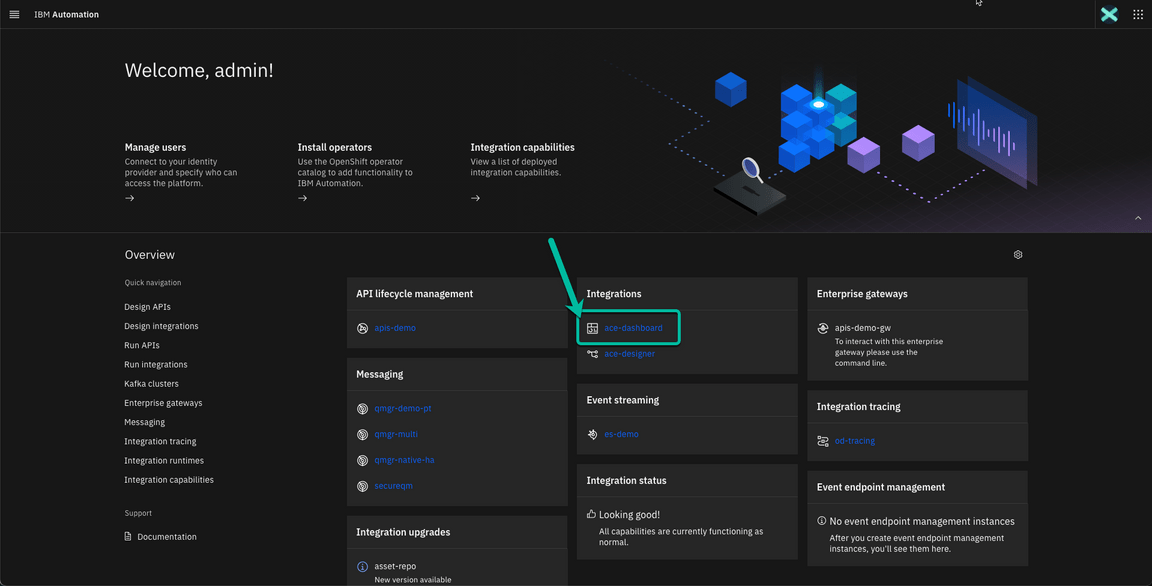

- Back in the CP4I Platform Navigator, open the ACE Dashboard.

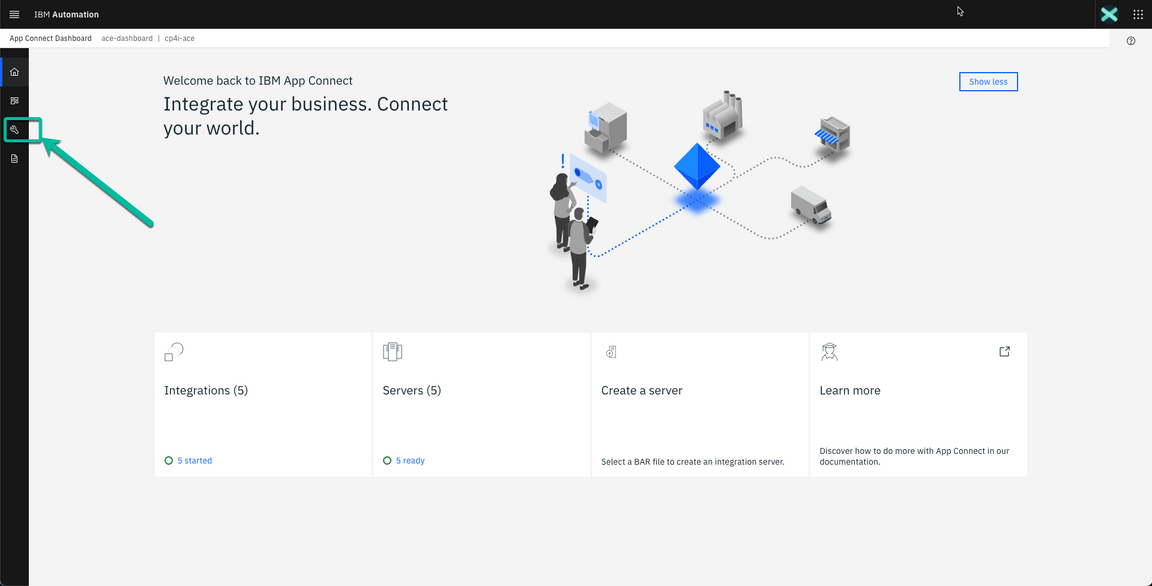

- Click on the Wrench icon to navigate to the Configuration section.

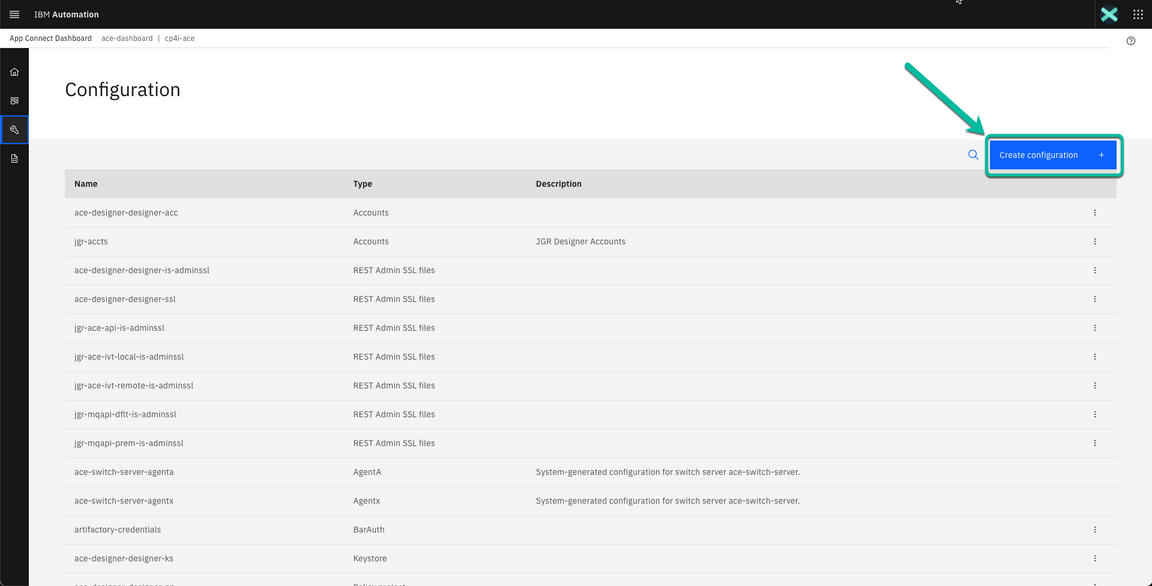

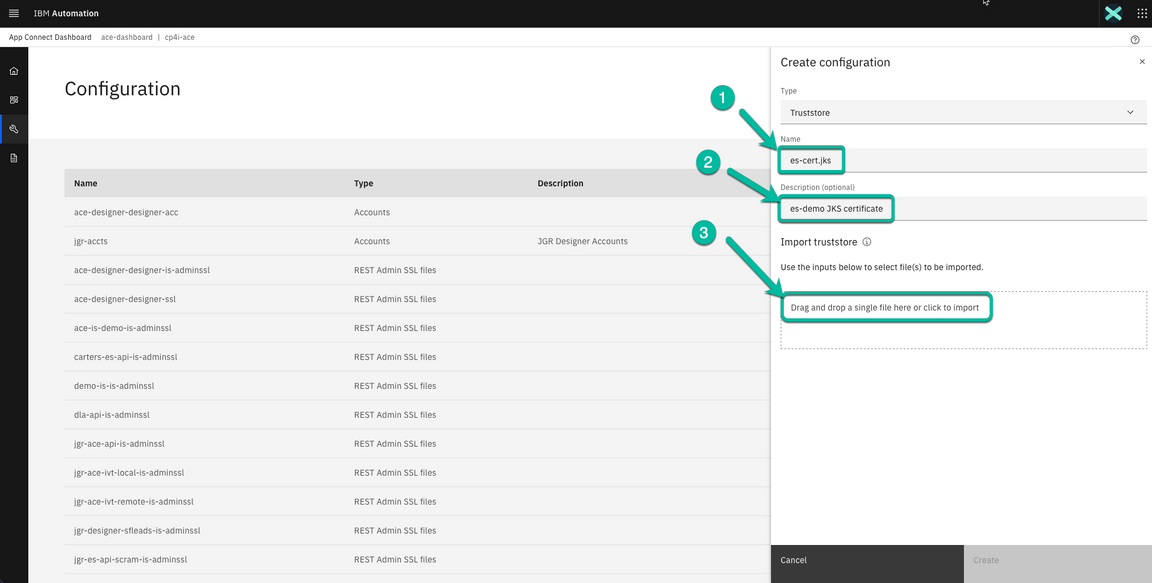

- Once you are in the Configuration window, click Create configuration.

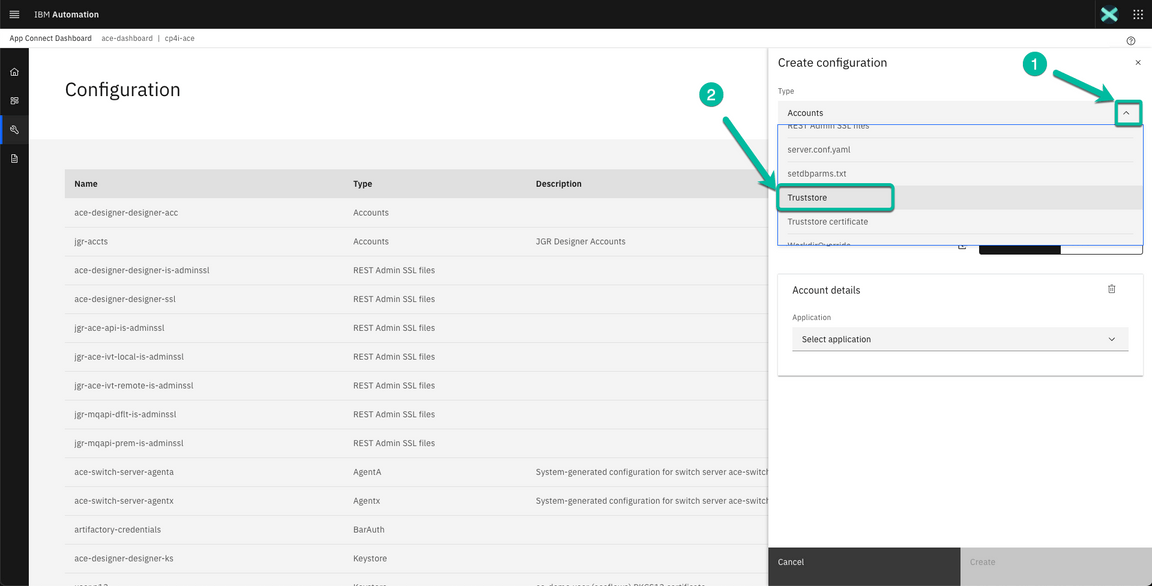

- In the wizard click on the Type drop down box to select Truststore to upload the Event Streams certificate.

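- In the next window, enter the name of the certificate you created in the previous section (es-cert.jks) and, optionally, a brief description. In my case I used es-demo JKS certificate. Use exactly this name, because the SSL truststore location in the policy (/home/aceuser/truststores/es-cert.jks) expects a file called es-cert.jks. Finally, click Drag and drop a single file here or click to import.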

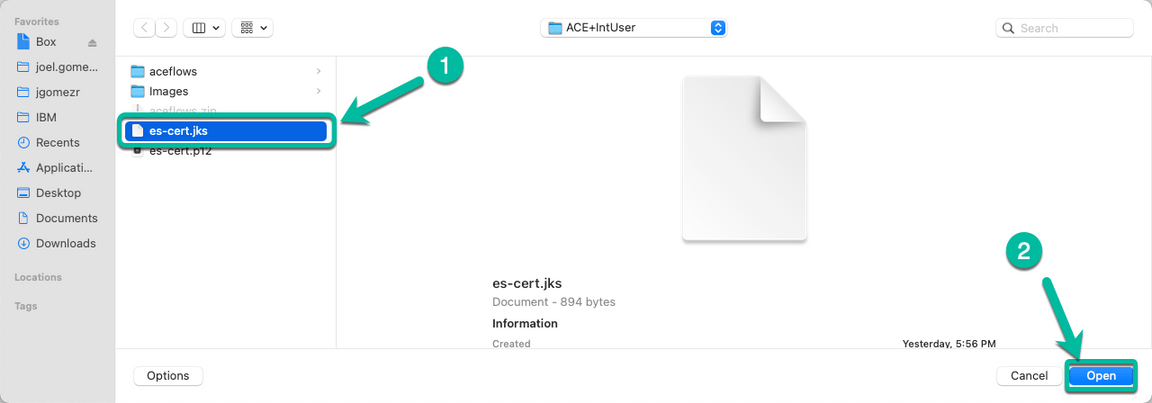

- Navigate to the folder where you created the JKS certificate in the previous section, select the file, and click Open.

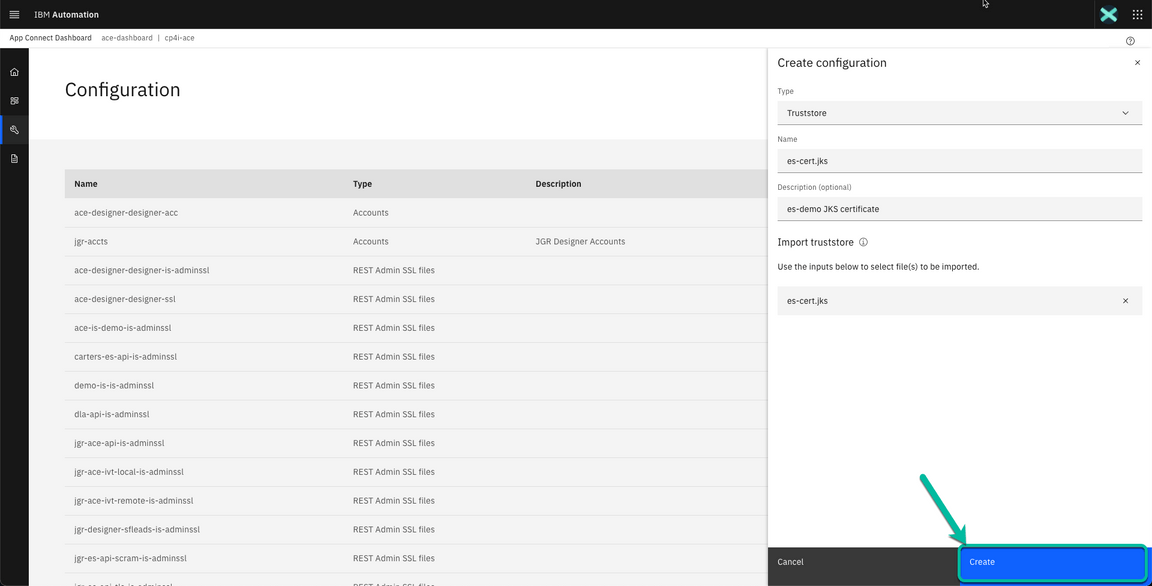

- Finally, click Create to add the Truststore configuration.

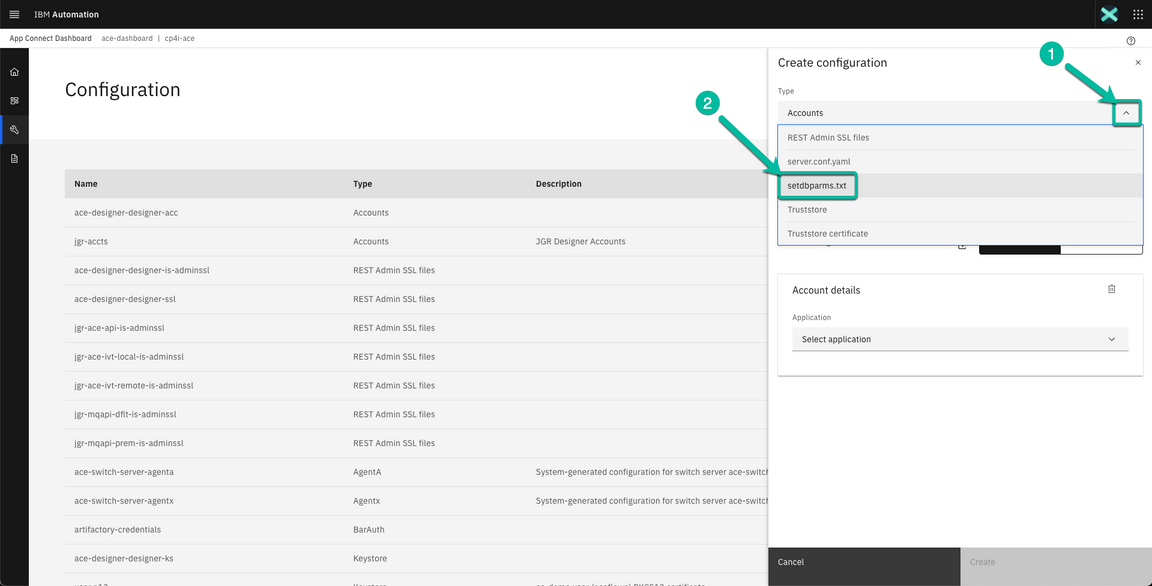

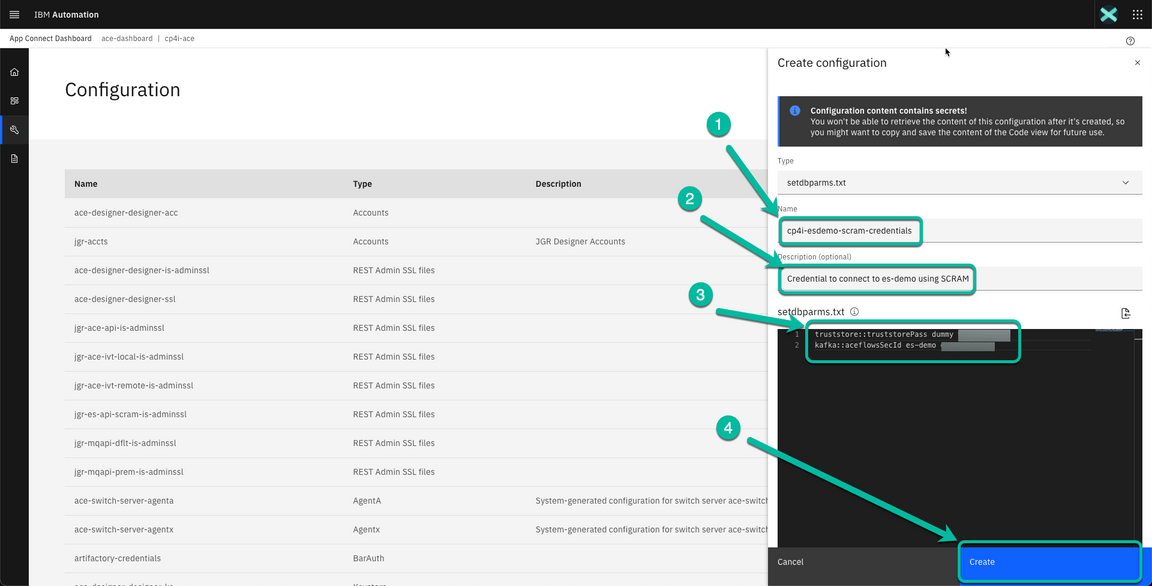

- Now we will define the configuration that stores the credentials by selecting the setdbparms.txt type from the drop-down box.

- In the next window, enter the name of the configuration. In my case I used cp4i-esdemo-scram-credentials. Then add a brief description, e.g. Credential to connect to es-demo using SCRAM. Finally, use the following information as a reference to enter the data in the text box.

| Resource Name | User | Password |
|---|---|---|
| truststore::truststorePass | dummy | <Certificate password copied in section “Create SCRAM credentials to connect to Event Streams”> |
| kafka::aceflowsSecId | <SCRAM user created in section “Create SCRAM credentials to connect to Event Streams”, e.g. ace-es-user-scram> | <SCRAM password copied in section “Create SCRAM credentials to connect to Event Streams”> |
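This sketch assumes the usual setdbparms.txt layout of one space-separated resource user password entry per line. Under that assumption, the following Python builds the two lines from the identity names used in the Kafka policy (aceflowsSecId and truststorePass). This keeps the resource names and the policy from drifting apart. The function names are hypothetical, and the sketch simply rejects empty values or values containing whitespace instead of trying to quote them.

```python
def setdbparms_entries(policy_identity: str, scram_user: str, scram_password: str,
                       truststore_identity: str, truststore_password: str) -> list[tuple[str, str, str]]:
    """Derive the two entries from the security identity names used in the Kafka policy."""
    return [
        # The truststore entry only needs the password; the user field is a placeholder.
        (f"truststore::{truststore_identity}", "dummy", truststore_password),
        (f"kafka::{policy_identity}", scram_user, scram_password),
    ]


def setdbparms_text(entries: list[tuple[str, str, str]]) -> str:
    """Render one 'resource user password' line per entry."""
    lines = []
    for resource, user, password in entries:
        fields = (resource, user, password)
        # Fields are space-separated, so empty values or embedded whitespace would break parsing.
        if any(not field or any(ch.isspace() for ch in field) for field in fields):
            raise ValueError(f"invalid field in setdbparms entry for {resource!r}")
        lines.append(f"{resource} {user} {password}")
    return "\n".join(lines) + "\n"
```

Paste the returned text into the text box of the setdbparms.txt configuration.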

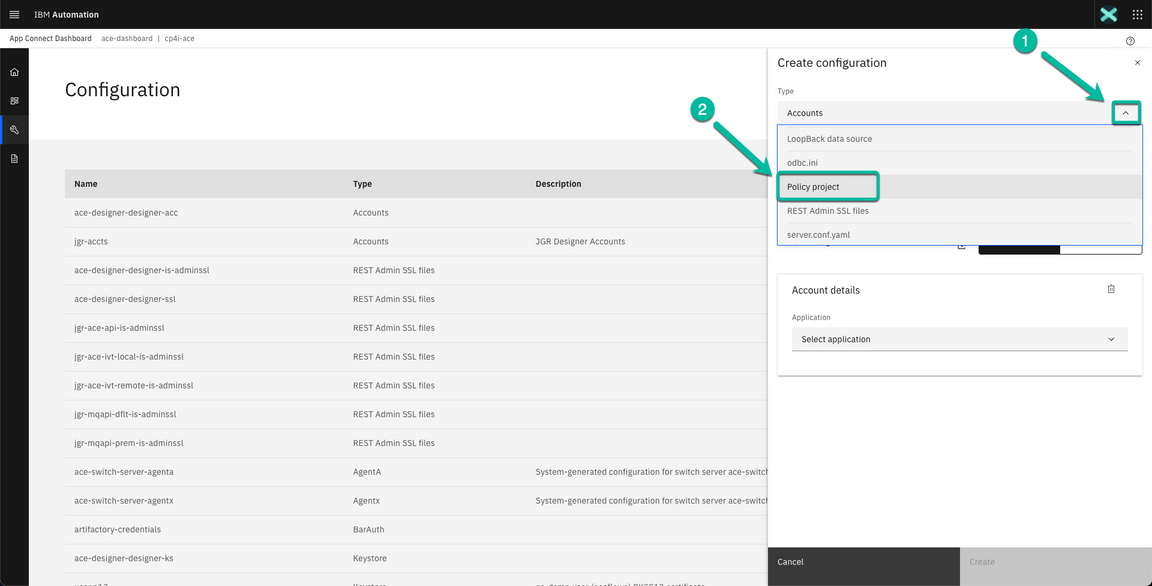

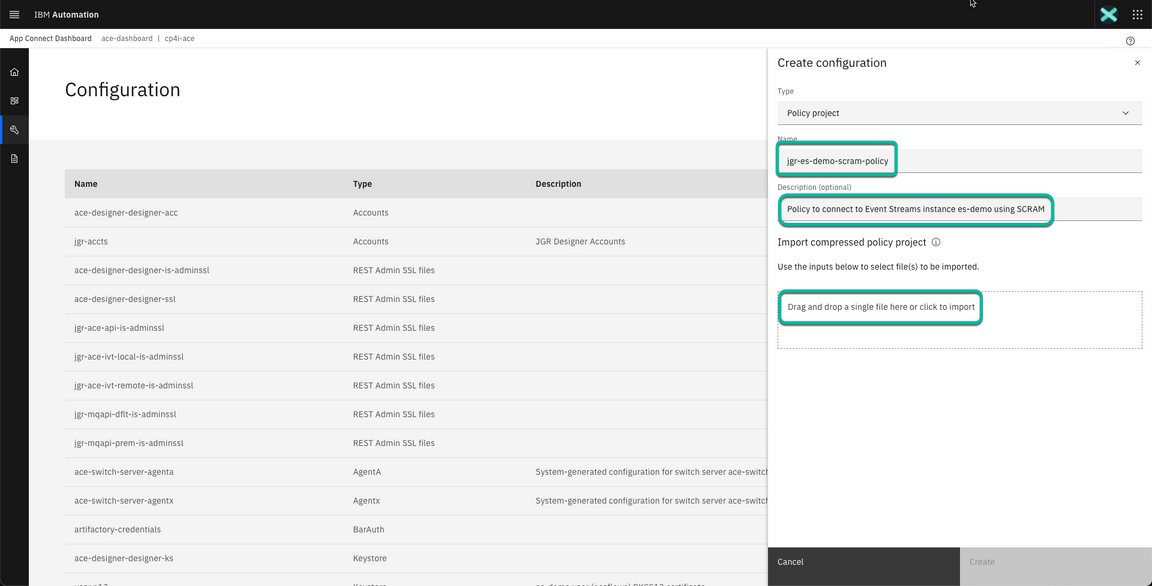

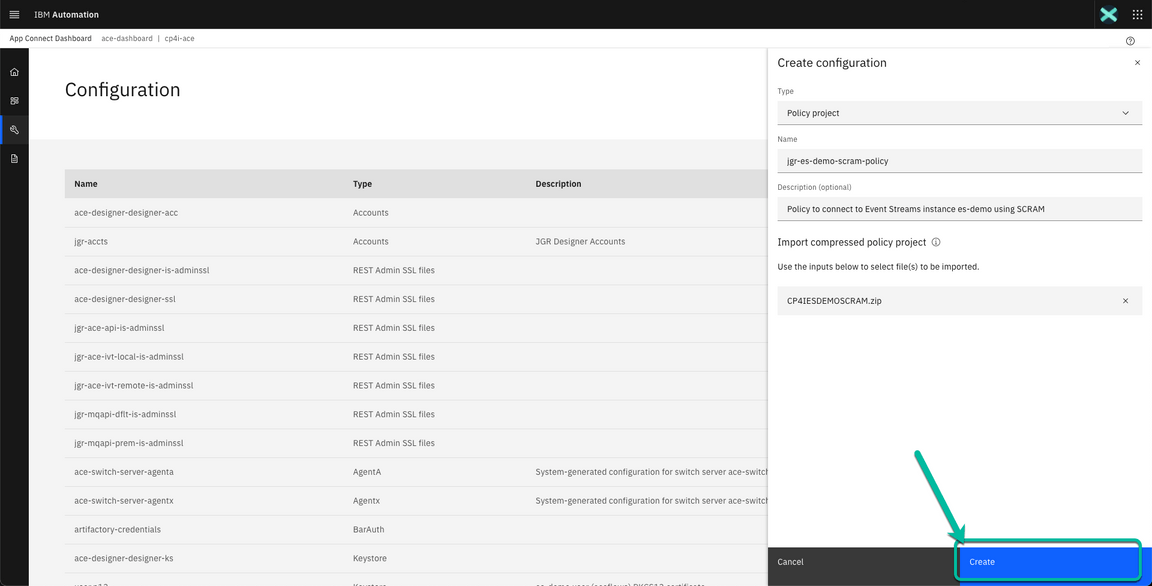

- Now we will create the configuration for the policy by selecting the Policy project type from the drop-down box.

- In the next window, enter the name of the configuration, e.g. jgr-es-demo-scram-policy, and optionally a brief description, e.g. Policy to connect to Event Streams instance es-demo using SCRAM. Finally, click the Drag and drop a single file here or click to import hyperlink to import the zip file we created in the previous section.

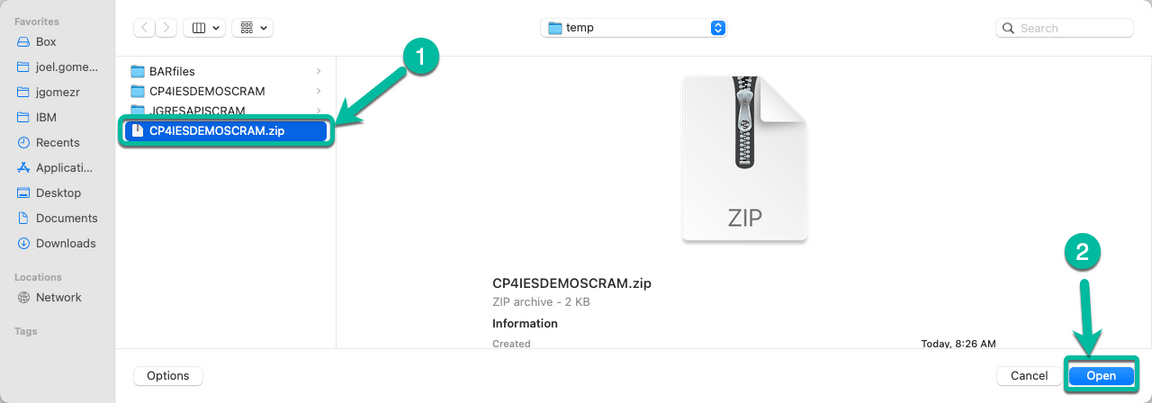

- Navigate to the folder where you saved the zip file in the previous section, select the file and click Open.

- Finally click Create to save the configuration for the policy project.

We are ready to move to the final phase of the process.

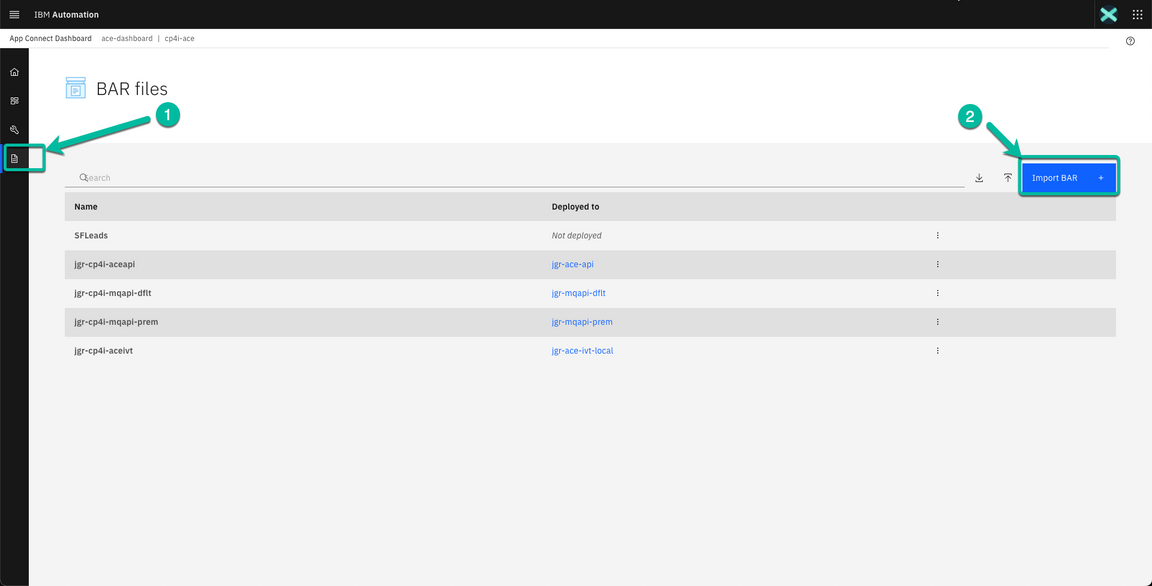

5 - Deploy BAR file using ACE Dashboard

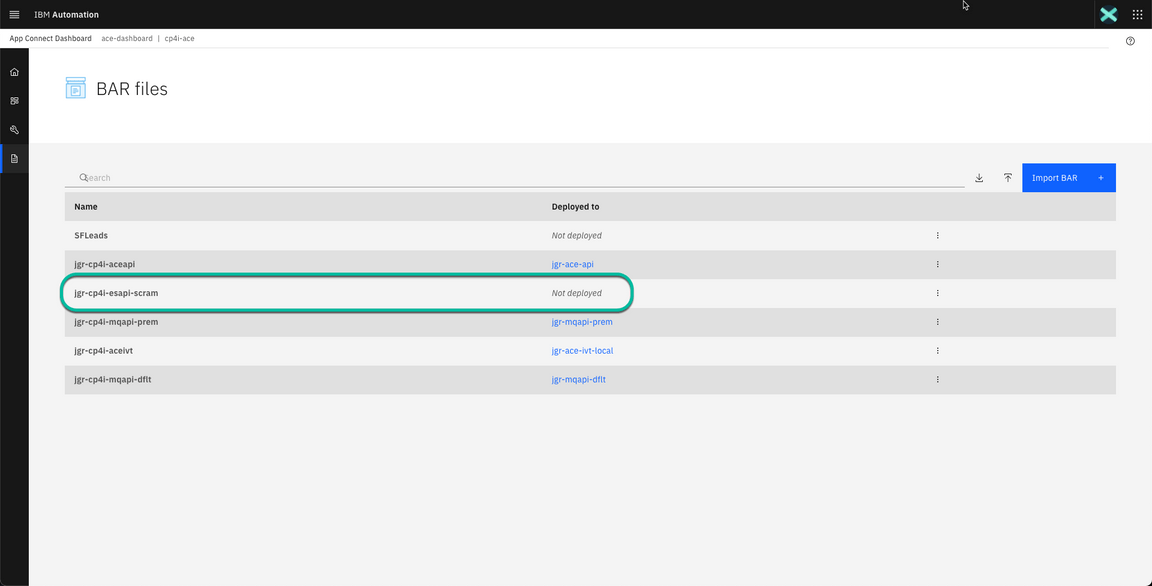

- From the ACE Dashboard home page, navigate to the BAR files section by clicking the Document icon, and then click the Import BAR + button.

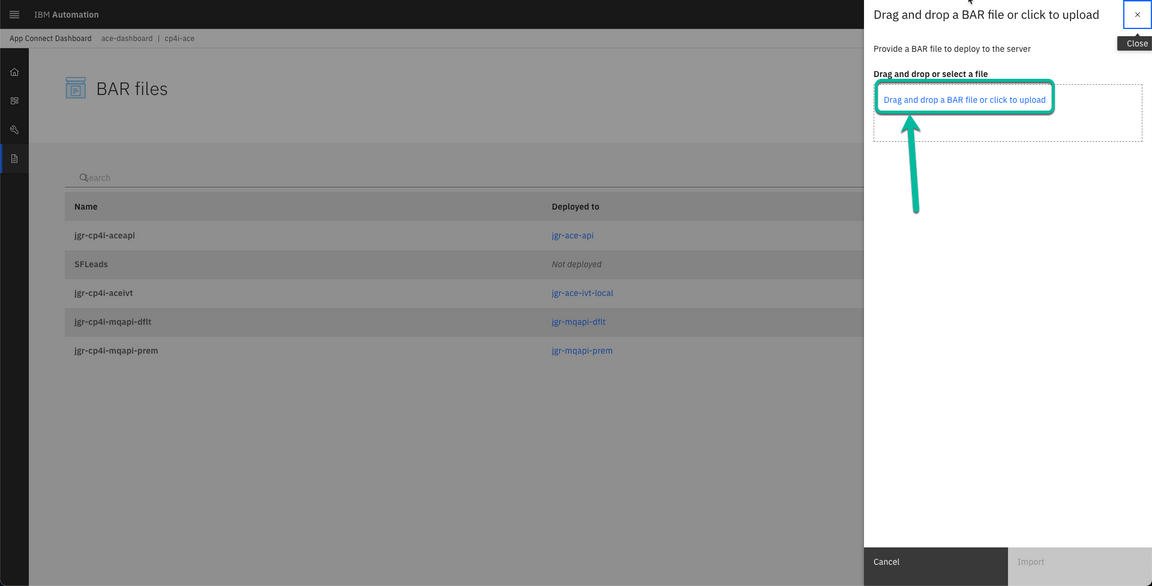

- In the wizard, click the Drag and drop a BAR file or click to upload hyperlink to upload the BAR file we created in the previous section.

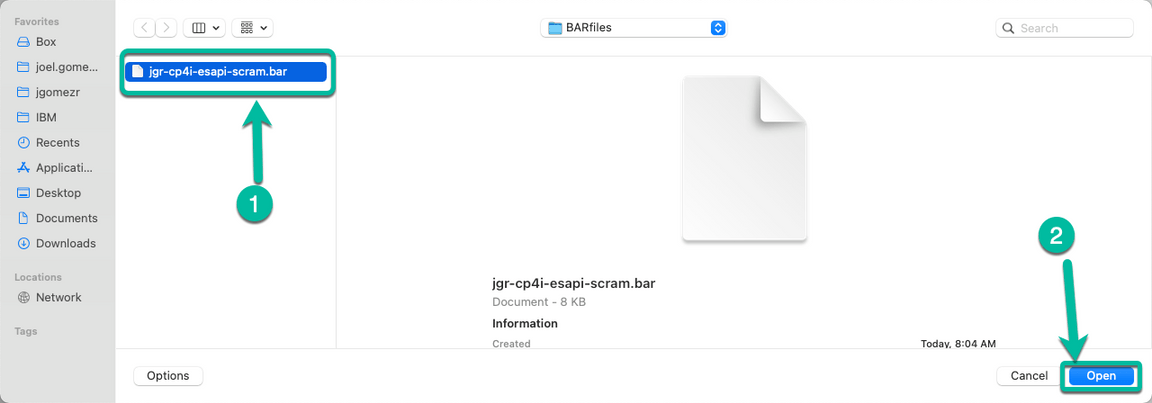

- Navigate to the folder where the BAR file was created (on a Mac you can find it at /Users/<your user>/IBM/ACET12/<your workspace>/BARfiles), select the file, and click Open.

- To complete the process, click Import.

- After a moment, the file is displayed in the list of available BAR files with the status Not deployed.

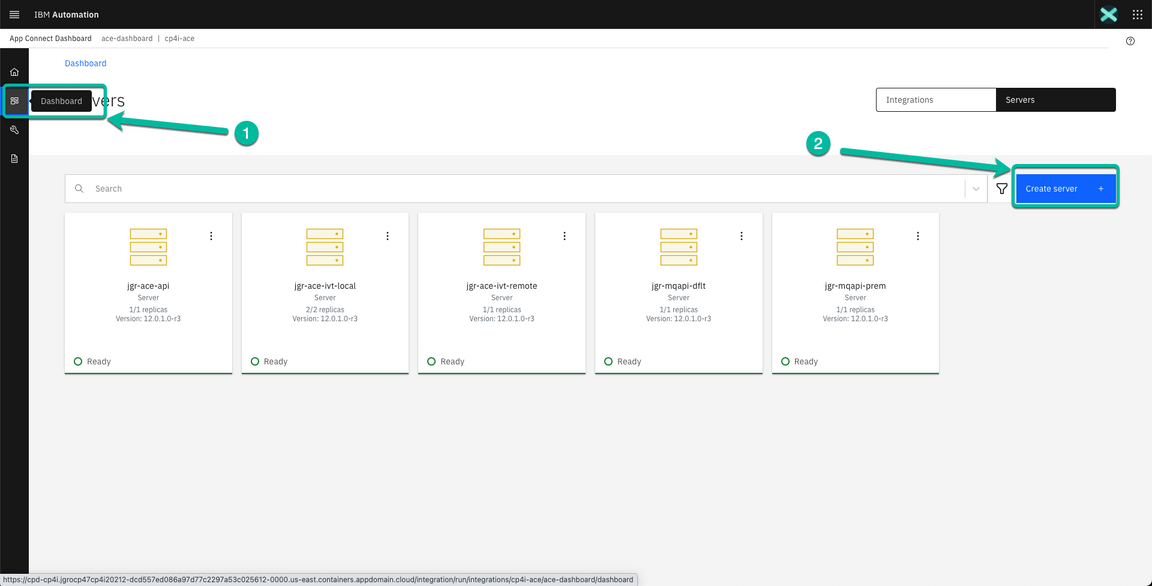

- Now navigate to the Dashboard section and click on the Create server + button.

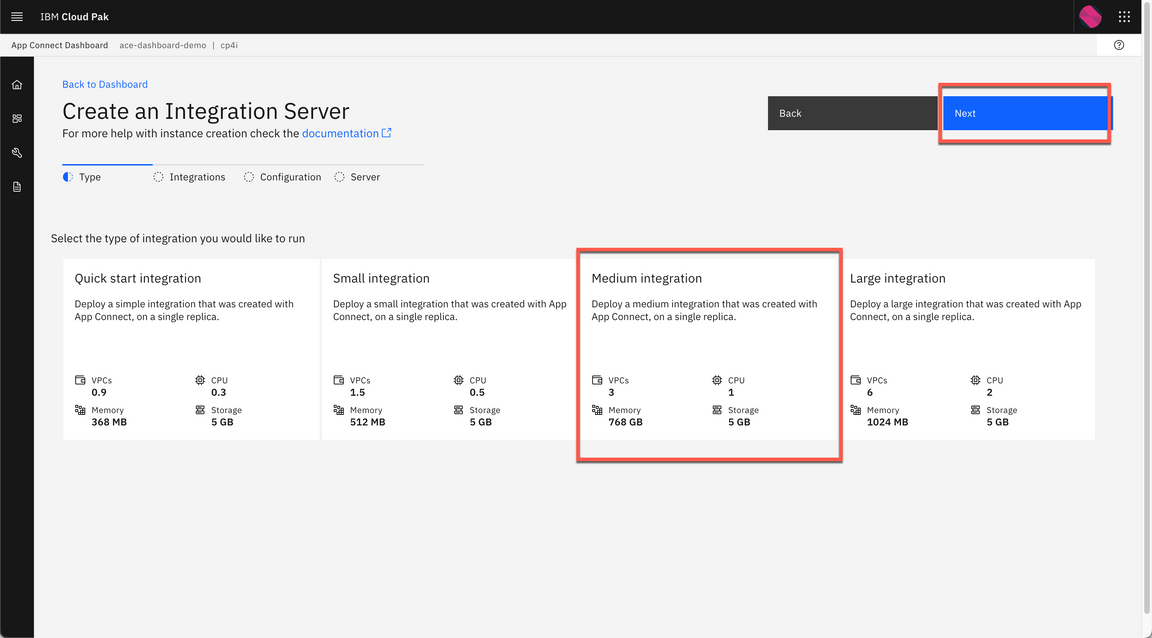

- This will start the deployment wizard. Select the Medium Integration tile and then click the Next button.

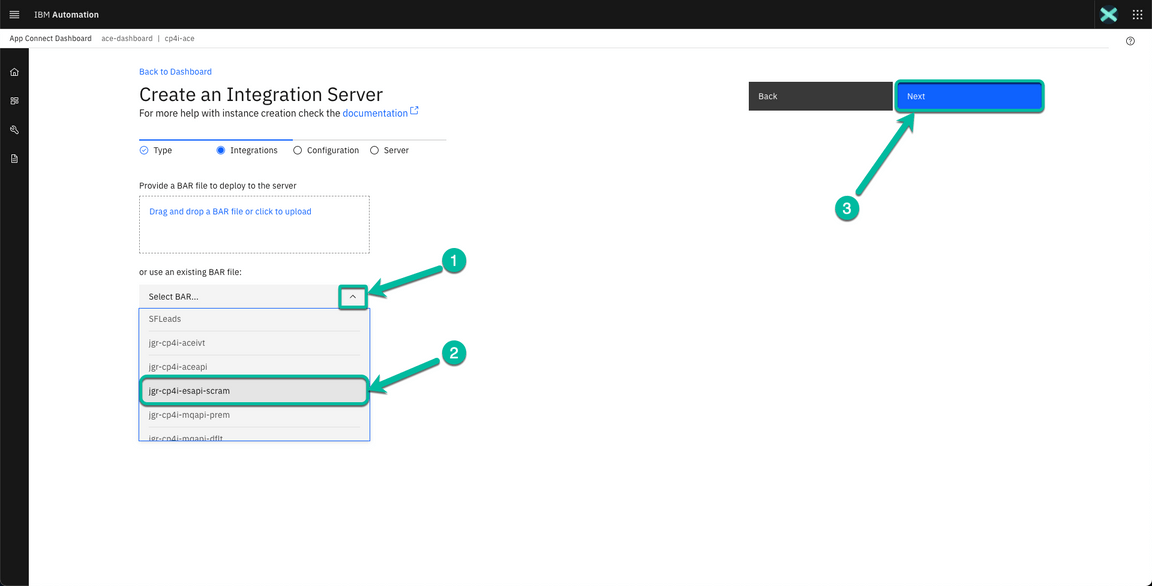

- In the next window, click the drop-down box to select the BAR file we previously uploaded, and then click Next.

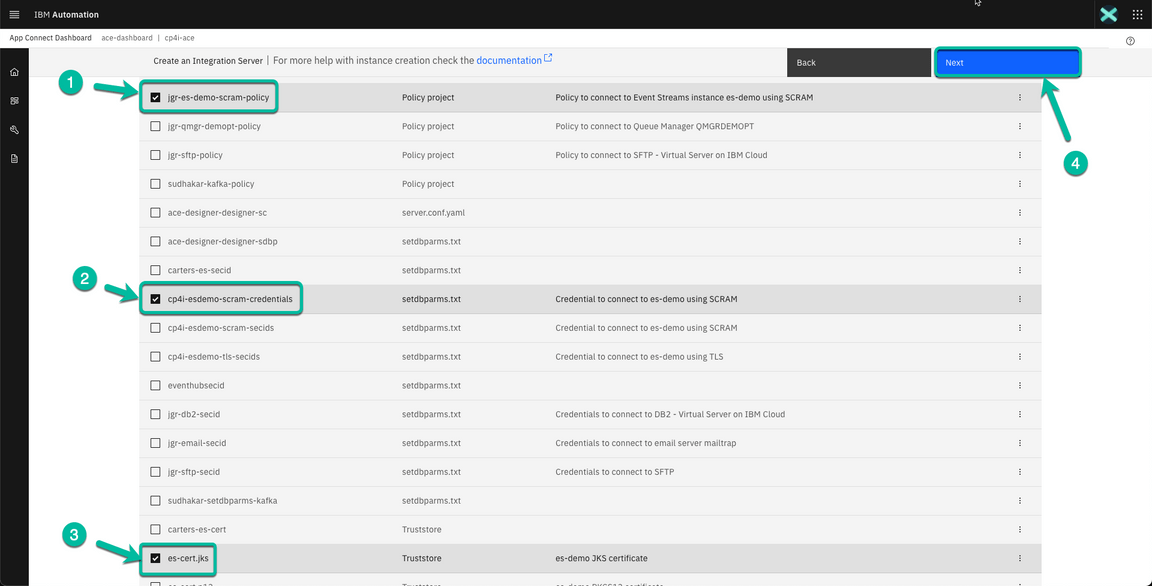

- In the next window, select the three configurations we created in the previous section and then click Next.

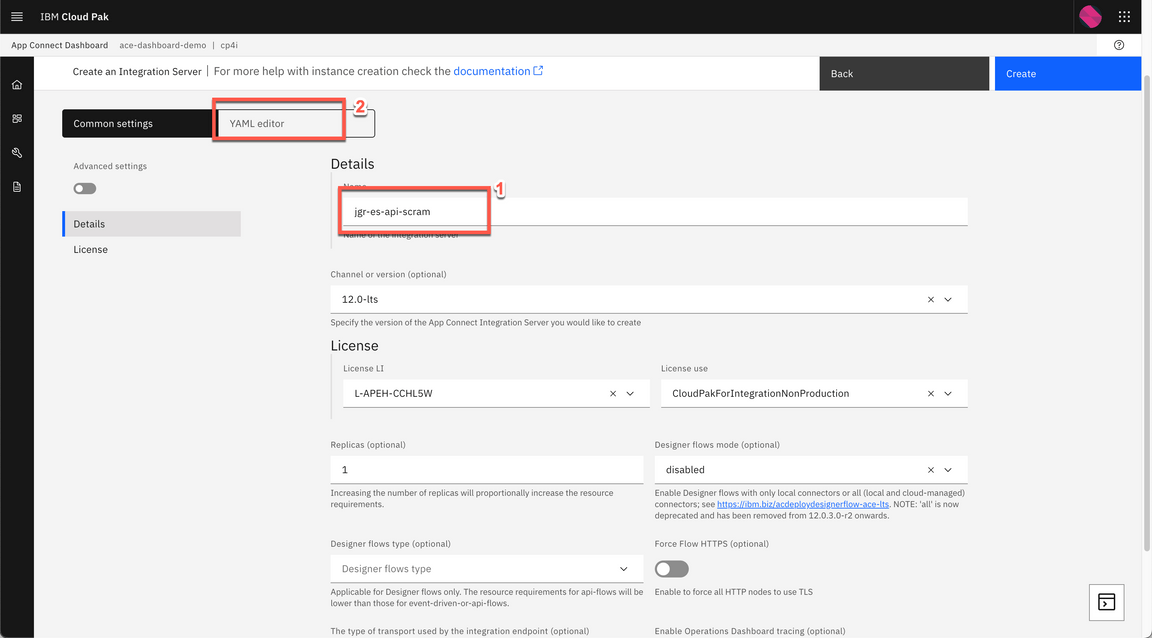

- On the next page, give your deployment a name, e.g. jgr-es-api-scram, and open the YAML EDITOR.

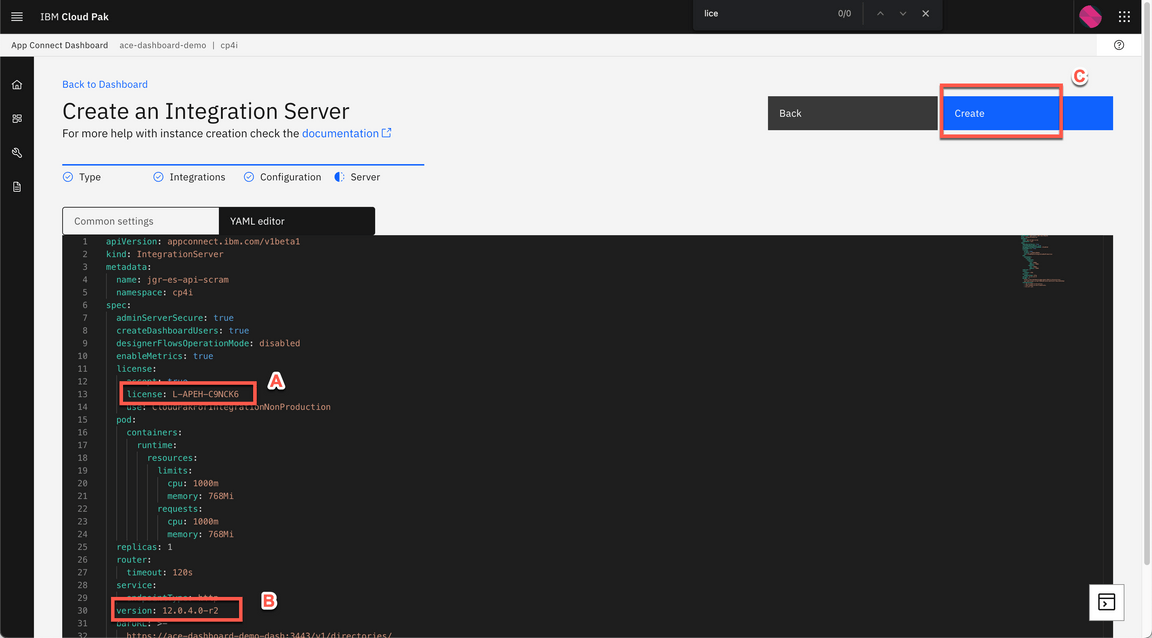

Because of a known issue in Integration Server v12.0.5 with Kafka Nodes, we will use a previous version of the Integration Server. Let’s do it!

Change the license value to L-APEH-C9NCK6 (A). And change the version value to 12.0.4.0-r2 (B). Then click Create (C).
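In the YAML editor, value (A) is the license ID under spec.license.license and value (B) is spec.version of the IntegrationServer custom resource. If you script deployments instead of using the dashboard, the following minimal Python sketch applies the same change to a parsed resource. The resource is a plain dict, for example one loaded from the YAML with a YAML library of your choice. The function name is hypothetical. Because the lab changes the license together with the version, the sketch sets both in one call.

```python
import copy


def pin_integration_server(resource: dict, license_id: str, version: str) -> dict:
    """Return a copy of an IntegrationServer resource with a specific license and version."""
    patched = copy.deepcopy(resource)  # leave the caller's dict untouched
    spec = patched.setdefault("spec", {})
    # (A) in the YAML editor: spec.license.license
    spec.setdefault("license", {})["license"] = license_id
    # (B) in the YAML editor: spec.version
    spec["version"] = version
    return patched
```

For this lab the call would be `pin_integration_server(resource, "L-APEH-C9NCK6", "12.0.4.0-r2")`. Other fields, such as spec.license.accept, are left as they were.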

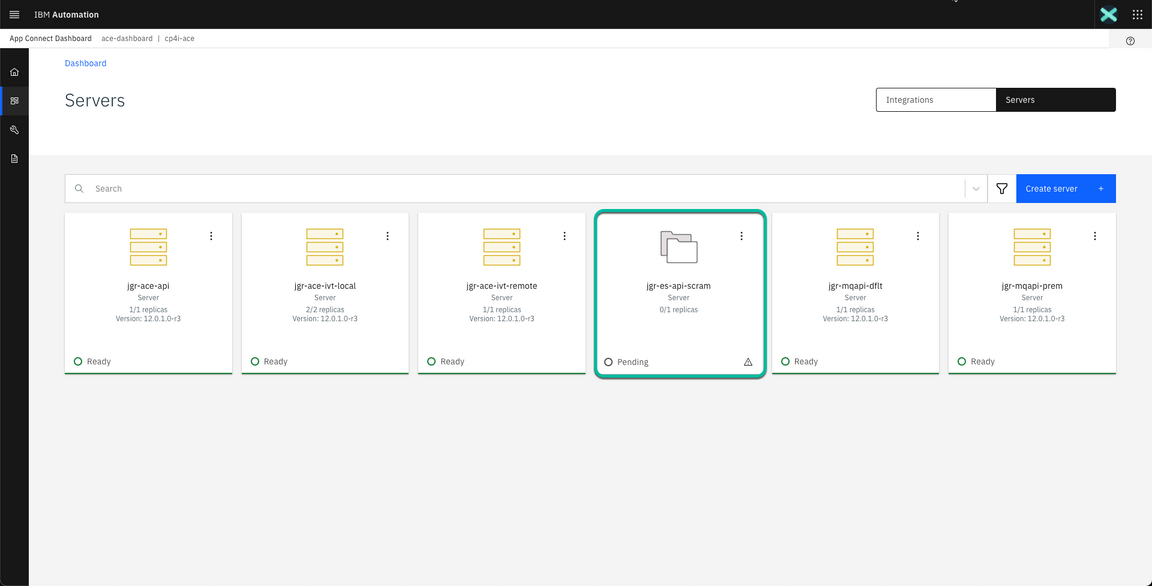

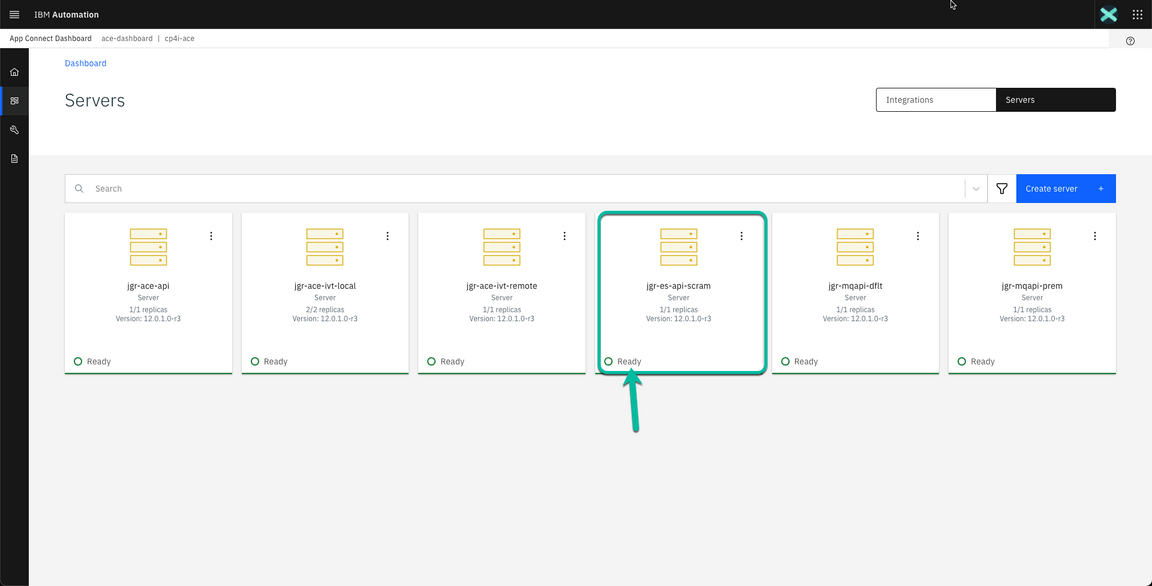

- After a moment you will be taken back to the Dashboard page where you will see a new tile with your Integration Server deployment name in Pending state, similar to the picture shown below:

- The App Connect Dashboard deploys the Integration Server in the background, creating the corresponding pod in the specified namespace of the Red Hat OpenShift Container Platform. This can take more than a minute depending on your environment. Refresh your browser until the tile for your deployment shows the Ready state, as shown below:

Now it is time to test that everything works as expected. Click the tile corresponding to your deployment.

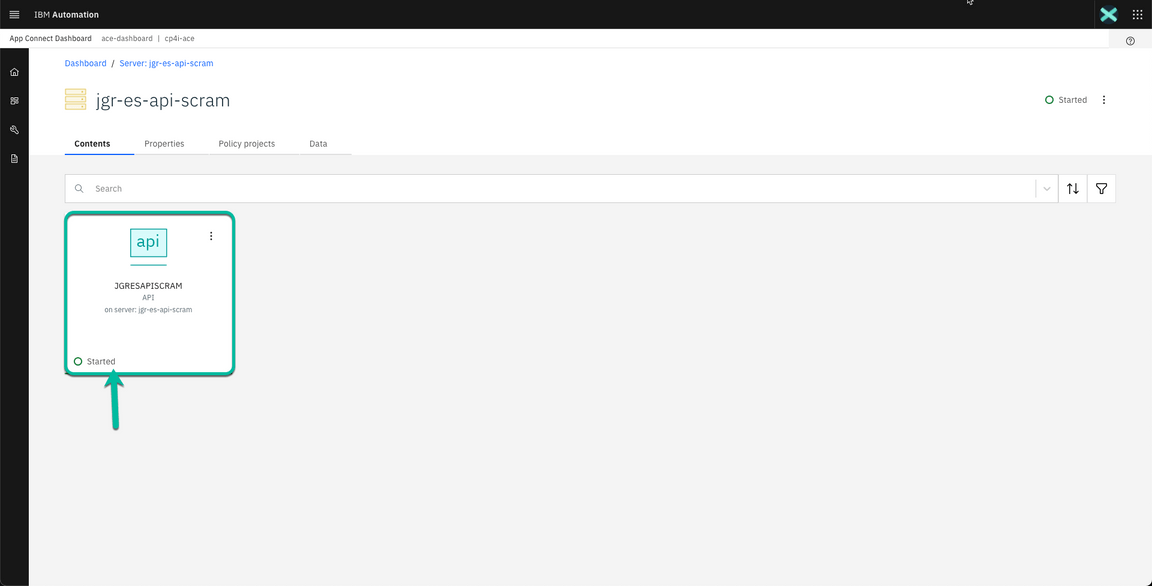

- The next window shows the API in the Started state. Click the tile to get the details.

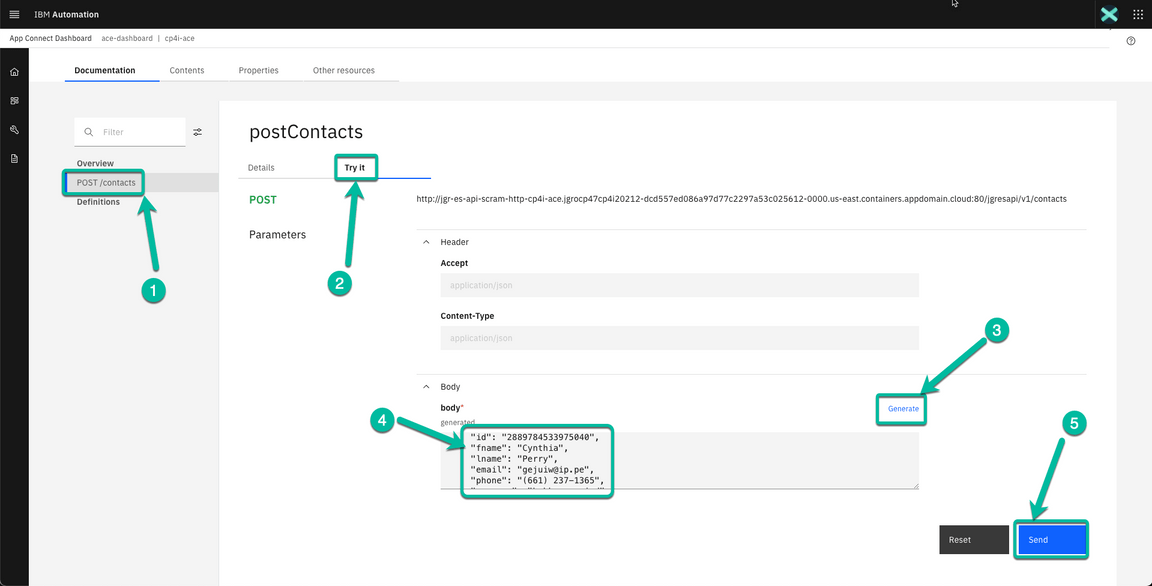

- In the next page navigate to the operation implemented in your flow (POST /contacts). Then click on the Try it tab followed by the Generate hyperlink to generate some test data. Review the data generated and click the Send button.

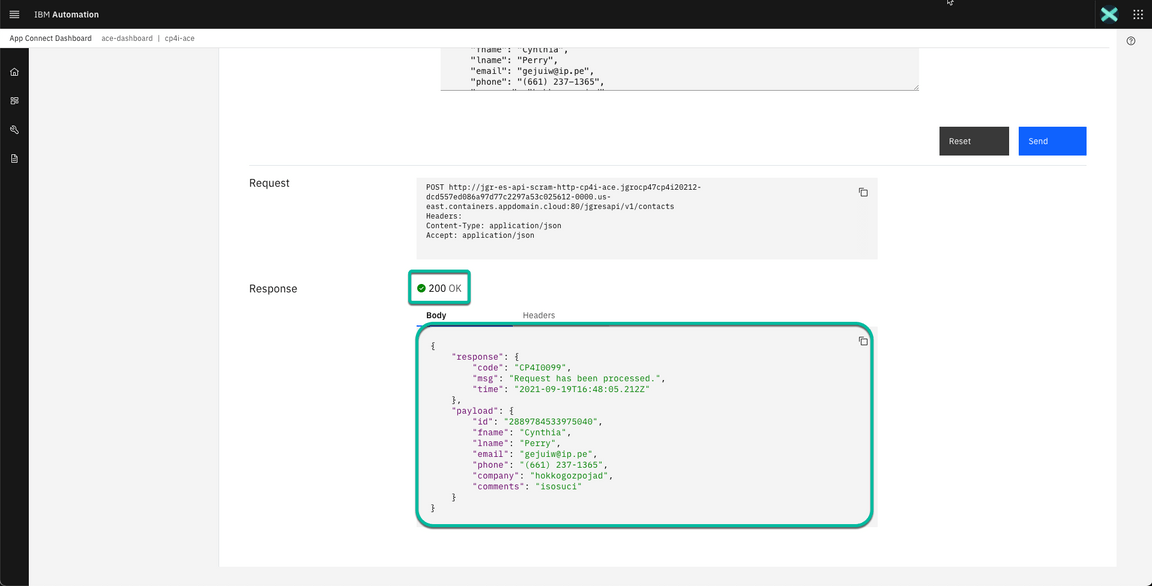

- After a moment you will get a response. If everything is OK, you will receive a 200 response code indicating that the request was successful. The response body contains the payload you sent plus some metadata, including a timestamp showing that the request completed a few seconds ago.

- To fully confirm that the message landed on the topic, navigate to the Event Streams instance and check that the message is there. On the Event Streams Home page, click the Topics icon in the navigation section and then click the topic you used in your flow, in my case kafka-cp4i-demo-topic.

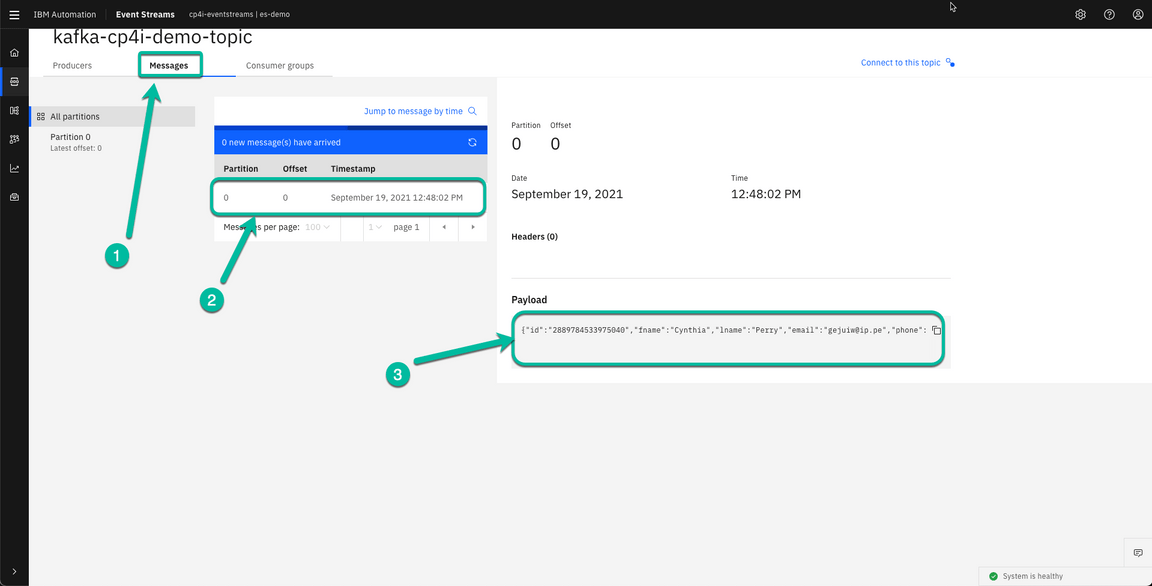

- In the topic page navigate to the Messages tab, then select the most recent message and confirm the payload includes the same data we sent during the test.
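To repeat the POST /contacts test from a script instead of the Try it tab, here is a minimal sketch using only Python's standard library. The endpoint URL and contact fields are hypothetical placeholders. Copy the real URL from the API details page and reuse the test data the dashboard generated. The sketch assumes the API needs no extra authentication. It also assumes the response is a flat JSON object that echoes the fields you sent, as described above. If your machine does not trust the route's certificate, urlopen will reject the connection unless you pass a suitable SSL context.

```python
import json
import urllib.request


def build_contact_request(endpoint: str, contact: dict) -> urllib.request.Request:
    """Build a JSON POST request for the flow's POST /contacts operation."""
    return urllib.request.Request(
        endpoint,
        data=json.dumps(contact).encode("utf-8"),
        headers={"Content-Type": "application/json", "Accept": "application/json"},
        method="POST",
    )


def response_echoes(sent: dict, received: dict) -> bool:
    """True if every field we sent comes back unchanged (extra metadata is allowed)."""
    return all(key in received and received[key] == value for key, value in sent.items())


def post_contact(endpoint: str, contact: dict, timeout: float = 30.0) -> tuple[int, dict]:
    """Send the request and return the HTTP status and the decoded JSON body."""
    # urlopen raises HTTPError for error responses, so reaching the return means a 2xx status.
    with urllib.request.urlopen(build_contact_request(endpoint, contact), timeout=timeout) as resp:
        return resp.status, json.loads(resp.read().decode("utf-8"))
```

A successful run should return status 200, and response_echoes(contact, body) should be True. After that, the message should appear on the Messages tab of your topic.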

Congratulations! You have completed this lab.

Summary

You have successfully completed this lab. In this lab you learned how to:

- Create and configure an Event Streams topic

- Configure an App Connect Enterprise message flow using the App Connect Enterprise Toolkit

- Configure the App Connect Enterprise service

- Deploy an App Connect BAR file to an App Connect Enterprise server

- Test the App Connect Enterprise API by sending a message to Event Streams