IBM MQ Uniform Clusters Demo

Introduction

We’re running a cluster of IBM MQ queue managers in Red Hat OpenShift, together with a large number of client applications that put messages to and get messages from them. This workload will vary over time, so we need flexibility in how we scale all of this.

Simplifying your messaging deployment matters to your operations team, and it is good for your business too. Higher availability can make the difference between a satisfied customer who wants to do more business with you and a disappointed customer who is looking for an alternative. It also encourages innovation, because a team that is used to shipping experiments quickly and getting user-validated results back fast will soon find itself innovating naturally.

This demo will show how we can easily scale the number of instances of our client applications up and down, without having to reconfigure their connection details and without needing to manually distribute or load balance them.

It will also show how to quickly and easily grow the queue manager cluster – adding a new queue manager to the cluster without a complex, new, custom configuration. In this demo, we will see the Uniform Cluster capability of IBM MQ in action.

Let's get started!

(Demo Slides here)

1 - Accessing the environment

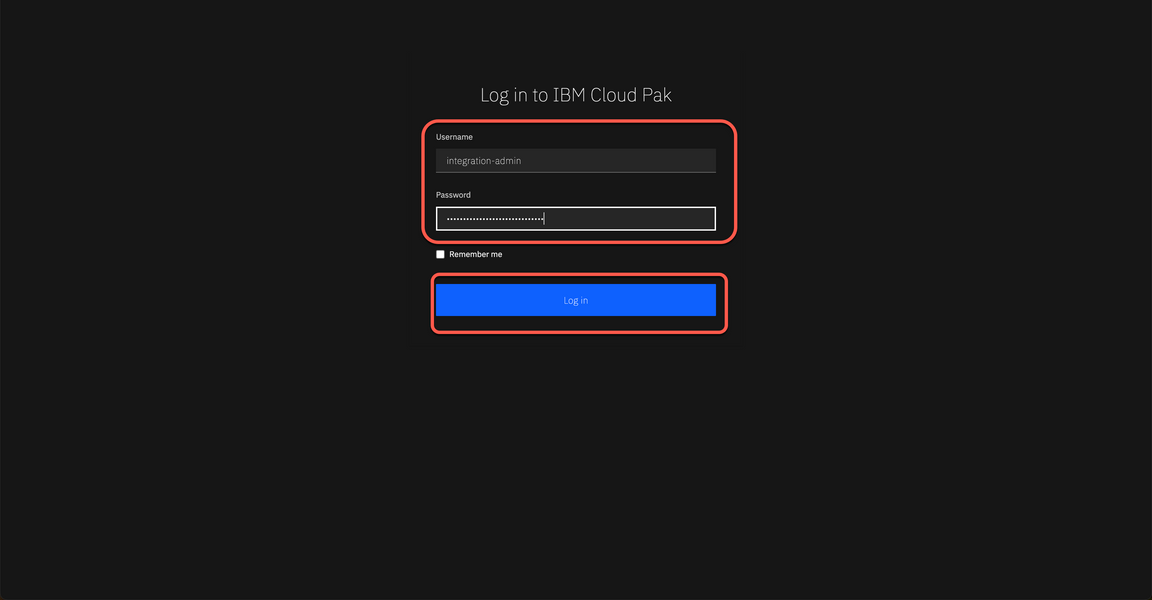

| 1.1 | Log in to Cloud Pak for Integration |

|---|---|

| Narration | Let’s see how to scale the IBM MQ cluster and client applications in OpenShift. Here we have an IBM Cloud Pak for Integration environment with the IBM MQ operator installed. We have a cloud version of the product on IBM Cloud. Let me log in here. |

| Action 1.1.1 | Open the Cloud Pak for Integration page and log in with your username and password. |

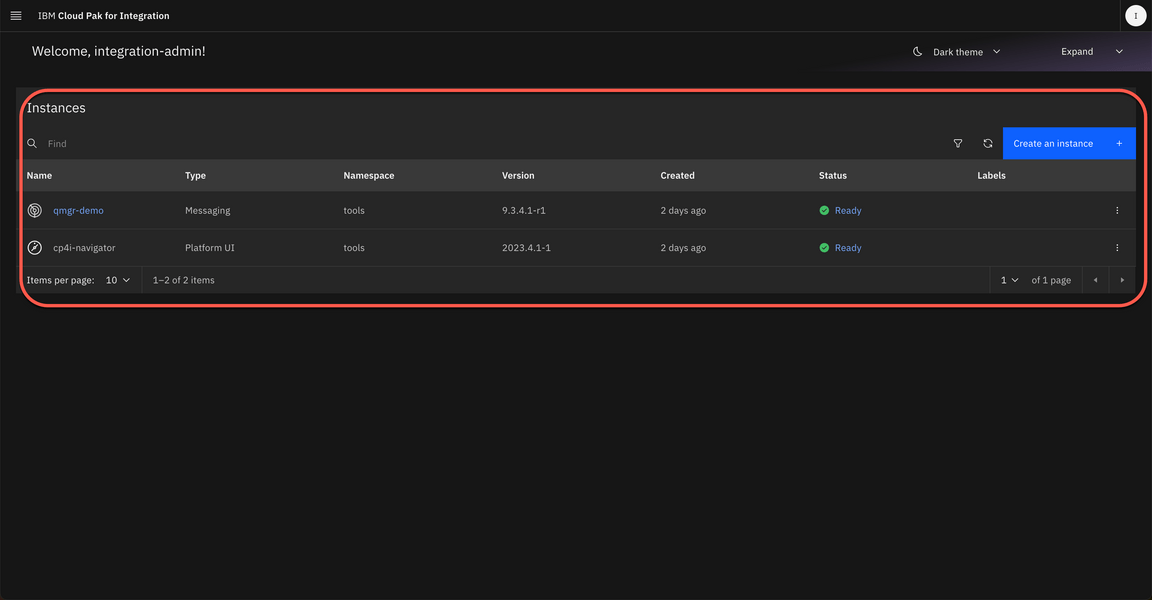

| 1.2 | View the Cloud Pak for Integration instances |

|---|---|

| Narration | Welcome to IBM Cloud Pak for Integration! We’re now at the home screen showing all the capabilities of the Pak, brought together in one place. Specialized integration capabilities (for API management, application integration, messaging, and more) are built on top of powerful automation services. As you can see, you are able to access all the integration capabilities your team needs through a single interface. So far, we have a basic MQ instance here. IBM MQ is a universal messaging backbone with robust connectivity for flexible and reliable messaging for applications and the integration of existing IT assets. In this demo, to scale our IBM MQ cluster, we will create a uniform cluster. |

| Action 1.2.1 | Show the Instances page. |

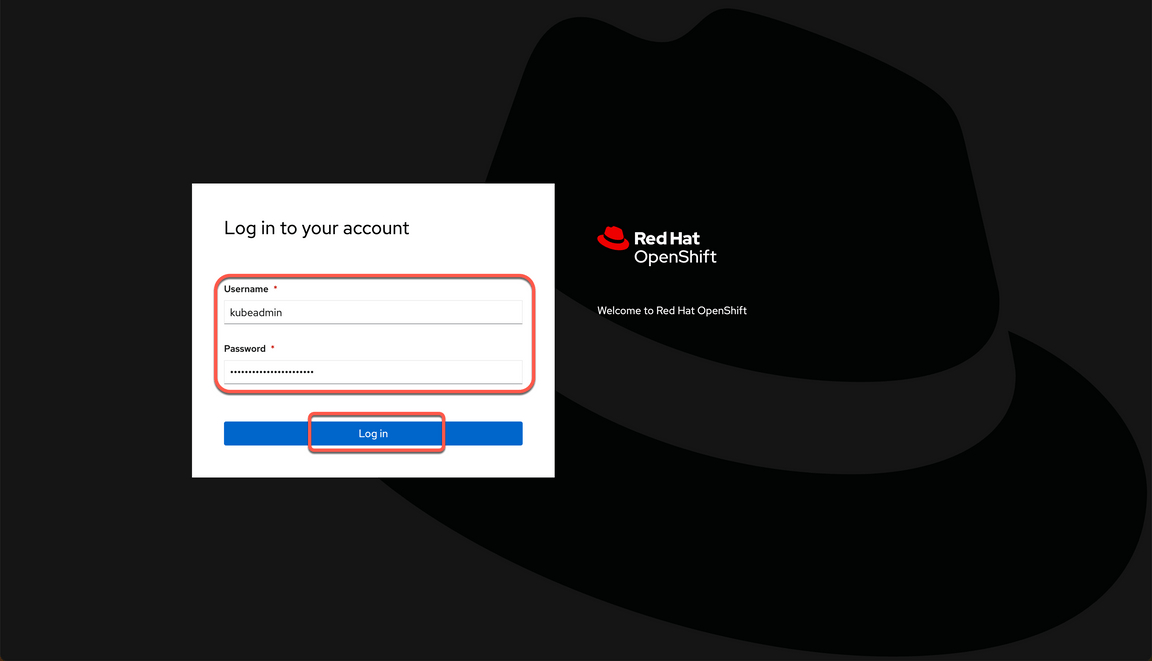

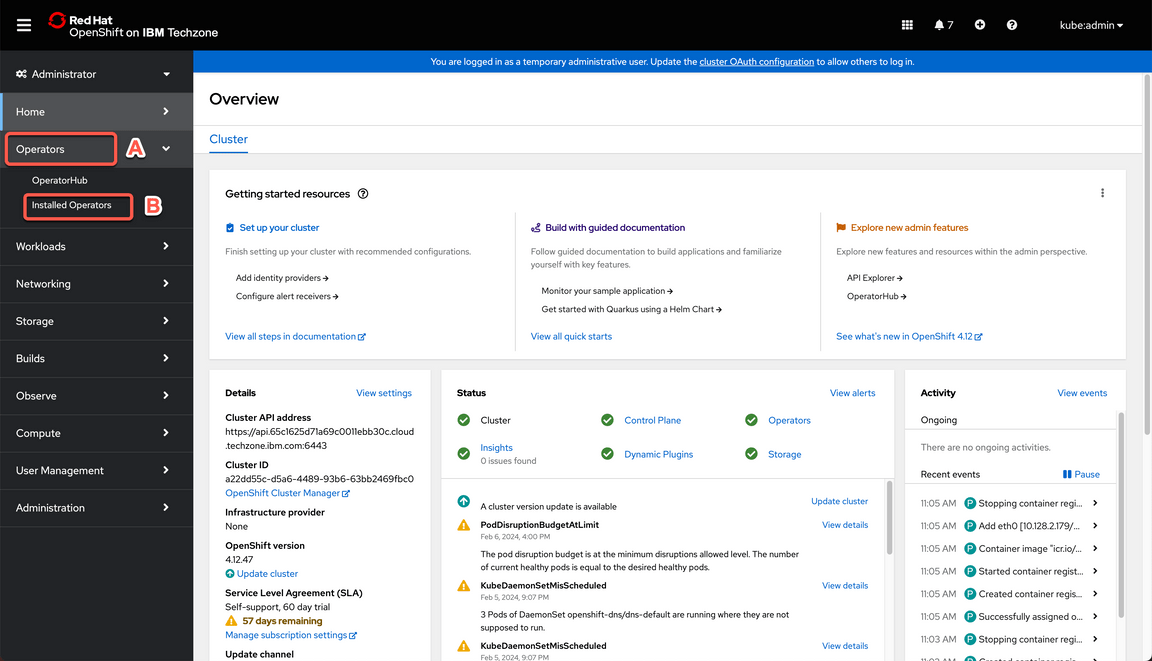

| 1.3 | Access OpenShift Web Console |

|---|---|

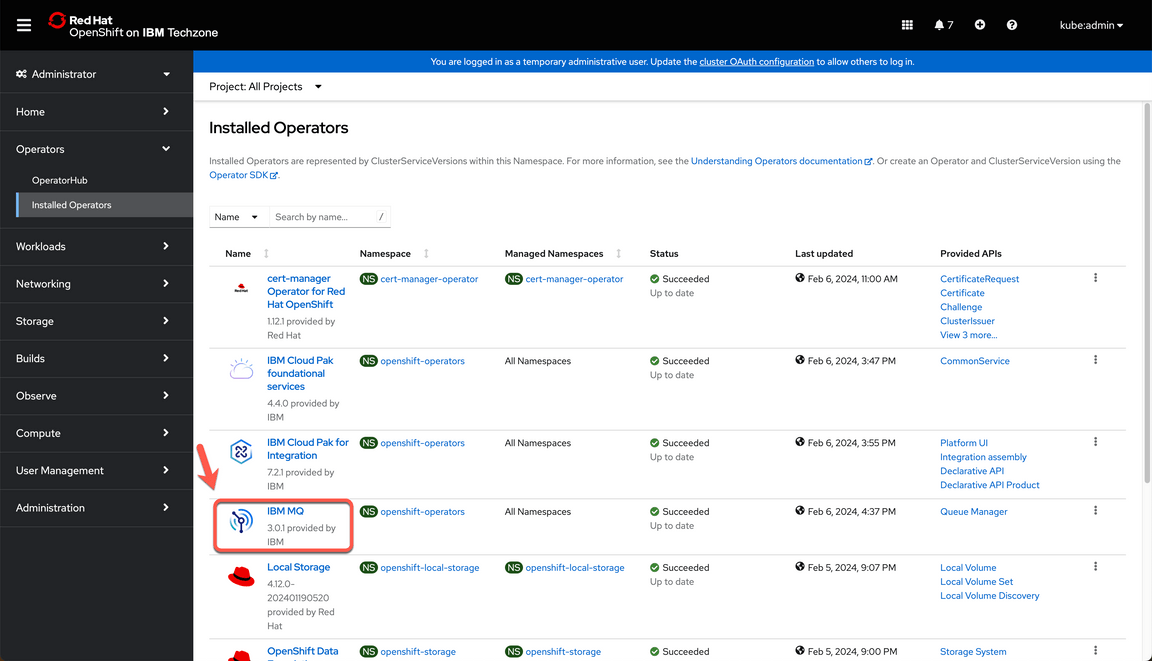

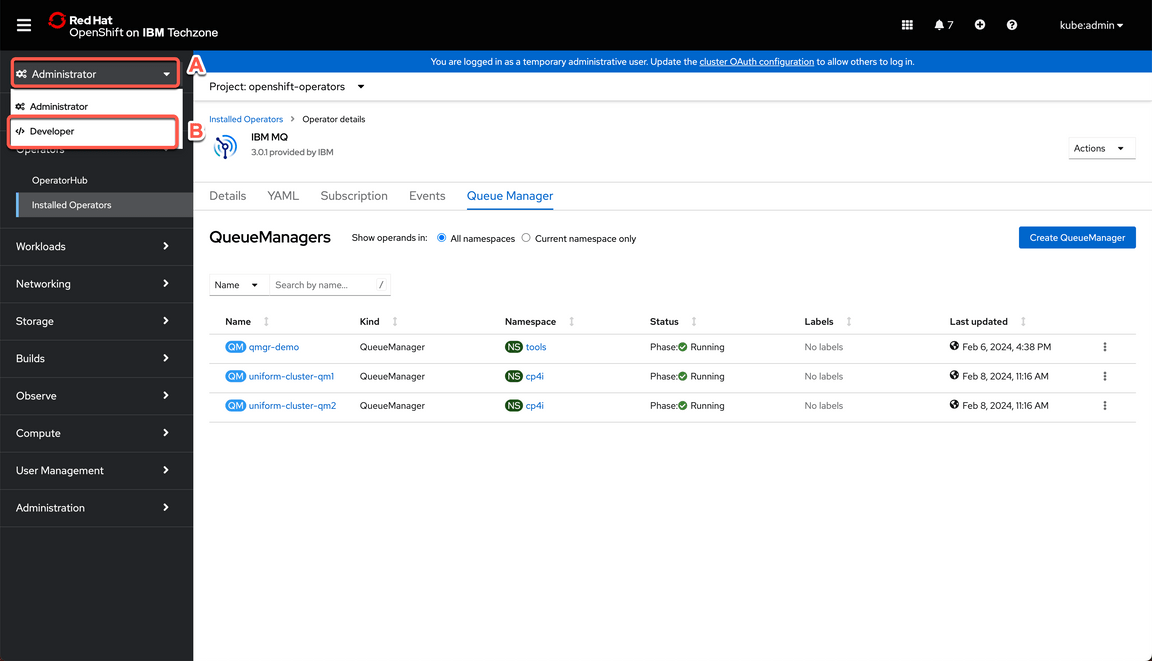

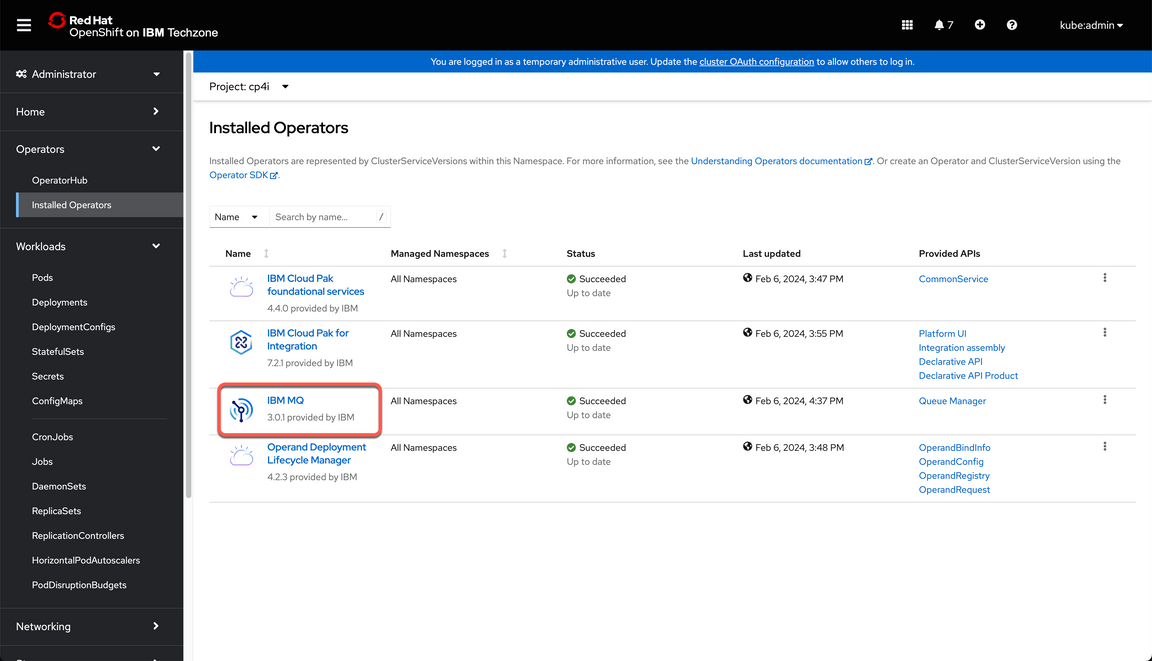

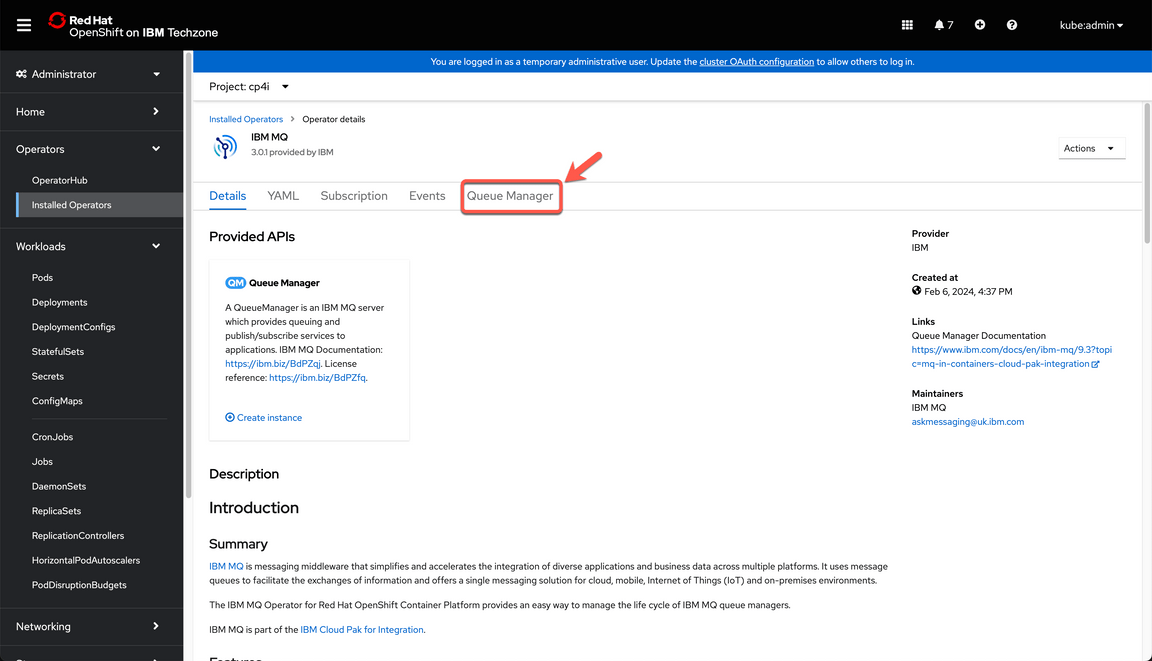

| Narration | Let’s check our environment on the OpenShift Web Console. On the Installed Operators page, we can confirm that the IBM MQ operator is installed. But we have only one queue manager so far. The next step is to create our uniform cluster in MQ. |

| Action 1.3.1 | Open the OpenShift Web Console and log in. |

| Action 1.3.2 | Open Operators (A) > Installed Operators (B). |

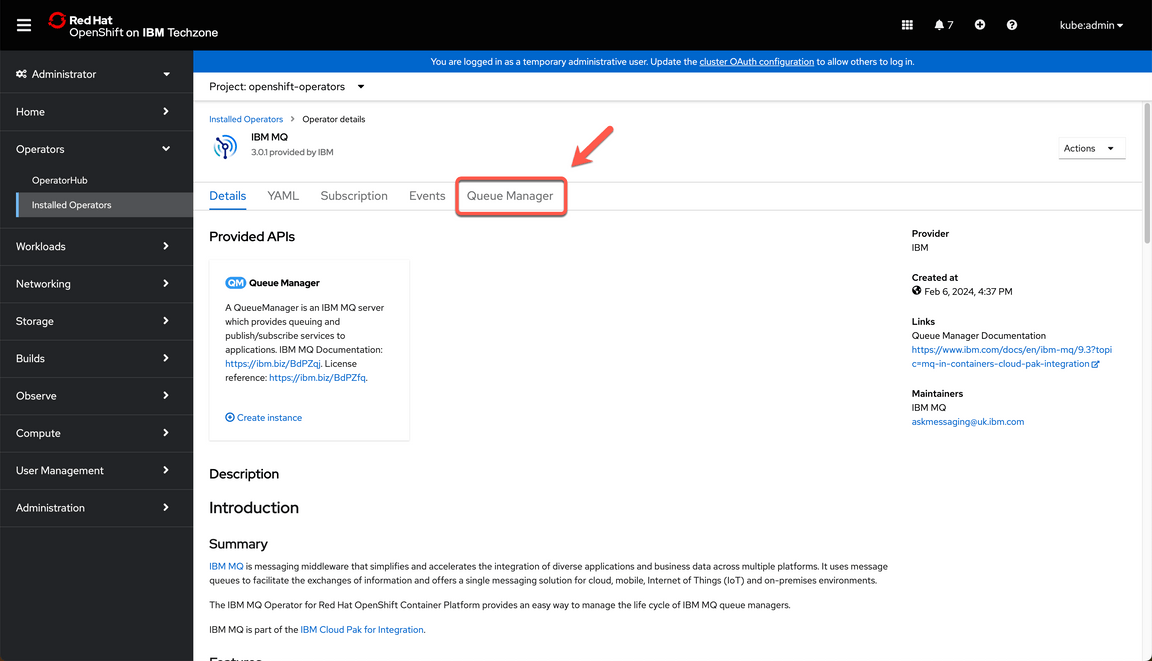

| Action 1.3.3 | Open the IBM MQ operator. |

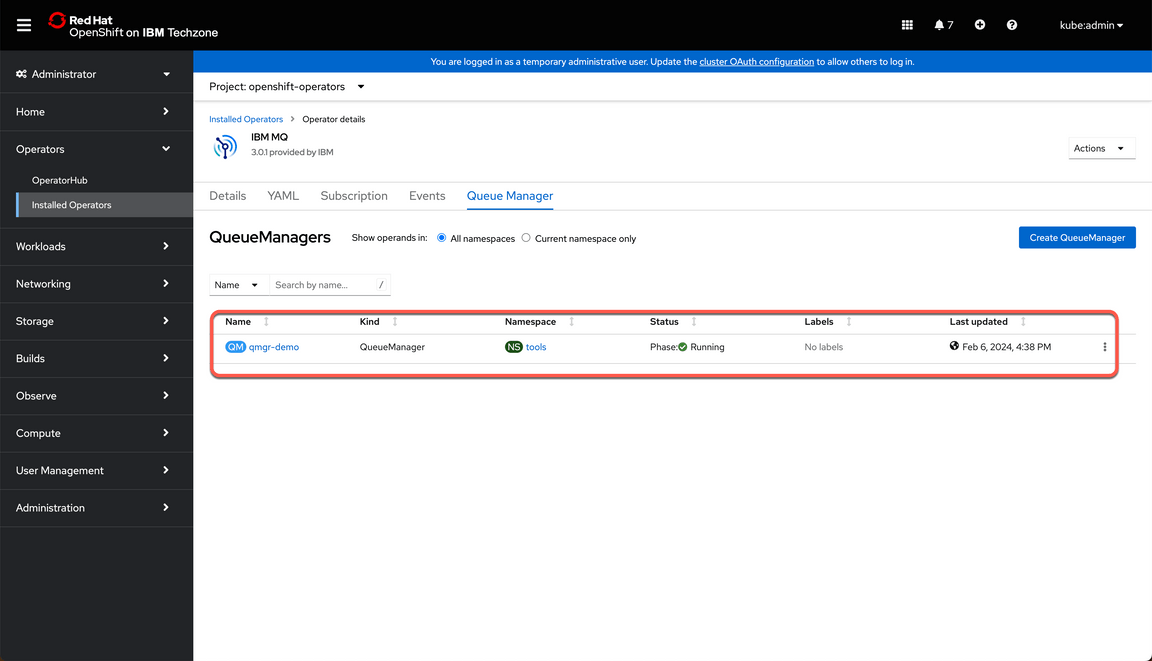

| Action 1.3.4 | Open the Queue Manager tab. |

| Action 1.3.5 | Show the available queue manager. |

2 - Deploy Uniform Cluster configuration

| 2.1 | Create configurations |

|---|---|

| Narration | The objective of a uniform cluster deployment is that applications can be designed for scale and availability and can connect to any of the queue managers within the uniform cluster. This removes any dependency on a specific queue manager, resulting in better availability and workload balancing of messaging traffic. Let’s create it now. First, we need to create the configuration for a uniform cluster with two queue managers. A ConfigMap with a config.ini is used by both queue managers. This identifies which of the queue managers are going to maintain the full repository of information about the cluster. Another ConfigMap holds the MQSC commands that all queue managers use to define the channels they will need to be members of the cluster. Each queue manager then has its own ConfigMap with an additional MQSC file defining the addresses for the channels it will use to join the cluster. |

| Action 2.1.1 | In a terminal window, open the cp4i-demo folder that you created in the Demo Preparation part, log in to your OpenShift environment, and run the command below. `oc apply -f resources/03d-qmgr-uniform-cluster-config.yaml` |

| Action 2.1.2 | In a terminal window, run the command below to prepare certificates for the queue managers. `scripts/10d-qmgr-uc-pre-config.sh` |
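
For orientation, the shared `config.ini` described in the narration might look roughly like the sketch below. This is not the content of `03d-qmgr-uniform-cluster-config.yaml`: the cluster name, queue manager names, and host names are hypothetical placeholders. The `AutoCluster` stanza and its keys are the ones IBM MQ uses for automatic uniform cluster configuration. The snippet only writes a sample file to a temporary directory so you can inspect it; nothing is applied to the cluster.

```shell
# Write an illustrative config.ini to a temporary directory.
# All names below (DEMOCLUSTER, QM01, QM02, host names) are assumptions made for this sketch.
sample_dir="$(mktemp -d)"
cat > "${sample_dir}/config.ini" <<'EOF'
AutoCluster:
   Repository1Name=QM01
   Repository1Conname=qm01-ibm-mq.cp4i.svc(1414)
   Repository2Name=QM02
   Repository2Conname=qm02-ibm-mq.cp4i.svc(1414)
   ClusterName=DEMOCLUSTER
   Type=Uniform
EOF

# List the queue managers that would hold the full repositories.
grep 'Repository[12]Name' "${sample_dir}/config.ini"
```

With only two queue managers, both act as full repositories, which is why each one appears in a `RepositoryNName` entry.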

| 2.2 | Deploy Queue Managers |

|---|---|

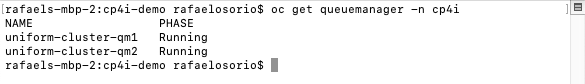

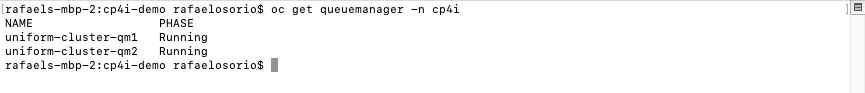

| Narration | Uniform clusters are a specific pattern of an IBM MQ cluster that provides a highly available and horizontally scaled small collection of queue managers. These queue managers are configured almost identically, so that an application can interact with them as a single group. This makes it easier to ensure each queue manager in the cluster is being used, by automatically ensuring application instances are spread evenly across the queue managers. Now we need to create our two queue managers. The QueueManager specifications for each of the queue managers just need to point to the ConfigMaps created earlier. Let’s do it! Great, now let’s confirm the instances have been deployed successfully before moving to the next step. |

| Action 2.2.1 | Run the command below. `oc apply -f instances/${CP4I_VER}/${OCP_TYPE}/13a-qmgr-uniform-cluster-qm1.yaml -n cp4i` |

| Action 2.2.2 | Run the command below. `oc apply -f instances/${CP4I_VER}/${OCP_TYPE}/13b-qmgr-uniform-cluster-qm2.yaml -n cp4i` |

| Action 2.2.3 | Run the command below. `oc get queuemanager -n cp4i` Note that this will take a few minutes, but at the end you should get a response like this. |
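
Rather than re-running `oc get queuemanager` by hand, the wait can be scripted. The helper below is a minimal sketch, assuming you are logged in with `oc` and that each QueueManager resource reports its state in a `status.phase` field with the value `Running` once it is ready. The function names, the 10-second interval, and the 60-attempt limit are arbitrary choices for this example.

```shell
# Succeed only if at least one phase is given and every phase is "Running".
all_running() {
  [ "$#" -gt 0 ] || return 1
  for phase in "$@"; do
    [ "$phase" = "Running" ] || return 1
  done
}

# Poll the QueueManager resources in a namespace (default: cp4i) until all are Running,
# giving up after 60 attempts spaced 10 seconds apart.
wait_for_qmgrs() {
  ns="${1:-cp4i}"
  i=0
  while [ "$i" -lt 60 ]; do
    # Word splitting of the phase list is intentional: one argument per queue manager.
    if all_running $(oc get queuemanager -n "$ns" -o jsonpath='{.items[*].status.phase}'); then
      echo "All queue managers in ${ns} are Running"
      return 0
    fi
    i=$((i + 1))
    sleep 10
  done
  echo "Timed out waiting for queue managers in ${ns}" >&2
  return 1
}
```

Calling `wait_for_qmgrs cp4i` after the two `oc apply` commands blocks until both queue managers are ready or the timeout is reached.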

| 2.3 | Deploy NGINX to serve CCDT |

|---|---|

| Narration | When an application needs to connect to multiple queue managers, it can do so using a client channel definition table (CCDT). Queue managers store client connection channel information in a client channel definition table. This information includes authentication rules you have defined for channels on the queue manager. The table is updated whenever a client connection channel is defined or altered. For this demo, we need to create the CCDT to be used by our application, and we need to deploy an NGINX instance to serve it. Voilà, the NGINX service was created to be used by our application. Now we can deploy our application. |

| Action 2.3.1 | Run the command below to create the CCDT to be used by the application. `oc apply -f resources/04a-nginx-ccdt-configmap.yaml` |

| Action 2.3.2 | Run the command below to deploy the NGINX instance that serves the CCDT. `oc apply -f resources/04b-nginx-deployment.yaml` |

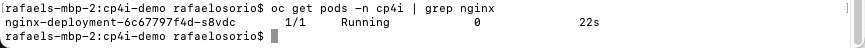

| Action 2.3.3 | Before moving to the next step, confirm the instance has been deployed successfully by running the following command. `oc get pods -n cp4i | grep nginx` You should get a response like this. |

| Action 2.3.4 | Run the command below to create the NGINX service to be used by the application. `oc apply -f resources/04c-nginx-service.yaml` |

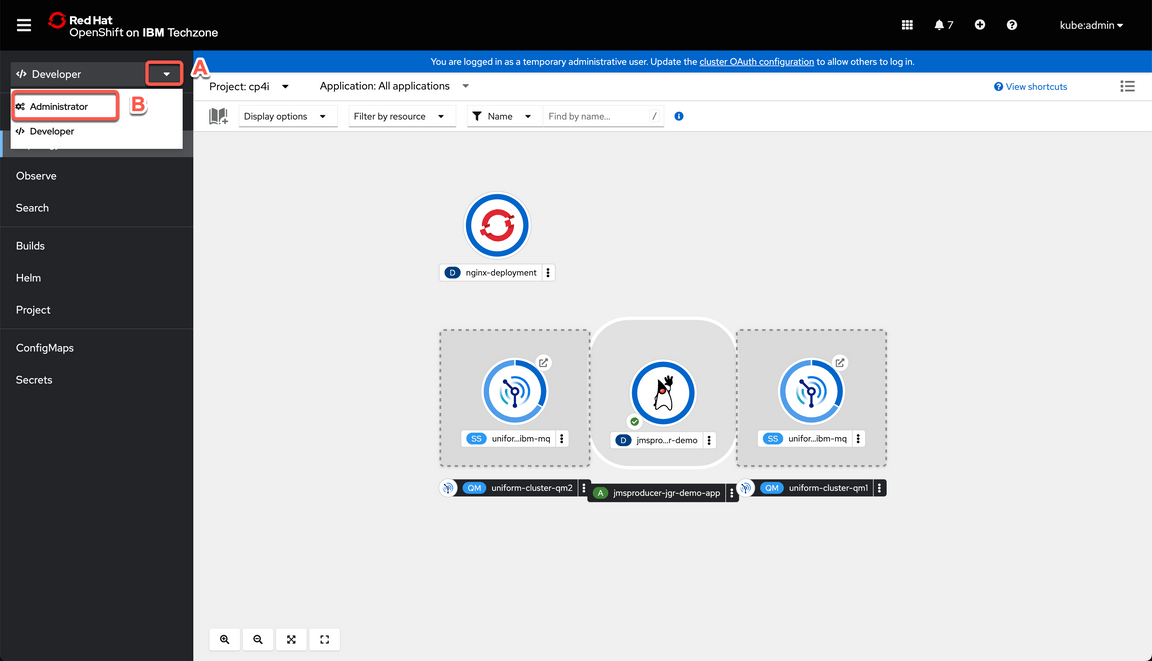
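
To make the CCDT idea concrete, here is a rough sketch of what a JSON-format CCDT for two queue managers could contain. It is not the content of `04a-nginx-ccdt-configmap.yaml`: the channel name, host names, and the `ANY_QM` group name are hypothetical. The snippet writes the sample to a temporary directory only.

```shell
# Write an illustrative JSON CCDT to a temporary directory.
# Channel name, hosts, and the ANY_QM group name are assumptions made for this sketch.
ccdt_dir="$(mktemp -d)"
cat > "${ccdt_dir}/ccdt.json" <<'EOF'
{
  "channel": [
    {
      "name": "DEMO.SVRCONN",
      "type": "clientConnection",
      "clientConnection": {
        "connection": [{ "host": "qm01-ibm-mq.cp4i.svc", "port": 1414 }],
        "queueManager": "ANY_QM"
      },
      "connectionManagement": { "clientWeight": 1, "affinity": "none" }
    },
    {
      "name": "DEMO.SVRCONN",
      "type": "clientConnection",
      "clientConnection": {
        "connection": [{ "host": "qm02-ibm-mq.cp4i.svc", "port": 1414 }],
        "queueManager": "ANY_QM"
      },
      "connectionManagement": { "clientWeight": 1, "affinity": "none" }
    }
  ]
}
EOF

# Count how many endpoints are listed under the shared group name.
grep -c '"queueManager": "ANY_QM"' "${ccdt_dir}/ccdt.json"
```

Listing the same channel once per queue manager under a shared `queueManager` group name, with `affinity` set to `none`, is what lets a client connect to any member of the group. A CCDT for a uniform cluster typically also lists each queue manager under its own name, so that a client can be directed to a specific member when connections are rebalanced.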

3 - Deploy MQ Application

| 3.1 | Check the Topology |

|---|---|

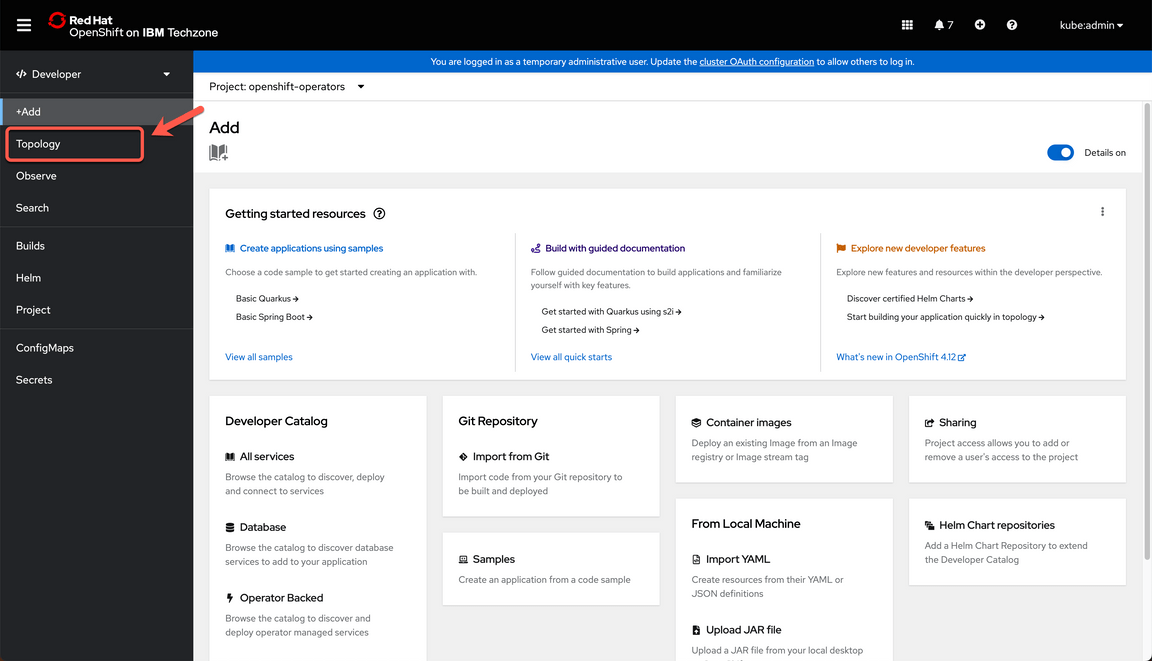

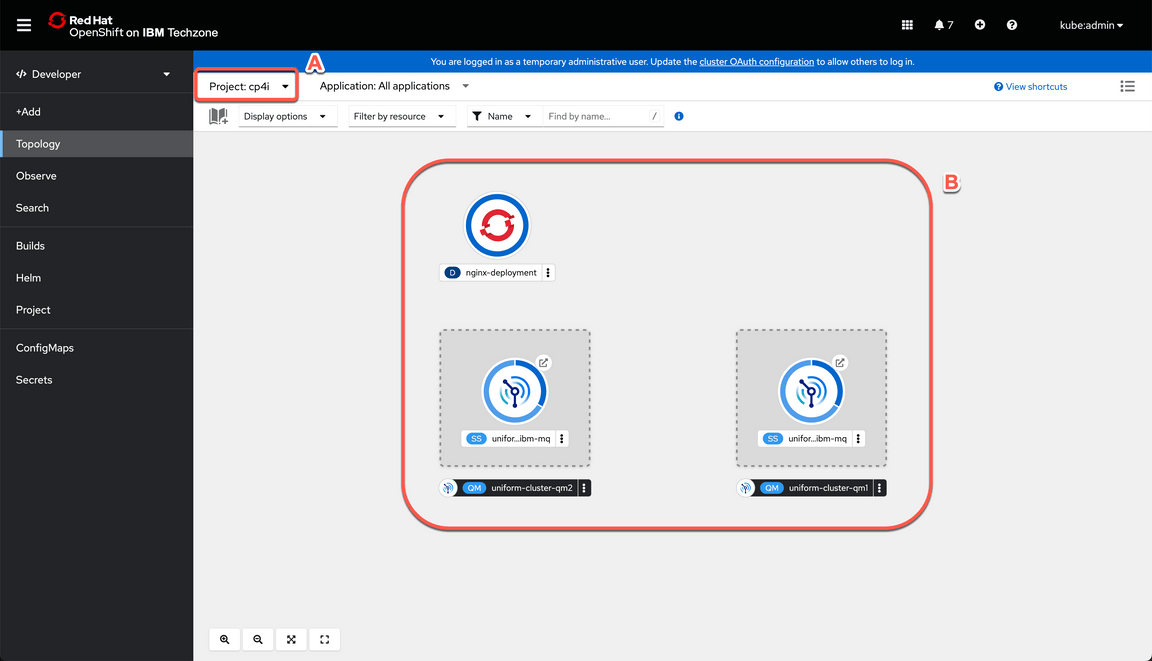

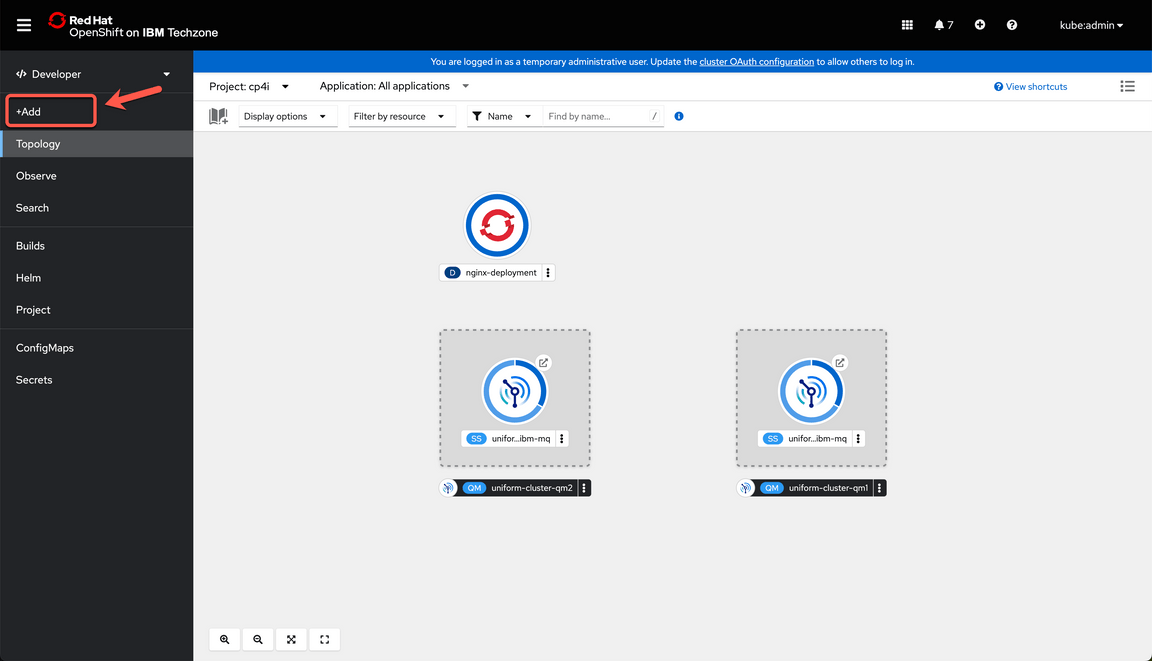

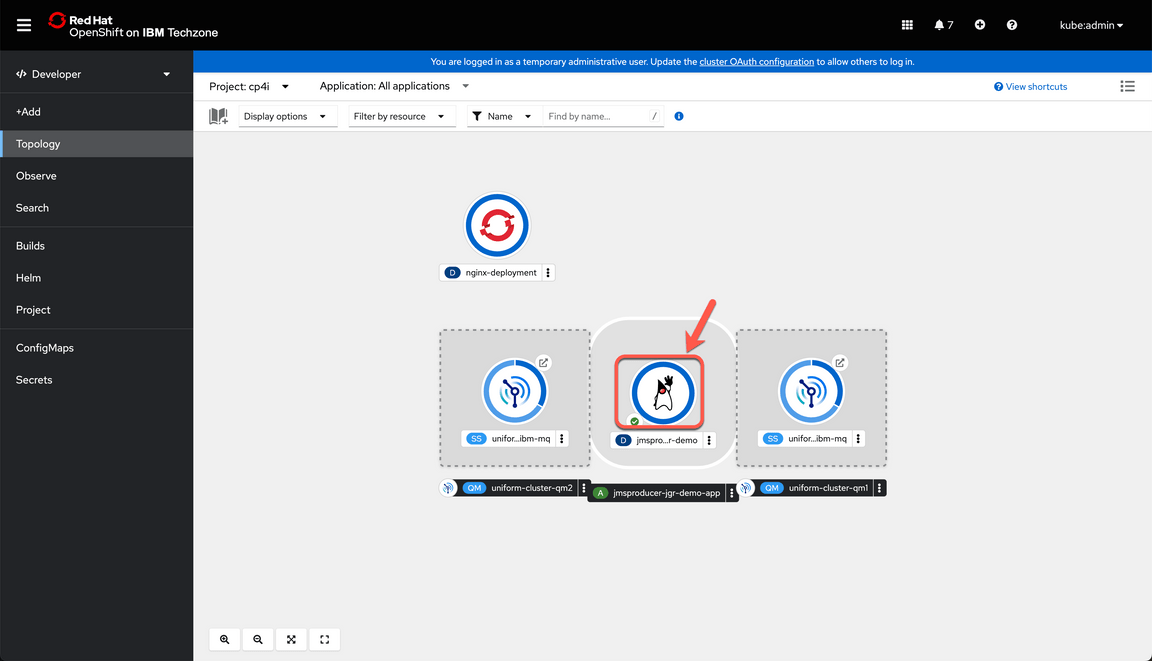

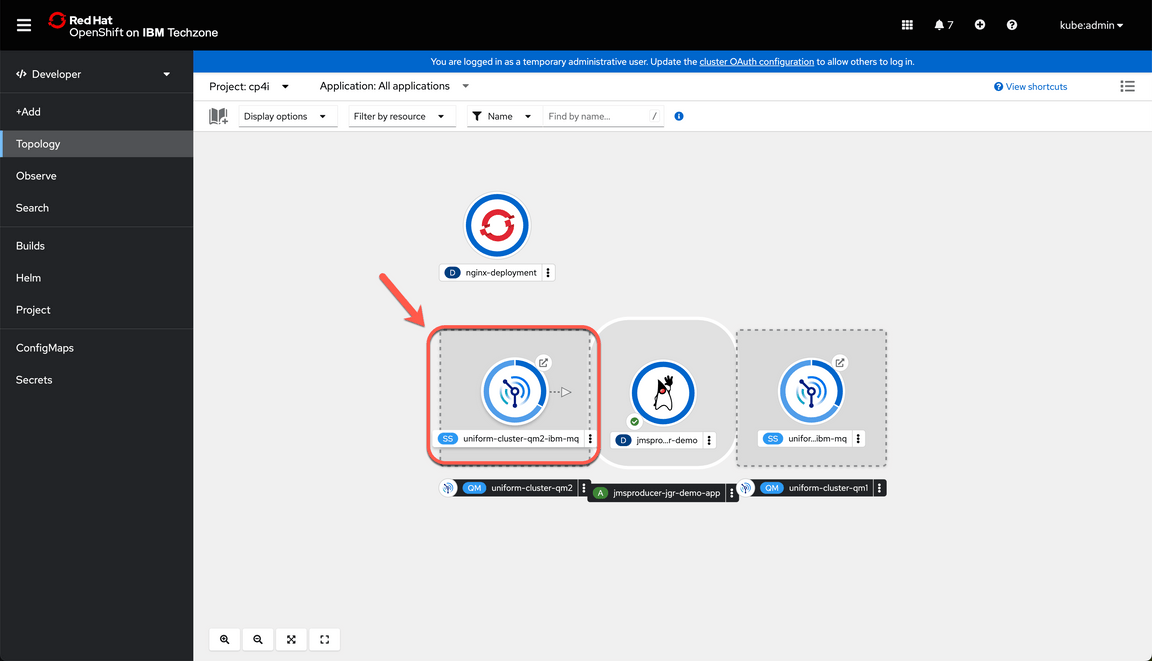

| Narration | Now that the uniform cluster is running, we can proceed to deploy the application that will be interacting with the queue managers. First, we will switch to the “Developer” perspective. In this perspective you can view the queue managers. Here you will see the tiles representing each queue manager. |

| Action 3.1.1 | Back on the OpenShift Web Console page, click the Administrator option (A) and select the Developer perspective (B).

Note - If you see the Welcome to the Developer Perspective dialog, go ahead and close it. |

| Action 3.1.2 | Open the Topology page. |

| Action 3.1.3 | Filter by the cp4i project (A) and show the topology (B). |

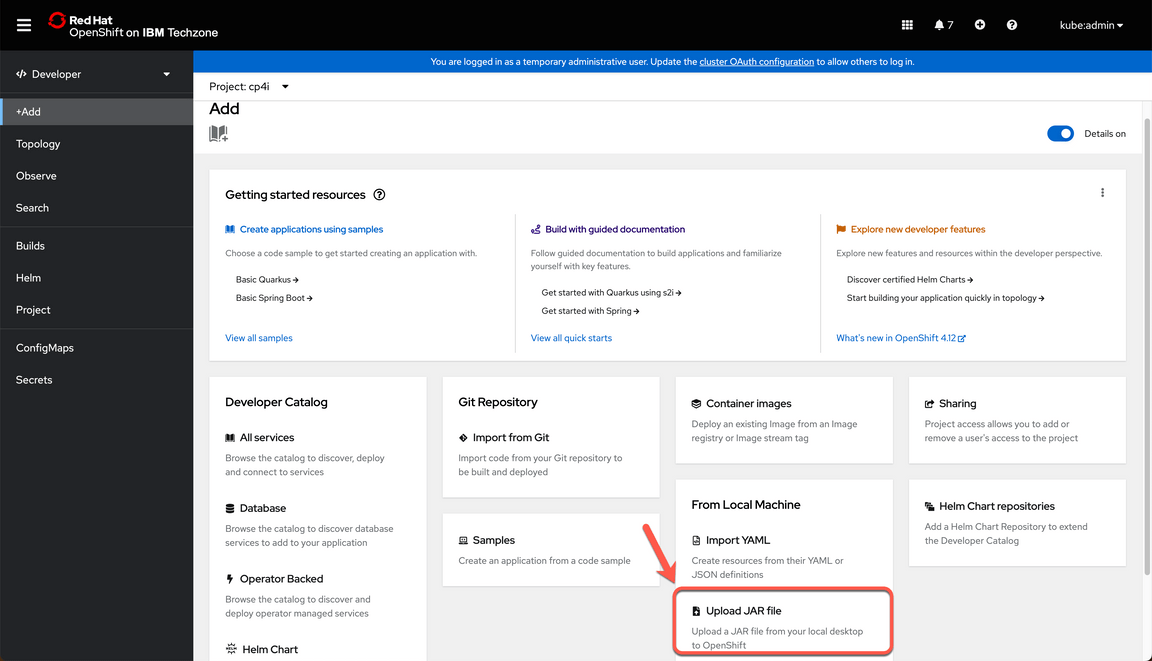

| 3.2 | Deploy JAR file |

|---|---|

| Narration | For demo purposes, we have pre-created the JMS application that will use our Queue Managers. Let’s deploy it. |

| Action 3.2.1 | Click +Add in the left menu. |

| Action 3.2.2 | Click Upload JAR file. |

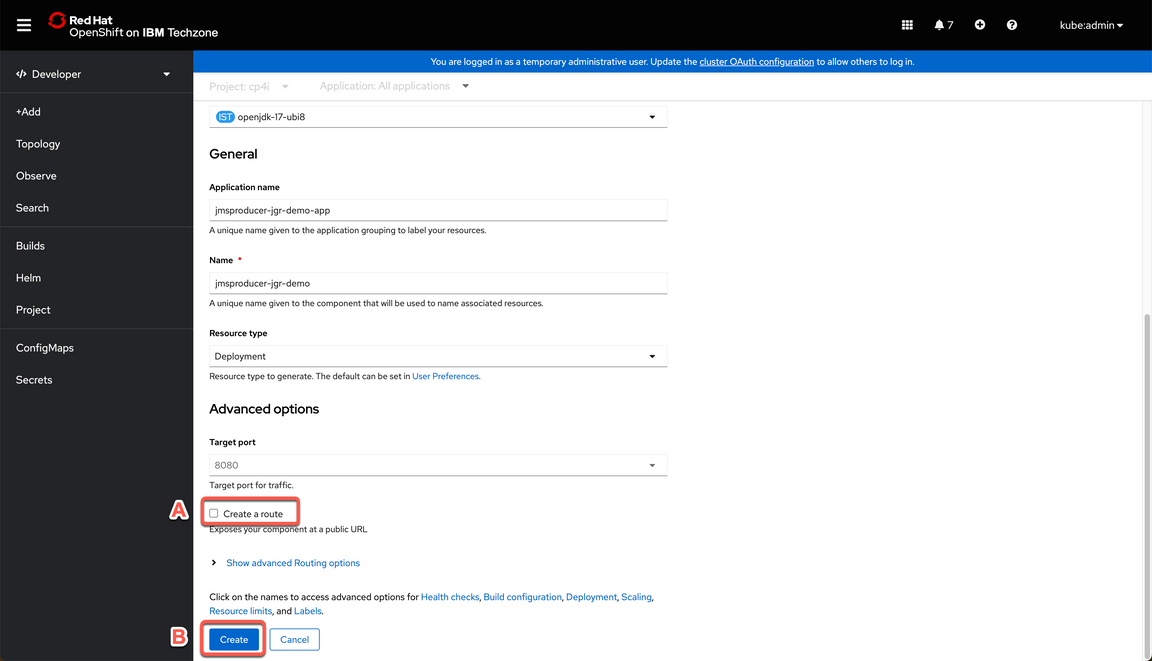

| Action 3.2.3 | Select Browse (A) and follow the dialogs to select the jmsproducer-jgr-demo.jar file (B) (see the demo preparation document for how to get the JAR file). |

| Action 3.2.4 | Scroll down and uncheck Create a route (A), then click the Create button (B). |

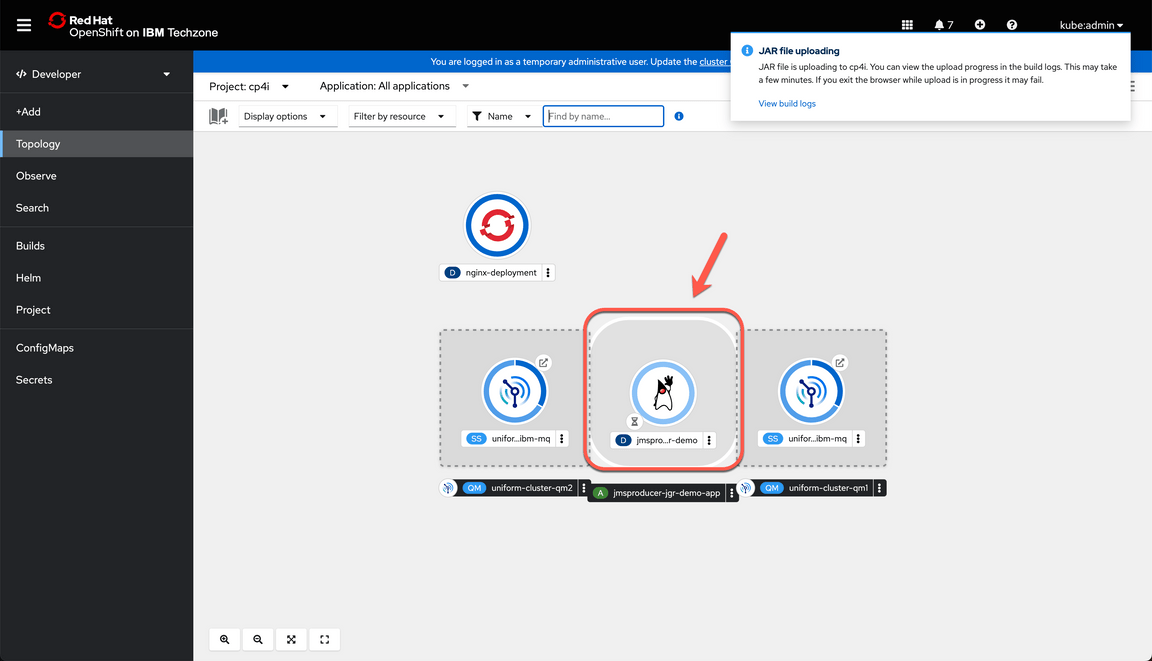

| Action 3.2.5 | Show the tile representing the deployment in the Topology view. |

| 3.3 | Review Deployment |

|---|---|

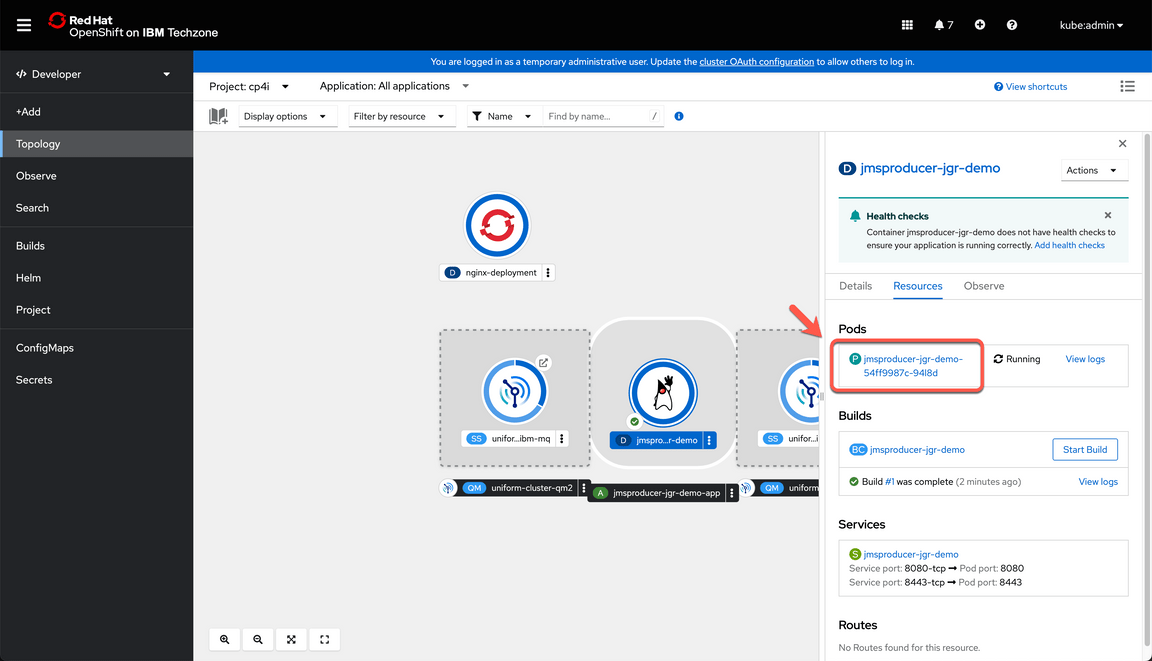

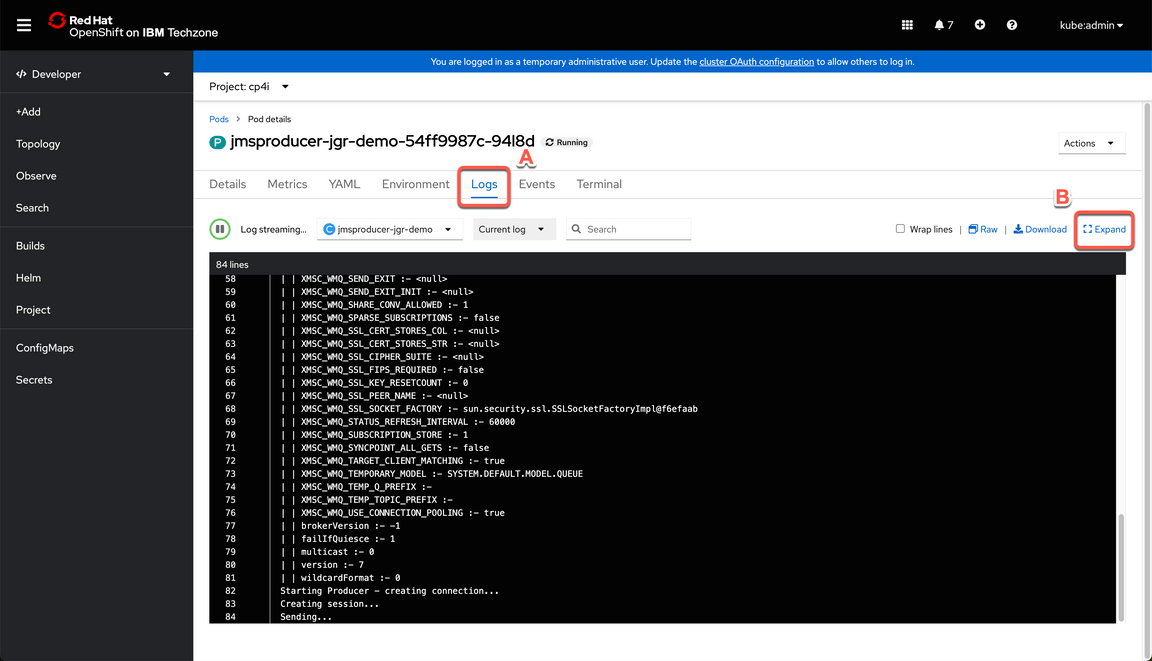

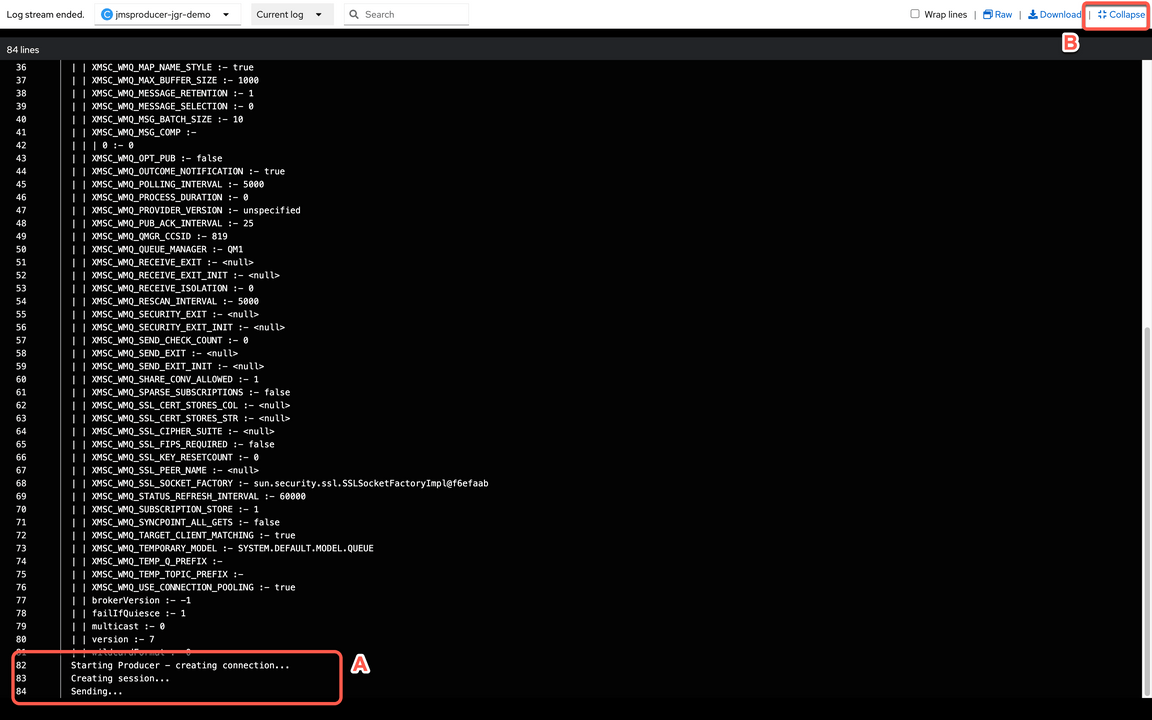

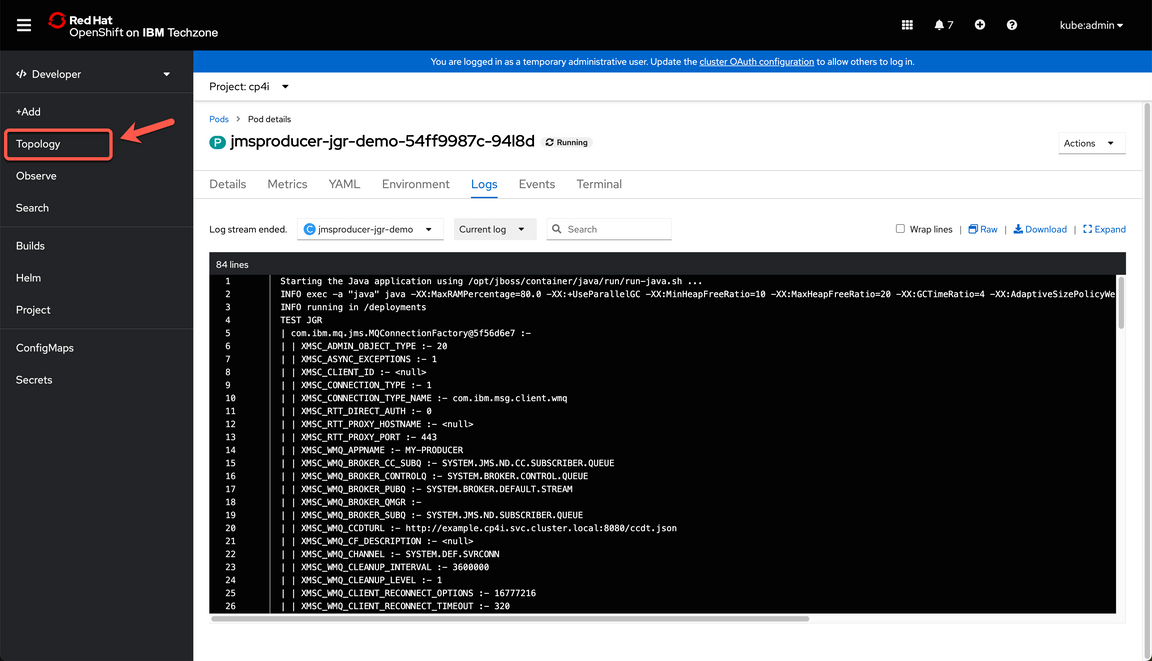

| Narration | Now, let’s review our application connection. From here, we can easily check the application log. Great, our application was able to connect to a queue manager and it is sending messages. |

| Action 3.3.1 | After a few seconds, click the tile representing the deployment to display the pod. |

| Action 3.3.2 | Click the pod name. |

| Action 3.3.3 | On the pod page, go to the Logs tab (1) and click Expand (2) to get a full view of the log. |

| Action 3.3.4 | Take your time reviewing the log. At the bottom you will find a message saying “Sending…”, confirming that the application was able to connect to a queue manager and is sending messages (A). When done, collapse the log window (B). |

4 - Validate Uniform Cluster connectivity

| 4.1 | Explore the Queue Manager 2 |

|---|---|

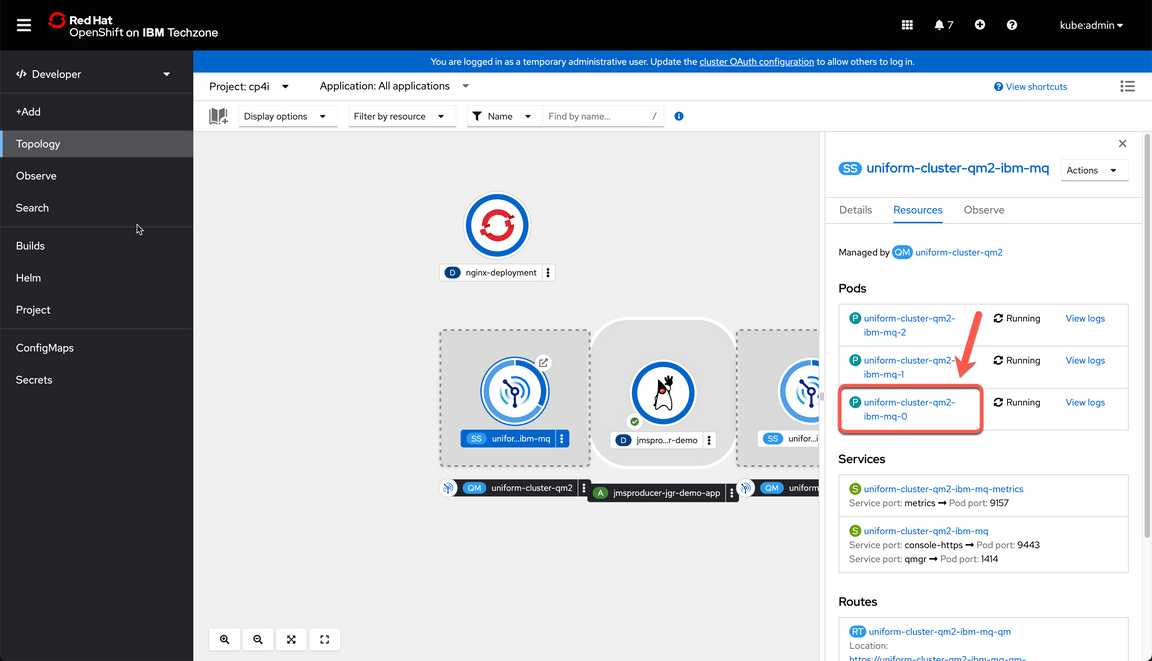

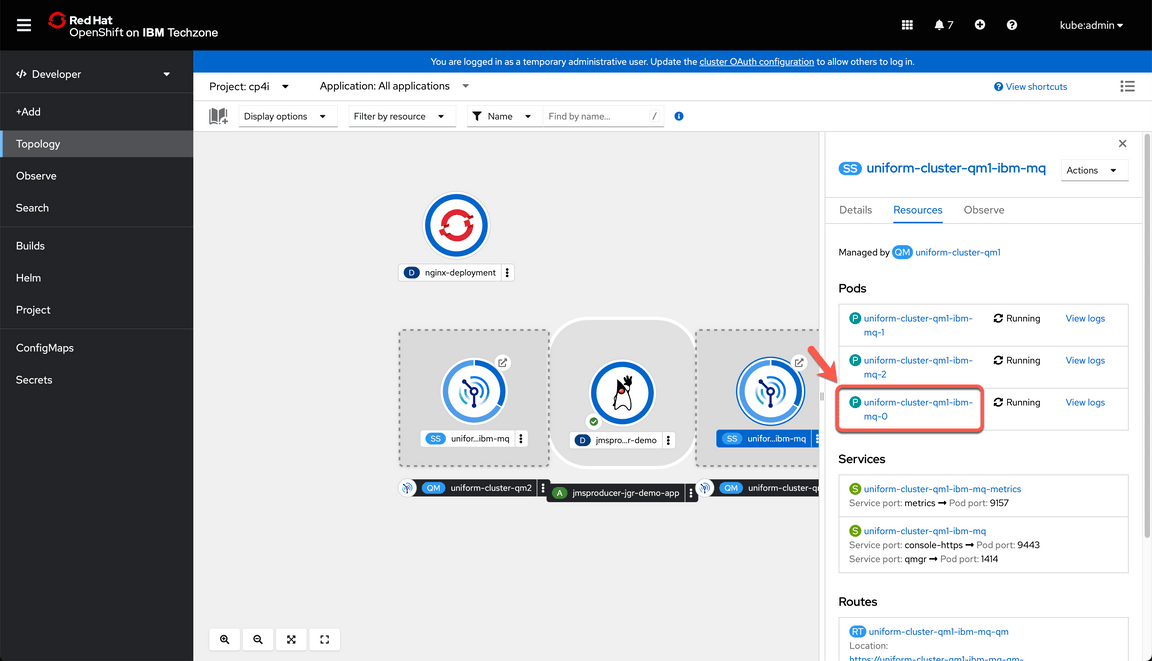

| Narration | Now that the MQ application is deployed, let’s check how it behaves with the uniform cluster. Let’s open Queue Manager 2. The pod ending with 0 is the active instance by default, so let’s explore it. |

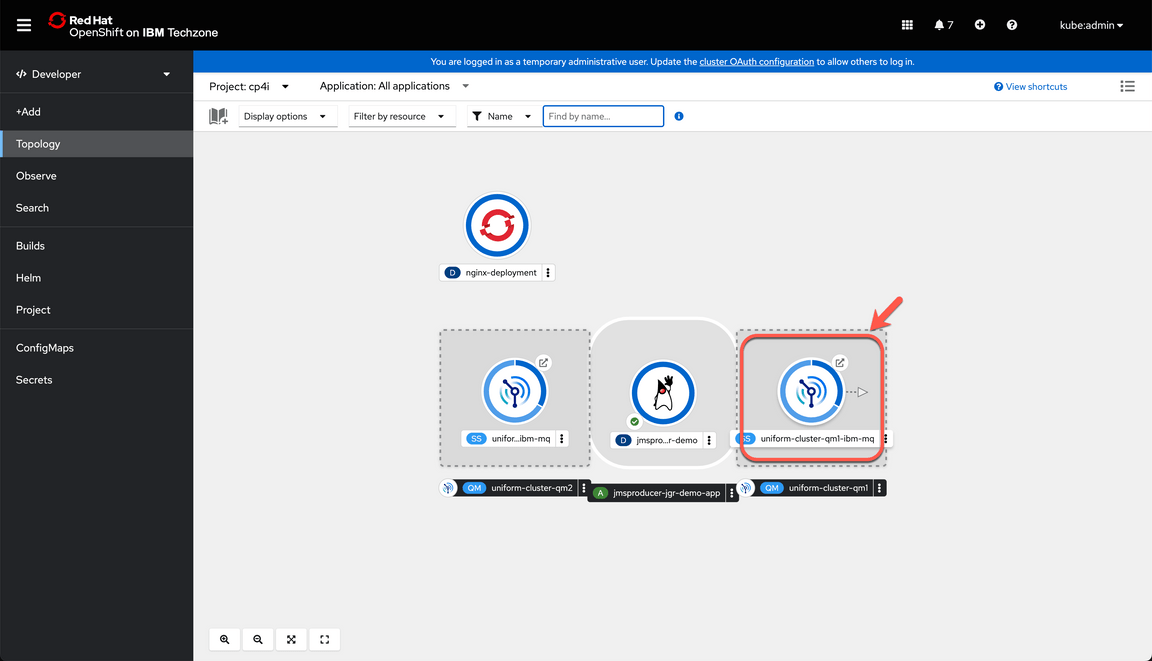

| Action 4.1.1 | Navigate back to the Topology page. |

| Action 4.1.2 | Select the tile that represents QM02. |

| Action 4.1.3 | Select the pod ending with 0, which is the active instance by default. |

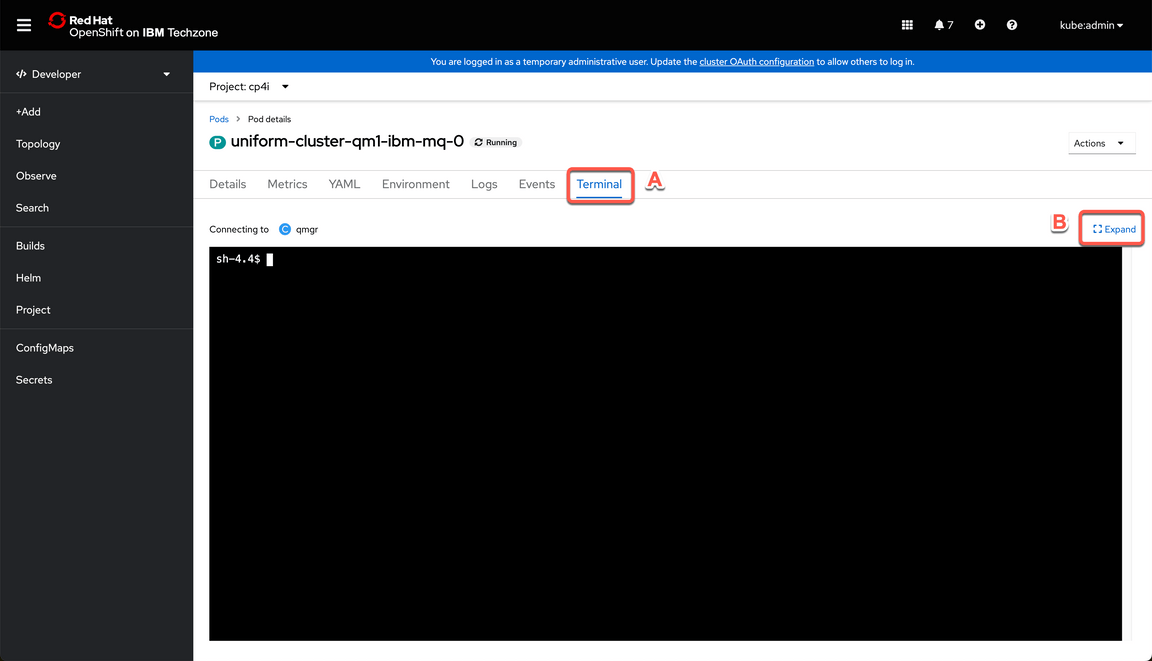

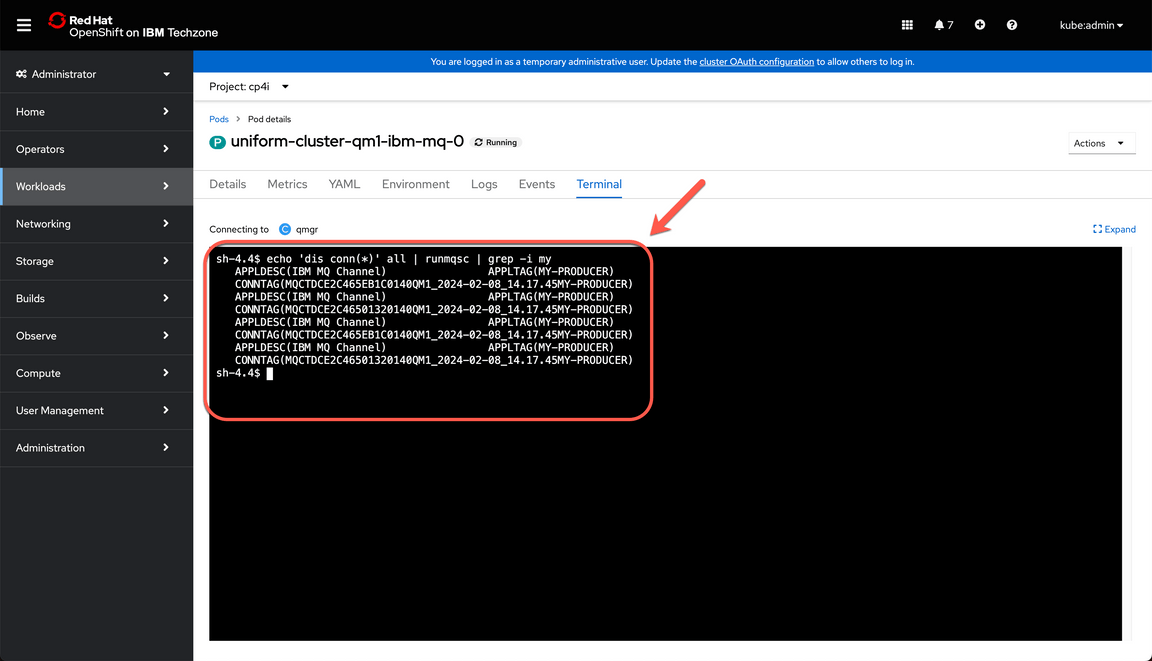

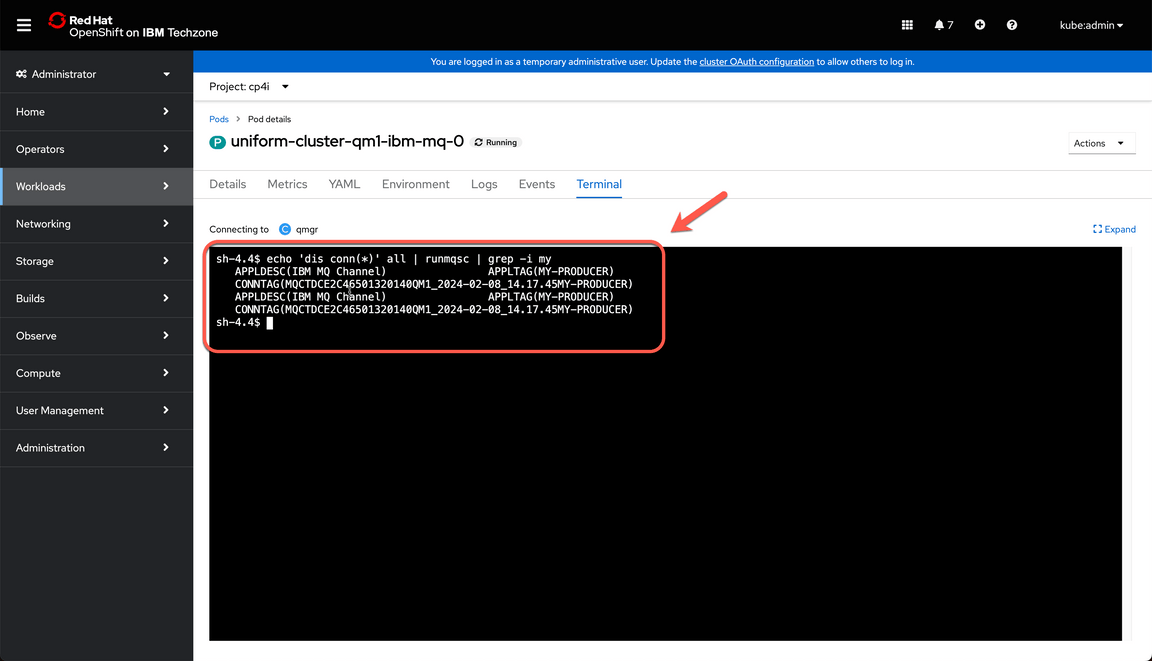

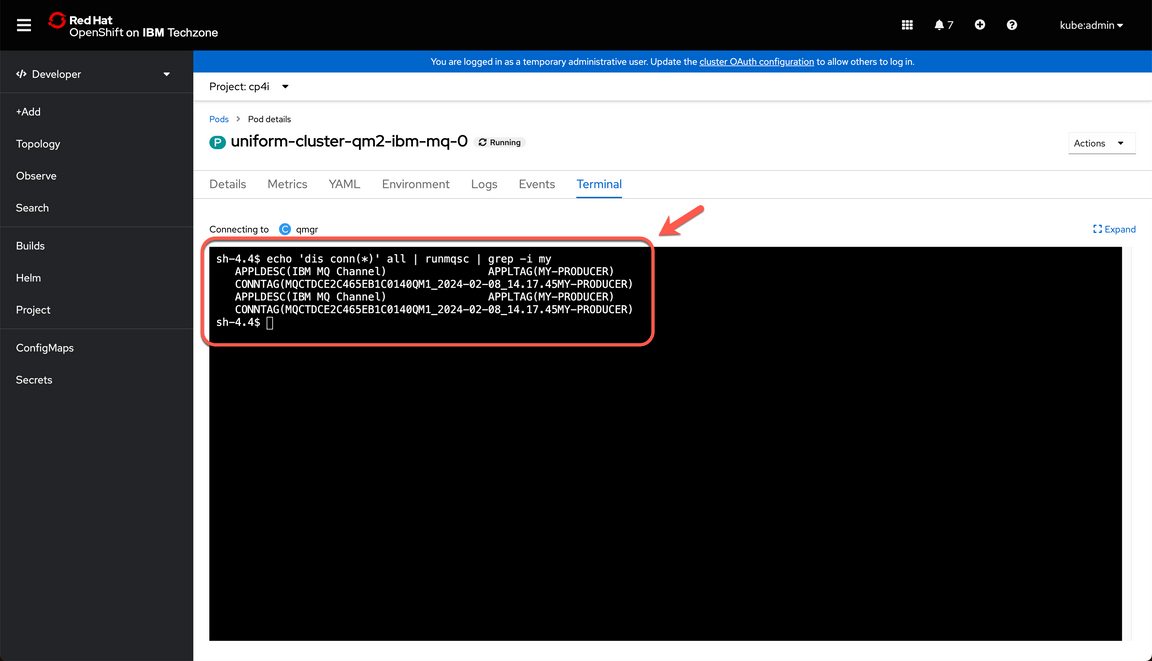

| 4.2 | Review connections to QM02 |

|---|---|

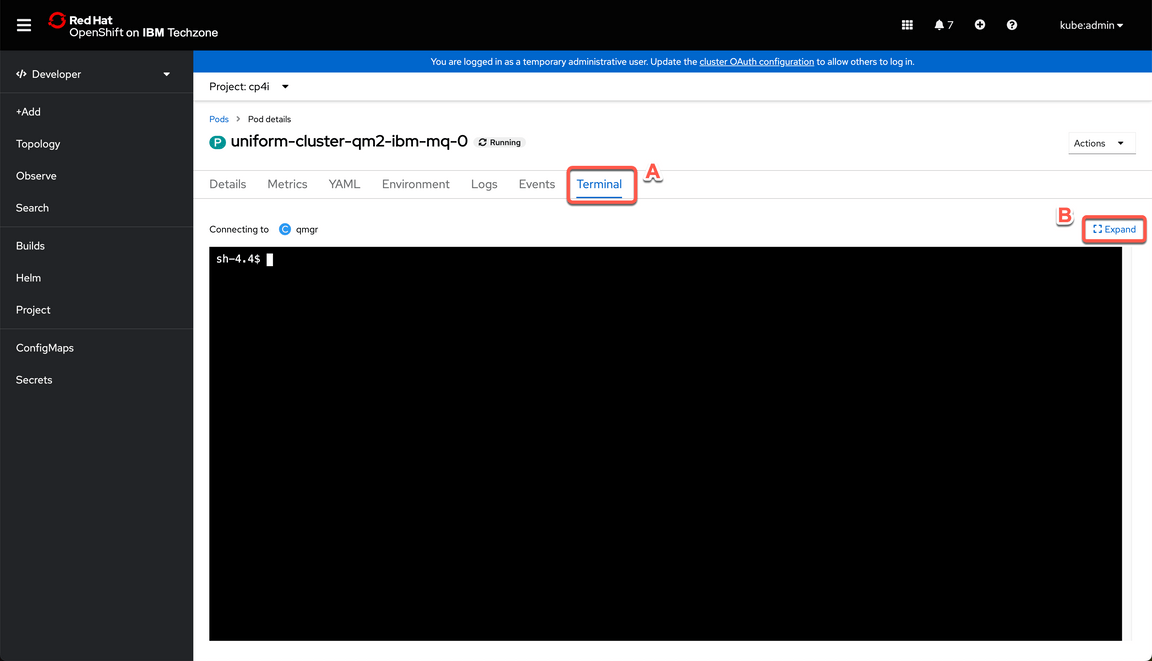

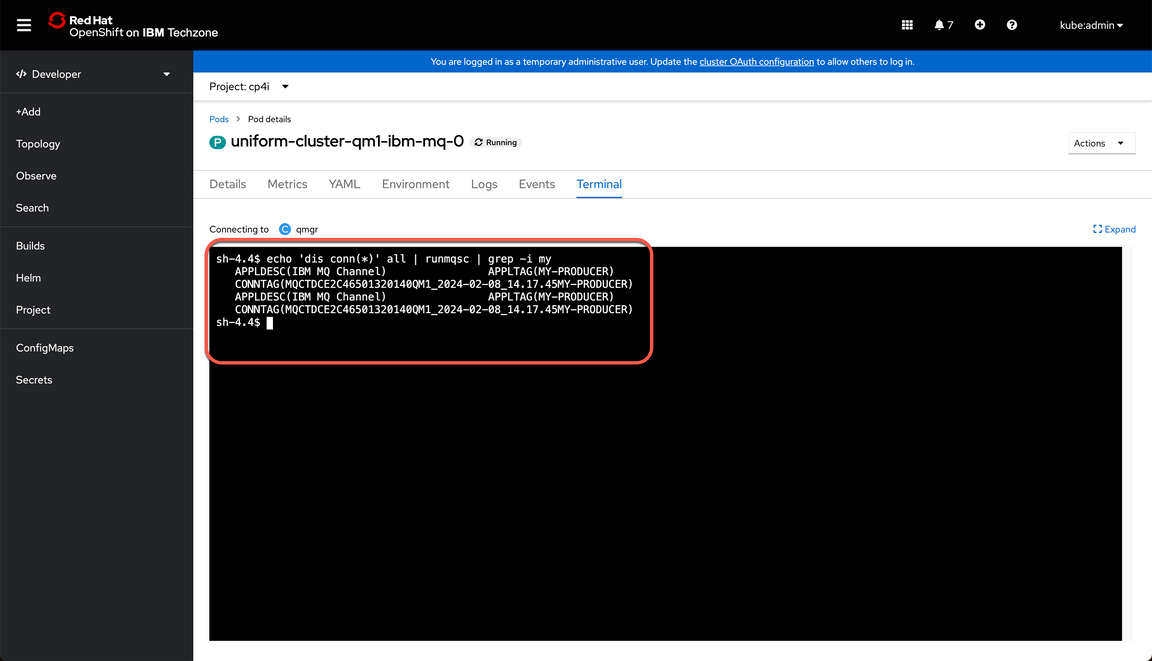

| Narration | To check the connection status, we will use the “display connections” command and filter by the MQ application name, “MY-PRODUCER”. We will execute the command directly from the terminal in each MQ pod. Right now, we don’t have any connections in this pod. But in the next step we will get a better picture of how the connections are distributed. |

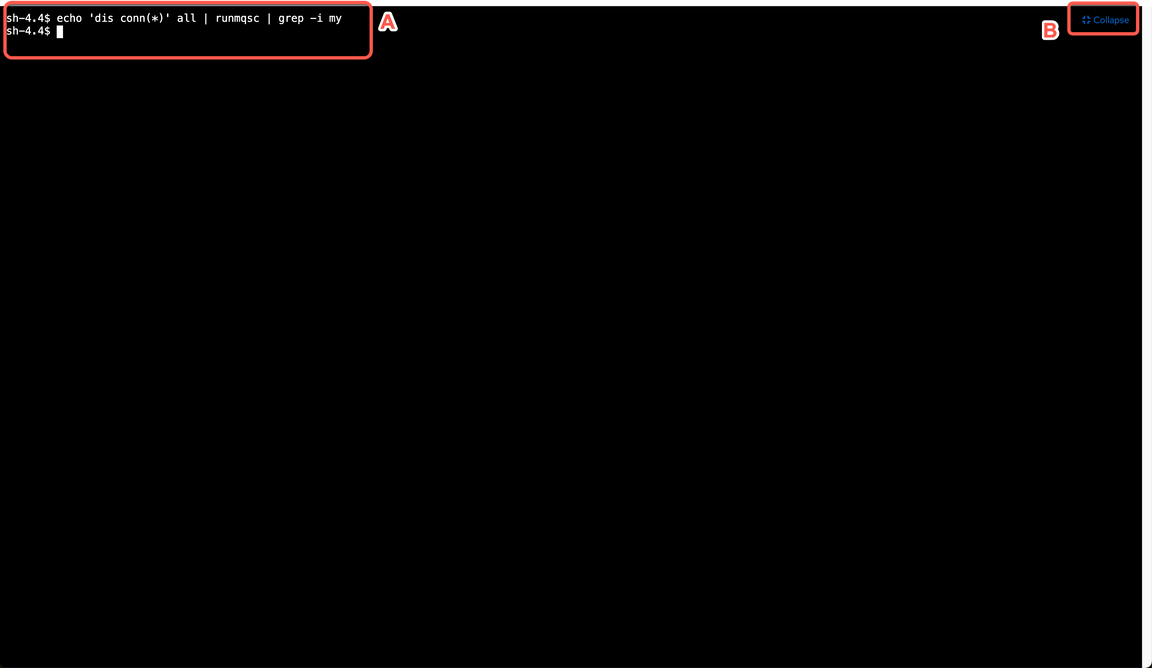

| Action 4.2.1 | Open the Terminal tab (A) and click Expand (B). |

| Action 4.2.2 | Enter the command below in your terminal window (if you prefer, you can paste it using the context menu) and press Enter to check how many active connections associated with our application are in this queue manager. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 4.2.3 | Show that there are no connections to this queue manager (A). Once you are done, you can Collapse (B) the terminal again. |

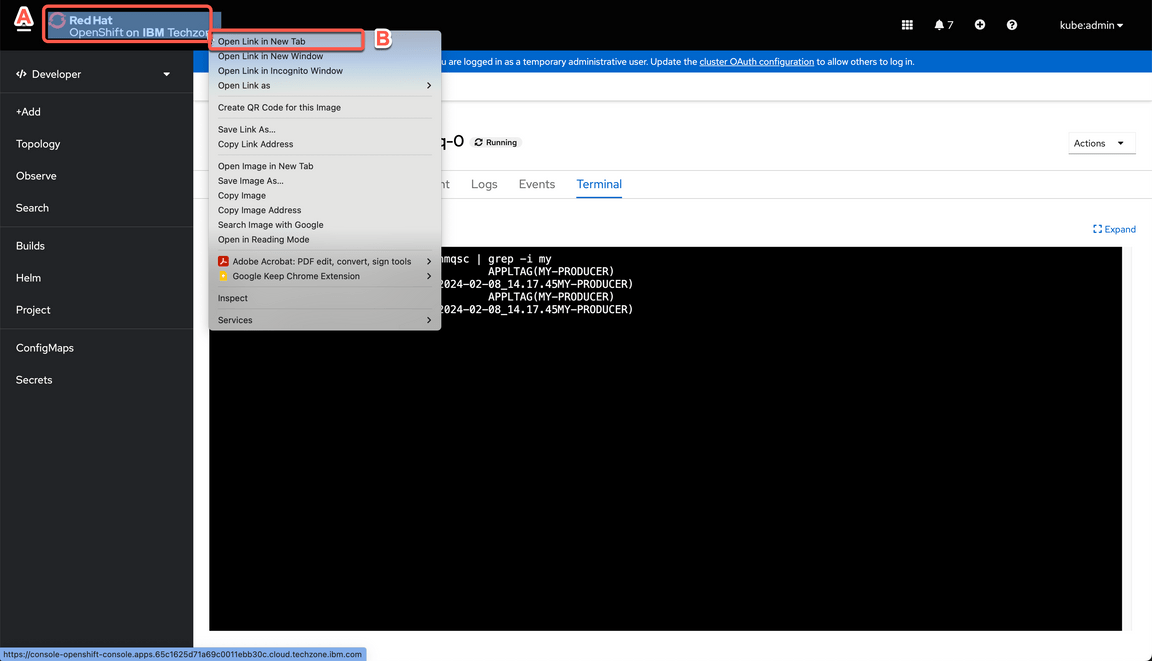
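
If you prefer a number to a screenful of output, the same check can be reduced to a count. The helper below is a sketch that reads `runmqsc` output on standard input and counts the lines whose `APPLTAG` mentions the application name. It assumes each connection reports its application name in an `APPLTAG(...)` attribute, as `dis conn(*) all` does; the function name is made up for this example.

```shell
# Count connections whose APPLTAG contains the given application name (case-insensitive).
# Reads runmqsc output from standard input; the default name comes from the demo narration.
count_app_conns() {
  app="${1:-MY-PRODUCER}"
  # grep -c still prints 0 when nothing matches, so ignore its non-zero exit status.
  grep -i 'APPLTAG(' | grep -ic -- "$app" || true
}
```

After defining the function in the pod terminal, `echo 'dis conn(*) all' | runmqsc | count_app_conns` prints the number of matching connections on that queue manager.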

| 4.3 | Explore the Queue Manager 1 |

|---|---|

| Narration | Now let’s explore Queue Manager 1. Let’s repeat the same procedure as before to select the active pod. |

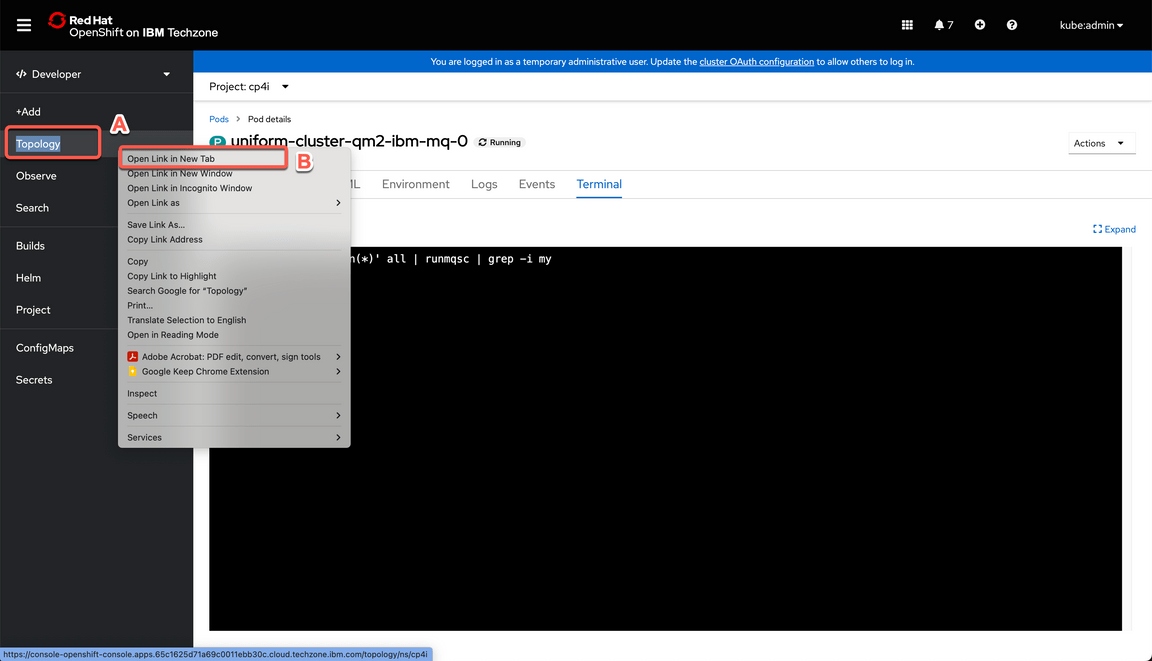

| Action 4.3.1 | Right-click the Topology menu (A) and select Open Link in New Tab (B) to keep a window open for each queue manager, since you may need to go back and forth. |

| Action 4.3.2 | Select the tile that represents QM01. |

| Action 4.3.3 | Select the pod ending with 0. |

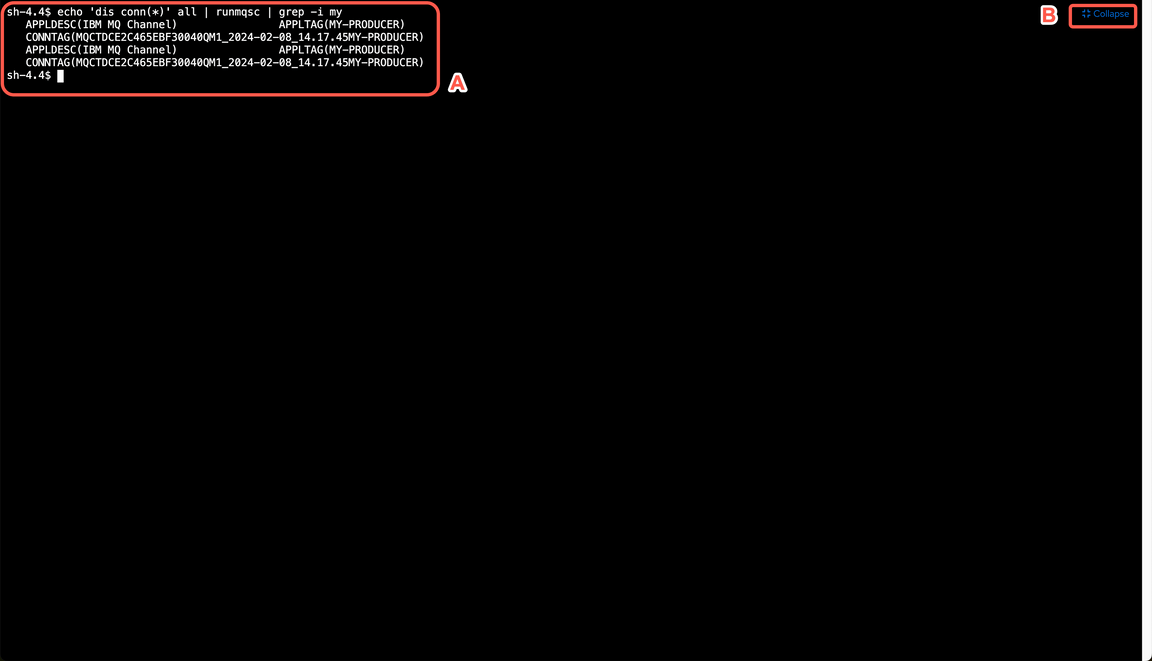

| 4.4 | Review connections to QM01 |

|---|---|

| Narration | Now let’s check the connection status in this MQ pod. This time we see a couple of active connections, proving that the application we deployed is connected to the cluster. |

| Action 4.4.1 | Open the Terminal tab (A) and click Expand (B). |

| Action 4.4.2 | Enter the command below in your terminal window (if you prefer, you can paste it using the context menu) and press Enter to check how many active connections associated with our application are in this queue manager. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 4.4.3 | Show the connections available (A). Once you are done, you can Collapse (B) the terminal again.

Note - In your case the result could be the opposite, since we have no affinity defined and the application will connect to any queue manager. Because we only have one instance, there will only be a connection to one queue manager at a time. |

5 - Scale MQ Application

| 5.1 | Increase the number of instances |

|---|---|

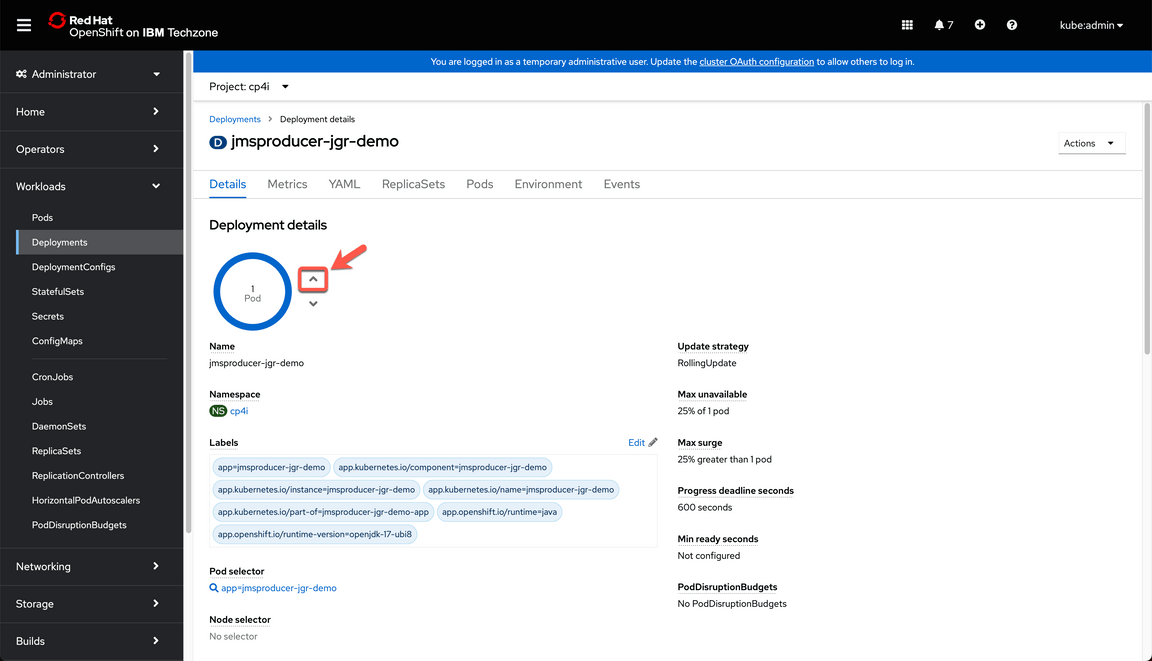

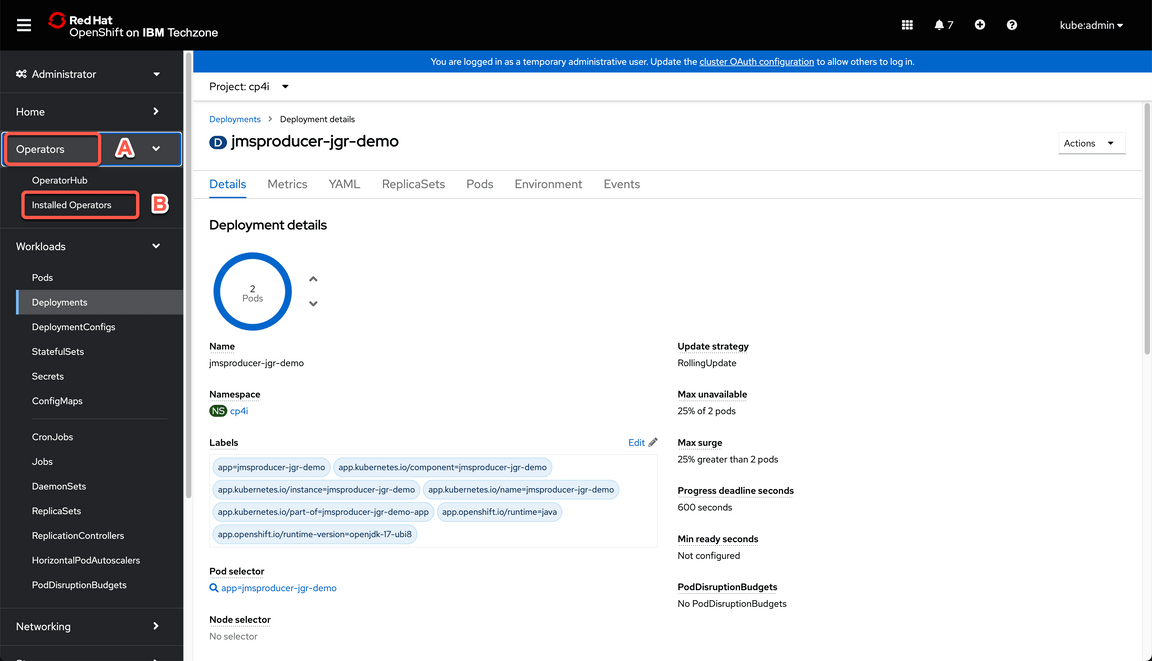

| Narration | At the moment, our application is running in a single pod and therefore it is only connected to one of the queue managers. But what if the workload increases and we need to scale the application? Let’s simulate the scenario and see how the connections are distributed. Let’s explore the Deployments view of our application. Here we can see there is only one pod. Let’s increase it to have two instances. |

| Action 5.1.1 | Right-click the Red Hat OpenShift logo (A) and select Open Link in New Tab (B) to keep a window open. |

| Action 5.1.2 | Change to the Administrator perspective. |

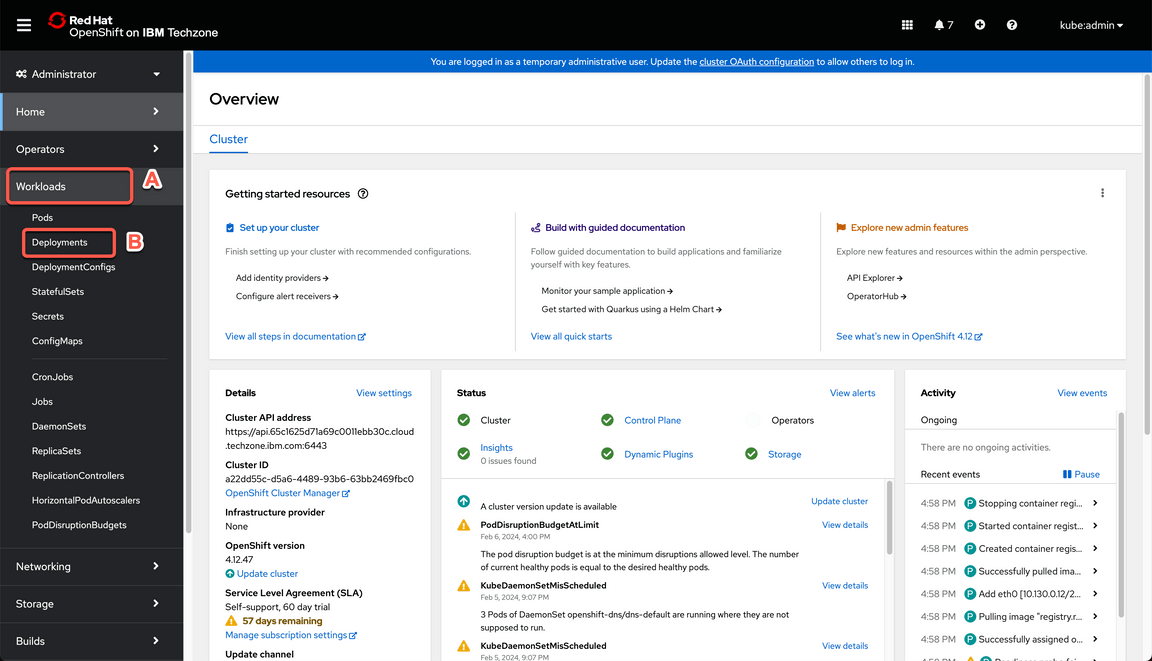

| Action 5.1.3 | Open the Workloads menu (A) and select Deployments (B). |

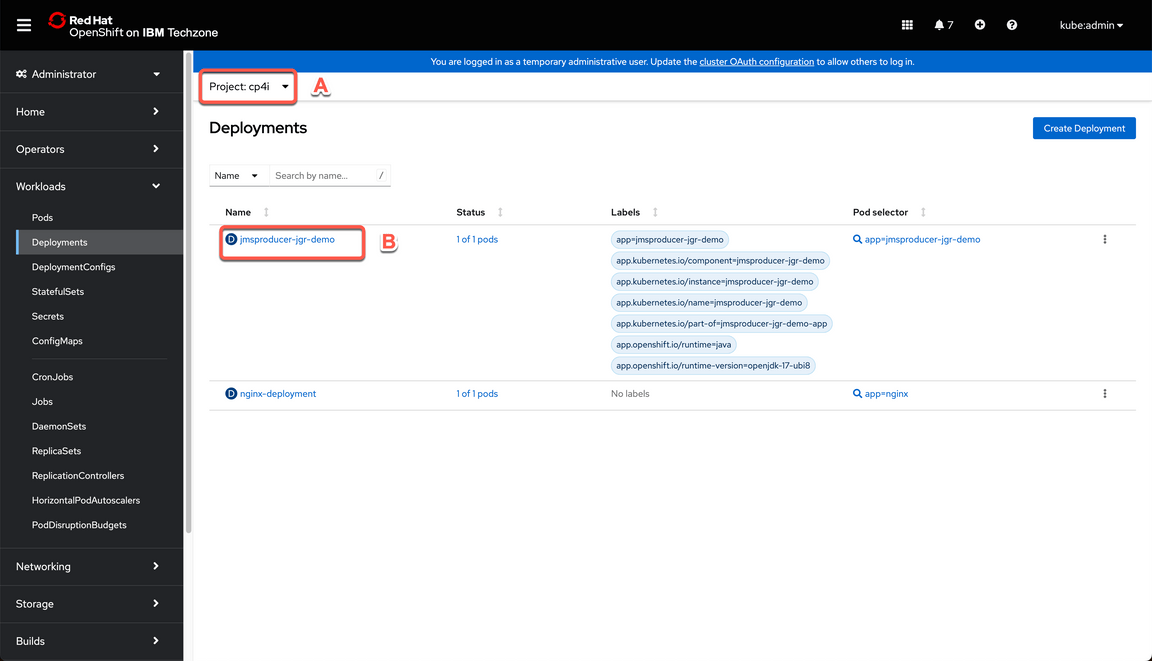

| Action 5.1.4 | Check that you are in the cp4i project (A). Click the jmsproducer deployment (B). |

| Action 5.1.5 | Click the up arrow icon to increase the number of instances to two. |

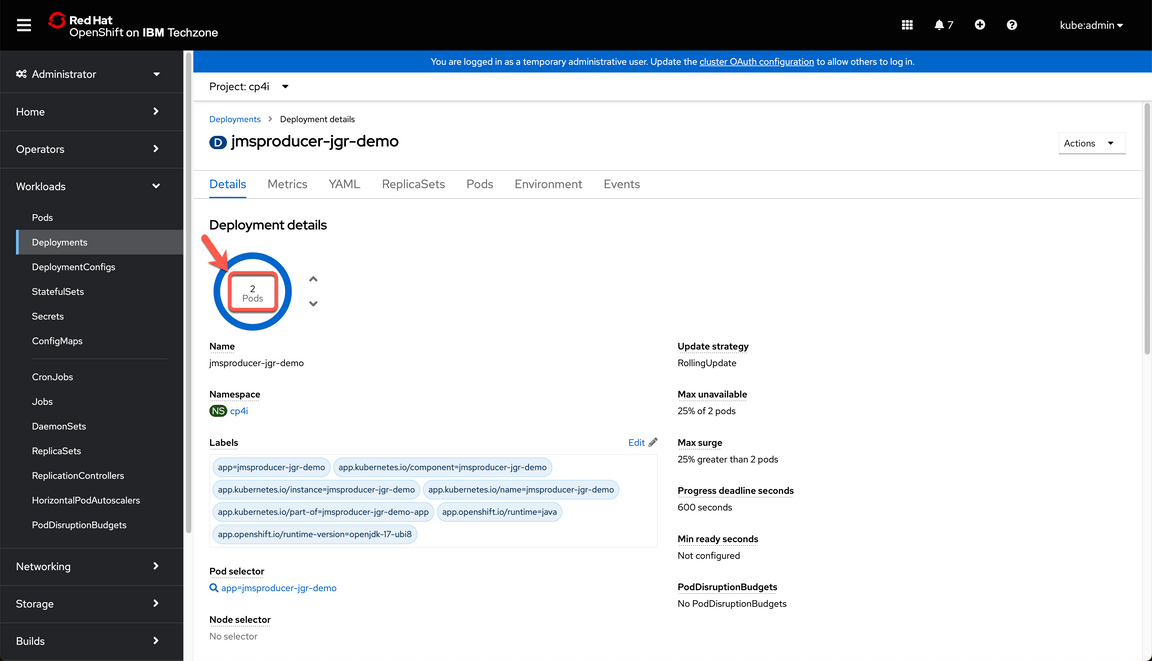

| Action 5.1.6 | Wait a few seconds until the number of pods is updated to two. |
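
The same scaling step can be done from the command line instead of the console. This is a minimal sketch, assuming the deployment is named `jmsproducer` as shown in the Deployments list and lives in the `cp4i` project; the wrapper function name is made up for this example.

```shell
# Scale the producer deployment to the requested number of replicas (default: 2).
# The deployment name and namespace are taken from this demo; adjust them if yours differ.
scale_producer() {
  replicas="${1:-2}"
  oc scale deployment/jmsproducer --replicas="$replicas" -n cp4i
}
```

Running `scale_producer 2` matches the console step above, and `scale_producer 1` returns to the original single instance. No connection details need to change in either direction, because every instance reads the same CCDT.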

| 5.2 | Review connectivity |

|---|---|

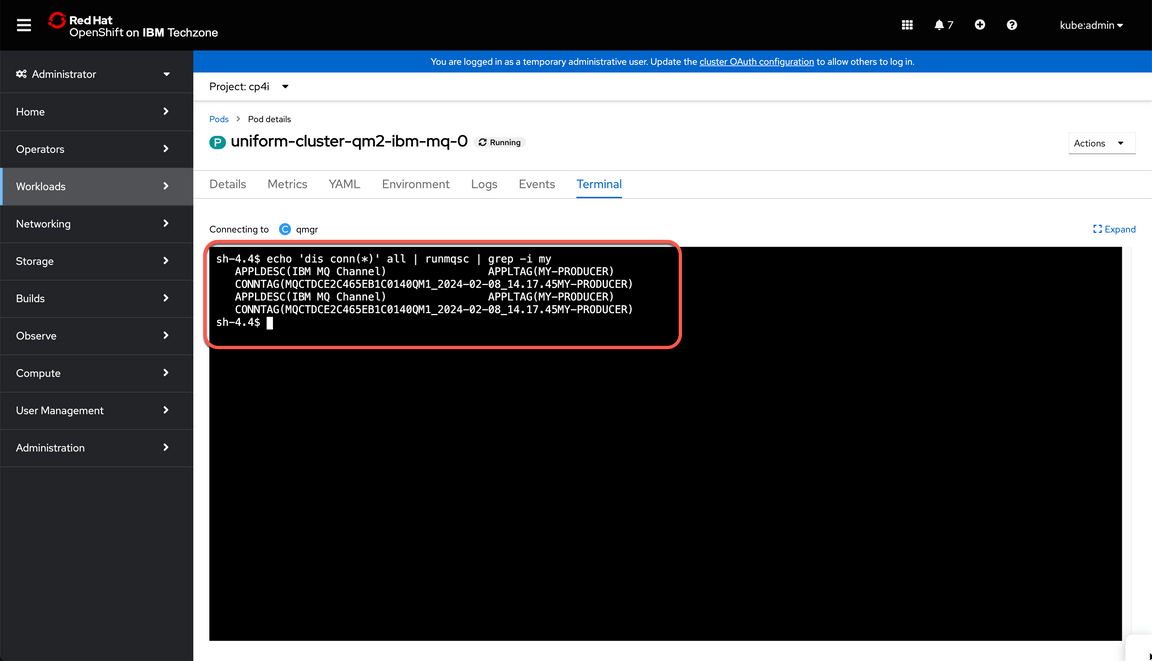

| Narration | Now, let’s check how many connections we have per queue manager. This time we should see that each queue manager has a couple of connections. |

| Action 5.2.1 | Back in the QM01 browser tab, refresh the terminal page, enter the command below again, and press Enter. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 5.2.2 | Show that you have multiple connections in QM01. |

| Action 5.2.3 | Back in the QM02 browser tab, enter the command below again and press Enter. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 5.2.4 | Show that you have multiple connections in QM02. |

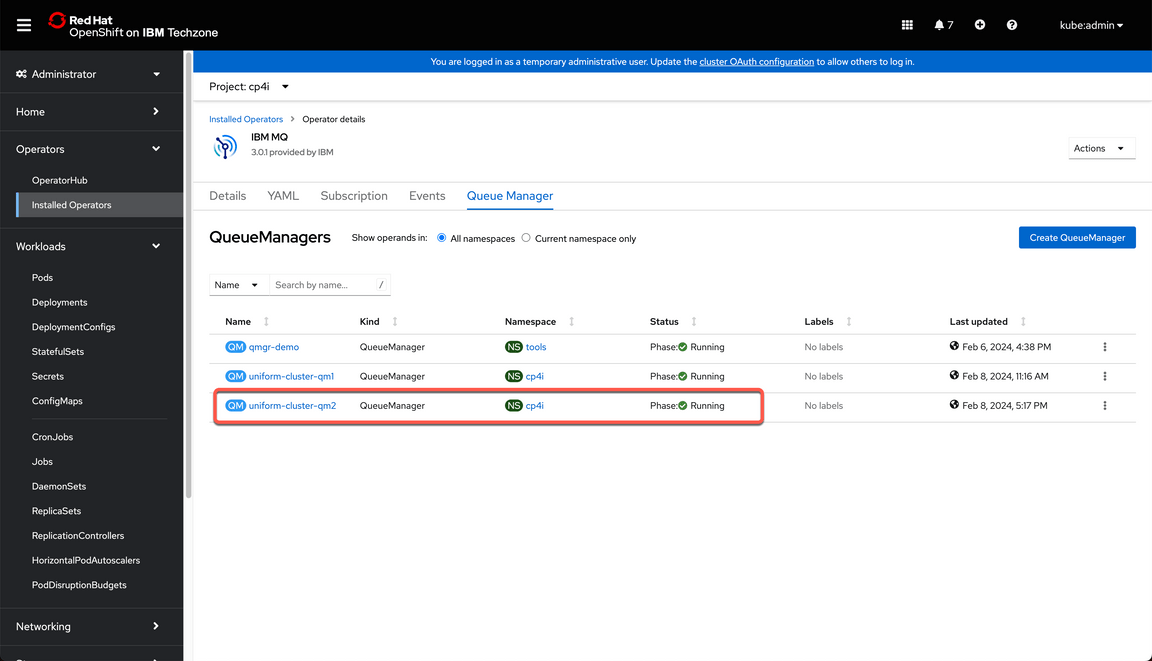
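
Besides counting connections on each queue manager, recent versions of IBM MQ offer the `DISPLAY APSTATUS` MQSC command, which reports per application how many instances the uniform cluster knows about and whether they are considered balanced. The helper below is a sketch that pipes that command into `runmqsc`. It assumes it is run in a queue manager pod terminal and that the application name is `MY-PRODUCER`, as in the narration; the function name is made up for this example.

```shell
# Ask the local queue manager for the cluster-wide status of one application.
# The default application name comes from the demo narration.
show_app_balance() {
  app="${1:-MY-PRODUCER}"
  echo "DISPLAY APSTATUS('${app}') TYPE(APPL)" | runmqsc
}
```

Because the status is shared across the uniform cluster, running `show_app_balance` on either queue manager should give the same overall picture, which saves switching between browser tabs.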

6 - Rebalance connections

| 6.1 | Delete Queue Manager |

|---|---|

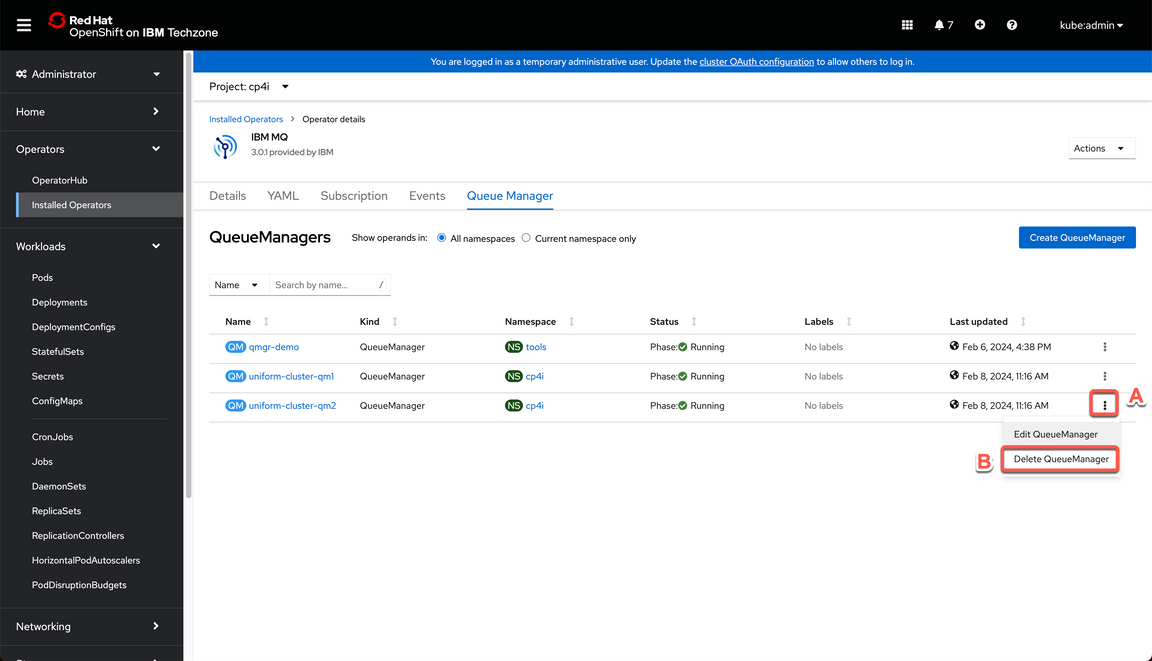

| Narration | We observed how each instance connects to a different queue manager to keep an even distribution, but what would happen if one of the queue managers went down? Let’s find out. Let’s check our queue managers on the Installed Operators page. We could kill one of the active pods for any of the queue managers, but since we have configured Native HA, one of the standby instances would take over and, in the end, each queue manager would keep a couple of connections. So in this case we will go ahead and fully delete the queue manager. |

| Action 6.1.1 | Back in the Administrator perspective browser tab, in the left navigator, open the Operators (A) > Installed Operators (B) menu. |

| Action 6.1.2 | Navigate to the IBM MQ operator again. |

| Action 6.1.3 | Open the Queue Manager tab. |

| Action 6.1.4 | Click the hamburger menu for QM02 (A) and select Delete Queue Manager (B). |

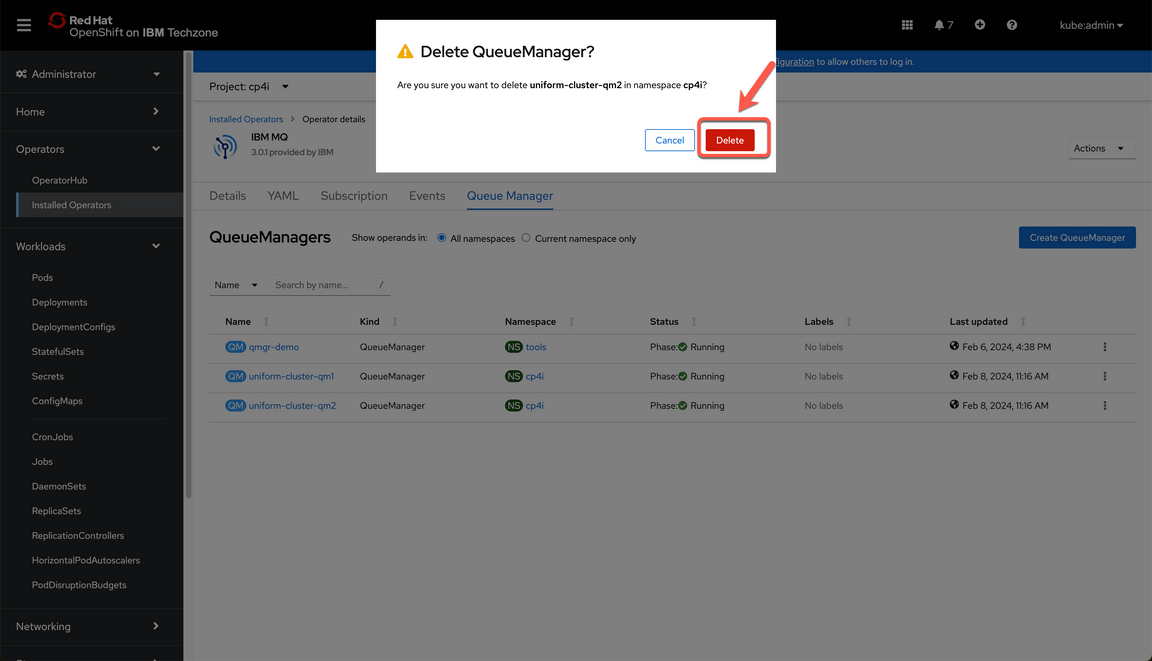

| Action 6.1.5 | Click the Delete button on the warning dialog to confirm you want to delete the queue manager. |

| 6.2 | Review connectivity |

|---|---|

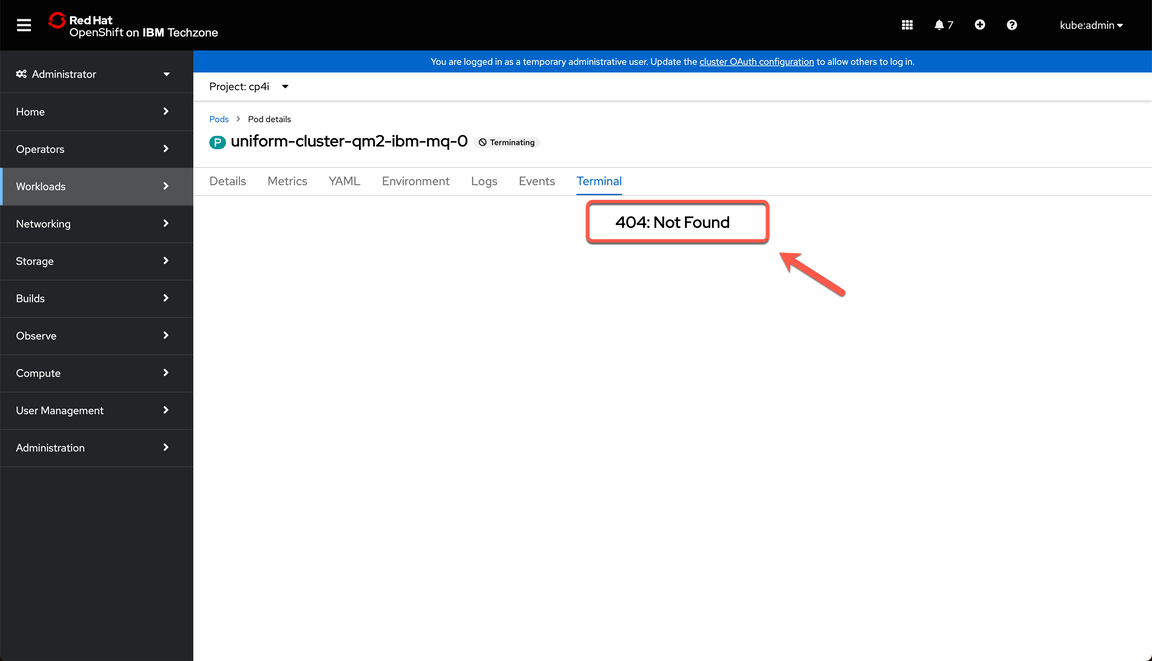

| Narration | If we try to navigate back to the active pod for queue manager 2, we will get an error message, since the queue manager and therefore its pods have already been deleted. However, if we navigate to the active pod for queue manager 1 and submit the command to check the number of active connections, we will see that all the connections are now directed to the remaining queue manager, ensuring the client application can continue sending messages. |

| Action 6.2.1 | Back in the QM02 browser tab, show the error message: “404”. |

| Action 6.2.2 | Back in the QM01 browser tab, refresh the terminal page, enter the command below again, and press Enter. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 6.2.3 | Show that all the connections are now in QM01. |

| 6.3 | Recreate Queue Manager 2 |

|---|---|

| Narration | Now let’s recreate Queue Manager 2. For this demo, we will recreate it using the command line interface, but in a production environment, we can use a GitOps approach. |

| Action 6.3.1 | Run the command below. `oc apply -f instances/${CP4I_VER}/${OCP_TYPE}/13b-qmgr-uniform-cluster-qm2.yaml -n cp4i` |

| Action 6.3.2 | Run the command below. `oc get queuemanager -n cp4i` Note that this will take a few minutes, but at the end you should get a response like this. |

| Action 6.3.3 | Back in the OpenShift Console browser tab with the Administrator perspective, on the Queue Manager tab of the IBM MQ operator, check that the new queue manager is ready. |

| 6.4 | Review the final scenario |

|---|---|

| Narration | Once we confirm both queue managers are up and running, we can go back to the terminal of the active pod for each queue manager to check the number of active connections. A similar behavior would occur if additional queue managers were added to the uniform cluster: the connections would be rebalanced, providing a way to scale horizontally. Great! We have arrived at the conclusion of our demonstration. |

| Action 6.4.1 | Back in the QM01 browser tab, refresh the terminal page, enter the command below again, and press Enter. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 6.4.2 | Show that you have multiple connections in QM01 (not all connections, as in the previous section). |

| Action 6.4.3 | Back in the QM02 browser tab, reopen the terminal page for the QM02 pod ending with 0. Enter the command below again and press Enter. `echo 'dis conn(*) all' | runmqsc | grep -i my` |

| Action 6.4.4 | Show that you have multiple connections in QM02 again. |

Summary

Let’s summarize what we’ve done today.

In the demo we: accessed the Cloud Pak for Integration environment and explored the IBM MQ capabilities; deployed a uniform cluster; deployed an MQ application; validated the uniform cluster connectivity; scaled the MQ application; and rebalanced the connections.

From an operations perspective, we showed how we can easily scale the number of instances of your client applications up and down, without having to reconfigure their connection details and without needing to manually distribute or load balance them.

And here we demonstrated how to quickly and easily grow the queue manager cluster – adding a new queue manager to the cluster without complex configuration.

Thank you for your attention.